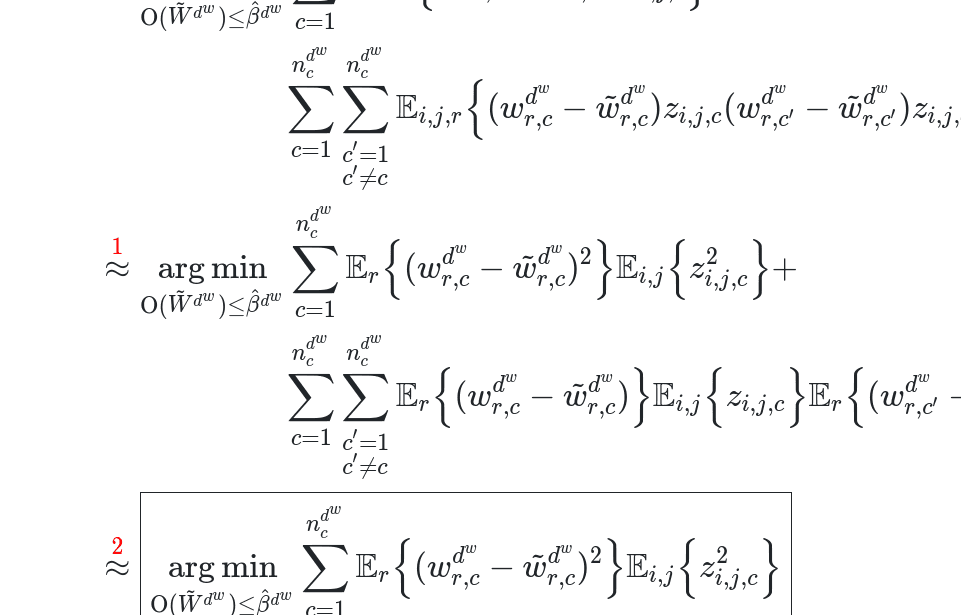

An Introduction to LLM Quantization (TextMine)

LLM quantization converts the LLM's parameters to discrete values and removes LLM capabilities and knowledge that are not relevant to the task the LLM is being developed for. About TextMine: TextMine is an easy-to-use document data extraction tool for procurement, operations, and finance teams.

In this post, I will introduce the field of quantization in the context of language modeling and explore concepts one by one to develop an intuition about the field. We will explore various methodologies, use cases, and the principles behind quantization. This visual guide contains more than 50 custom visuals to help you develop an intuition about quantization.

Large language models (LLMs) are formed of billions of parameters which have been trained on vast quantities of data.

The trade-offs of quantization and how we can benchmark them, and the practical limits of quantization. The basics of quantization: at a high level, quantization simply involves taking a model parameter, which for the most part means the model's weights, and converting it to a lower-precision floating-point or integer value.

Introduction to Quantization by Maxime Labonne: overview of quantization, absmax and zero-point quantization, and LLM.int8() with code. Quantize Llama Models with llama.cpp by Maxime Labonne: tutorial on how to quantize a Llama 2 model using llama.cpp and the GGUF format.

Introduction to the quantization of LLMs: similar to our clock example from the introduction, quantization in LLMs is a technique used to reduce the precision of AI models to a level sufficient for the task by reducing the precision of the parameters (mainly the weights).
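To make the idea of converting weights to lower-precision integers concrete, here is a minimal pure-Python sketch of the two schemes named above, absmax (symmetric) and zero-point (asymmetric) quantization to 8-bit integers. The function names and the sample weights are hypothetical and chosen for illustration only; this is not the code from the articles listed here, and real libraries work on tensors and often quantize per channel or per block rather than over a whole list.

```python
from typing import List, Tuple


def absmax_quantize(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric quantization: scale so the largest |weight| maps to qmax."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = qmax / max_abs if max_abs > 0 else 1.0
    quantized = [round(w * scale) for w in weights]
    return quantized, scale


def absmax_dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate float weights."""
    return [q / scale for q in quantized]


def zeropoint_quantize(
    weights: List[float], bits: int = 8
) -> Tuple[List[int], float, int]:
    """Asymmetric quantization: map [min, max] onto the full integer range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1  # -128..127 for 8 bits
    w_min, w_max = min(weights), max(weights)
    value_range = w_max - w_min
    scale = (qmax - qmin) / value_range if value_range > 0 else 1.0
    zero_point = round(-scale * w_min) + qmin  # integer that represents w_min
    quantized = [
        min(qmax, max(qmin, round(w * scale + zero_point))) for w in weights
    ]
    return quantized, scale, zero_point


def zeropoint_dequantize(
    quantized: List[int], scale: float, zero_point: int
) -> List[float]:
    """Undo the shift and the scaling to recover approximate weights."""
    return [(q - zero_point) / scale for q in quantized]


if __name__ == "__main__":
    sample = [-1.0, -0.5, 0.0, 0.25, 2.0]  # made-up weights, not from a model
    q, s = absmax_quantize(sample)
    print("absmax:", q, absmax_dequantize(q, s))
    zq, zs, zp = zeropoint_quantize(sample)
    print("zero-point:", zq, zeropoint_dequantize(zq, zs, zp))
```

Absmax keeps zero at zero but wastes part of the integer range when the weights are skewed to one side; zero-point uses the whole range at the cost of storing an extra offset. In both cases the dequantized values differ slightly from the originals, which is the precision loss that quantization trades for a smaller model.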

Introduction to quantization: whether you're an AI enthusiast looking to run large language models (LLMs) on your personal device, a startup aiming to serve state-of-the-art models efficiently, or a researcher fine-tuning models for specific tasks, quantization is a key technique to understand. Quantization can be broadly categorized into two main approaches: quantization-aware training (QAT) …

Learn 5 key LLM quantization techniques to reduce model size and improve inference speed without significant accuracy loss. Includes technical details and code snippets for engineers.

LLM Quantization: An Introduction to Quantization Techniques

picoLLM: Towards Optimal LLM Quantization

Ultimate Guide to LLM Quantization for Faster, Leaner AI Models

LLM Quantization Review