CPU vs GPU CUDA Segmentation Results Difference (PyTorch Forums)

Hello PyTorch community, I am running inference with a pre-trained model trained on a segmentation task. If I evaluate on CUDA (on my GPU cluster), I get different results than if I do it on my local Windows machine on the CPU. Here are some visual results. I found two possible reasons behind this, but I am not sure the visual results should vary that much: floating-point precision, and differences in the execution order.

I am trying to figure out the rounding differences between NumPy and PyTorch, GPU and CPU, and float16 and float32 numbers, and what I'm finding confuses me. The basic version is: a = torch.rand(3, 4, dtype=torch.fl.
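The two suspected causes above, precision and execution order, can be illustrated without any GPU. The following is a minimal sketch in plain Python, not the poster's code: the helper names `to_float32` and `sum_float32` are hypothetical, and float32 arithmetic is only emulated by rounding through `struct` after every addition.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def sum_float32(values):
    """Accumulate left to right, rounding to float32 after every addition."""
    total = 0.0
    for v in values:
        total = to_float32(total + to_float32(v))
    return total


# Floating-point addition is not associative, even in float64.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)
print(left_to_right == right_to_left)  # False

# The same four numbers, summed in two different orders in emulated float32.
# The exact sum is 2.0; neither order recovers it.
values = [1e8, 1.0, -1e8, 1.0]
forward = sum_float32(values)            # 1.0
reordered = sum_float32(sorted(values))  # 0.0
print(forward, reordered)
```

A parallel reduction on a GPU typically adds the terms in a different order than a sequential CPU loop, so small discrepancies of this kind are expected. Whether they are large enough to explain a visible difference in a segmentation mask depends on the model.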

C OpenCV CUDA Different Outcomes on CPU vs GPU (Stack Overflow)

This article explains the basic differences between performing tensor operations on the CPU and on the GPU. PyTorch code snippets are included to support the explanation.

You can follow PyTorch's "Transfer Learning Tutorial" and experiment with larger networks, for example by changing torchvision.models.resnet18 to resnet101 or whichever network fits on your GPU. You can toggle between CPU and CUDA and easily see the jump in speed.

In this case it seems your code might just be sensitive to the random seed. Assuming you are sampling on the CPU in one run and on the GPU in the other, the effect might be comparable to using different seeds, because the PRNG implementations differ.

PyTorch CPU vs. GPU: Understanding Different Results. PyTorch, an open-source machine learning library, has gained immense popularity within the AI and data science communities due to its flexibility, ease of use, and dynamic computation graph.
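The random-seed remark above can be checked directly. This is a minimal sketch, assuming PyTorch is installed; the helper `sample` is a hypothetical name, and the CUDA branch only runs when a GPU is available.

```python
import torch


def sample(seed: int, device: str) -> torch.Tensor:
    """Seed the generators, draw four uniform values on `device`, return them on the CPU."""
    torch.manual_seed(seed)  # seeds the CPU generator and the CUDA generators
    return torch.rand(4, device=device).cpu()


cpu_a = sample(0, "cpu")
cpu_b = sample(0, "cpu")
print(torch.equal(cpu_a, cpu_b))  # same seed, same device: identical draws

if torch.cuda.is_available():
    gpu = sample(0, "cuda")
    # Same seed, different generator implementation: the values generally differ.
    print(torch.equal(cpu_a, gpu))

# One option for device-independent inputs: sample on the CPU, then move the tensor.
device = "cuda" if torch.cuda.is_available() else "cpu"
noise = sample(0, "cpu").to(device)
```

Sampling on the CPU and moving the result keeps the random inputs identical across devices, so any remaining difference comes from the computation itself rather than from the random stream.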

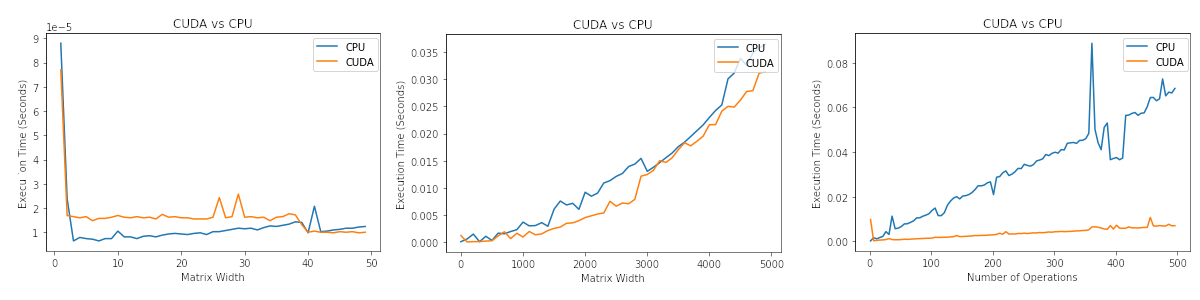

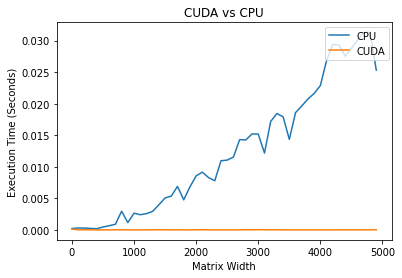

GPU with CUDA vs CPU 3 (Download Scientific Diagram)

It happened in the same program: using the same model and switching between the GPU and the CPU, the results were different. My libtorch version is libtorch1.2.0 GPU.

A profiling comparison between CPU and GPU performance when normalizing images in PyTorch. There are several factors to consider when optimizing preprocessing pipelines, such as data types, data transfer, and parallel processing capabilities. This post explores these factors and provides insights on how to optimize your data pipeline.
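When the same model gives different results on the two devices, a useful first step is to measure how large the difference is. The sketch below uses the Python API rather than libtorch; the `torch.nn.Linear` layer is a hypothetical stand-in for the real model, and the tolerances are assumed values to adjust for your own network.

```python
import torch

torch.manual_seed(0)
model = torch.nn.Linear(16, 4).eval()  # hypothetical stand-in for the real model
x = torch.randn(8, 16)                 # inputs created once, on the CPU

device = "cuda" if torch.cuda.is_available() else "cpu"

with torch.no_grad():
    out_cpu = model(x)                              # reference result on the CPU
    out_dev = model.to(device)(x.to(device)).cpu()  # same weights, same inputs

max_abs_diff = (out_cpu - out_dev).abs().max().item()
print(f"max abs difference: {max_abs_diff:.3e}")

# Assumed tolerances; float32 results rarely match bit for bit across devices.
print(torch.allclose(out_cpu, out_dev, rtol=1e-4, atol=1e-5))
```

Differences near float32 rounding error are usually harmless. Much larger ones suggest looking elsewhere, for example at preprocessing, at layers still in training mode, or at random sampling inside the model.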

Jrtechs CUDA vs CPU Performance
