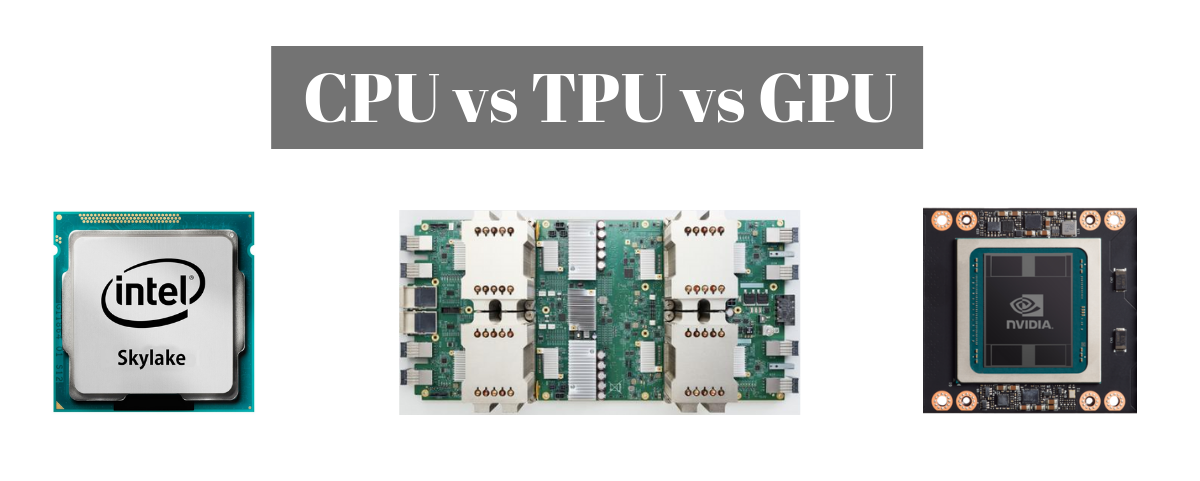

Performance Analysis and CPU vs GPU Comparison for Deep Learning

Various deep learning frameworks, including TensorFlow, PyTorch, and others, depend on CUDA for GPU support and cuDNN for deep neural network computations. When these underlying technologies improve, the performance gains are shared across frameworks, but frameworks still differ in how well they scale to multiple GPUs and nodes.

GPUs are always the center of attention when it comes to training deep learning models, but if you only have access to a CPU, how much time would you save by upgrading to a GPU? NVIDIA has ...
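One way to answer the "how much time would you save" question on your own machine is to time the same workload on both devices. The sketch below is a minimal illustration, not a rigorous benchmark: the helper names `median_seconds`, `speedup`, and `matmul_workload` are hypothetical, the matrix size is arbitrary, and the GPU path assumes PyTorch is installed with CUDA support. Without it, the script only reports that there is nothing to compare.

```python
import statistics
import time


def median_seconds(fn, repeats=5, warmup=1):
    """Median wall-clock time of fn() over `repeats` runs, after `warmup` untimed runs."""
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run was than the CPU run."""
    return cpu_seconds / gpu_seconds


def matmul_workload(device, n=1024):
    """Build a zero-argument matrix-multiply workload on `device` (requires PyTorch)."""
    import torch  # imported lazily so the helpers above work without PyTorch

    a = torch.randn(n, n, device=device)
    b = torch.randn(n, n, device=device)

    def run():
        _ = a @ b
        if device == "cuda":
            # GPU kernels launch asynchronously; wait so the timing is honest.
            torch.cuda.synchronize()

    return run


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        cpu_t = median_seconds(matmul_workload("cpu"))
        gpu_t = median_seconds(matmul_workload("cuda"))
        print(f"CPU {cpu_t:.4f}s, GPU {gpu_t:.4f}s, speedup {speedup(cpu_t, gpu_t):.1f}x")
    else:
        print("PyTorch with CUDA not available; nothing to compare.")
```

A single matrix multiply is only a rough proxy for training. The measured ratio depends on the model, batch size, and data pipeline, so treat the result as an indication rather than a prediction.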

CPU vs GPU CUDA Segmentation Results Difference - PyTorch Forums

In conclusion, the demonstration illustrates the substantial difference in training speed between CPU and GPU when using TensorFlow for deep learning tasks. GPU-accelerated training significantly outperforms CPU-based training, which shows the importance of GPU capabilities for shortening the AI model training life cycle.

CPU vs GPU beyond cores: memory bandwidth, the unsung hero. Another factor tipping the scales in favor of GPUs is memory bandwidth. Deep learning models often require processing large and ...

AMD GPU for deep learning? Hello everyone, I'm in the process of planning a new PC build, primarily for gaming and general use. However, I'm also keen on exploring deep learning, AI, and text-to-image applications. It seems that NVIDIA GPUs, especially those supporting CUDA, are the standard choice for these tasks.

How much faster is a GPU than a CPU for deep learning? Deep learning has transformed a plethora of industries, from healthcare and finance to entertainment and autonomous systems. At the heart of this transformation lies the power of specialized hardware, most notably graphics processing units (GPUs) and central processing units (CPUs).
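The memory bandwidth point above can be made concrete with simple arithmetic: reading a block of data once cannot take less time than its size divided by the memory bandwidth. The sketch below assumes purely hypothetical figures (a model with one billion float32 parameters, 50 GB/s for CPU memory, 500 GB/s for GPU memory). These are placeholders for illustration, not measurements of any real hardware, and `min_transfer_seconds` is a name introduced here.

```python
def min_transfer_seconds(num_bytes, bandwidth_gb_per_s):
    """Lower bound on the time to stream `num_bytes` once at the given bandwidth."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


# Hypothetical model: one billion parameters stored as 4-byte float32 values.
weights_bytes = 1_000_000_000 * 4

# Hypothetical bandwidths in GB/s; substitute the figures for your own hardware.
for label, bandwidth in (("CPU memory", 50.0), ("GPU memory", 500.0)):
    seconds = min_transfer_seconds(weights_bytes, bandwidth)
    print(f"{label}: at least {seconds * 1000:.0f} ms to read the weights once")
```

Under these assumed numbers, the tenfold bandwidth gap translates directly into a tenfold gap in the minimum time per pass over the weights, regardless of how many cores are available.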

GPU vs CPU in Deep Learning - GPU vs CPU, by Deepak - Medium

CPU vs. GPU for machine learning: compared to general-purpose central processing units (CPUs), powerful graphics processing units (GPUs) are typically preferred for demanding artificial intelligence (AI) applications such as machine learning (ML), deep learning (DL) and neural networks.

Time to put that graphics powerhouse to work! Why GPU acceleration matters for Ollama: running AI models on a CPU feels like hauling cargo with a bicycle when you own a freight truck. Installing Ollama with NVIDIA GPU support transforms your machine learning experience from sluggish to lightning fast.
