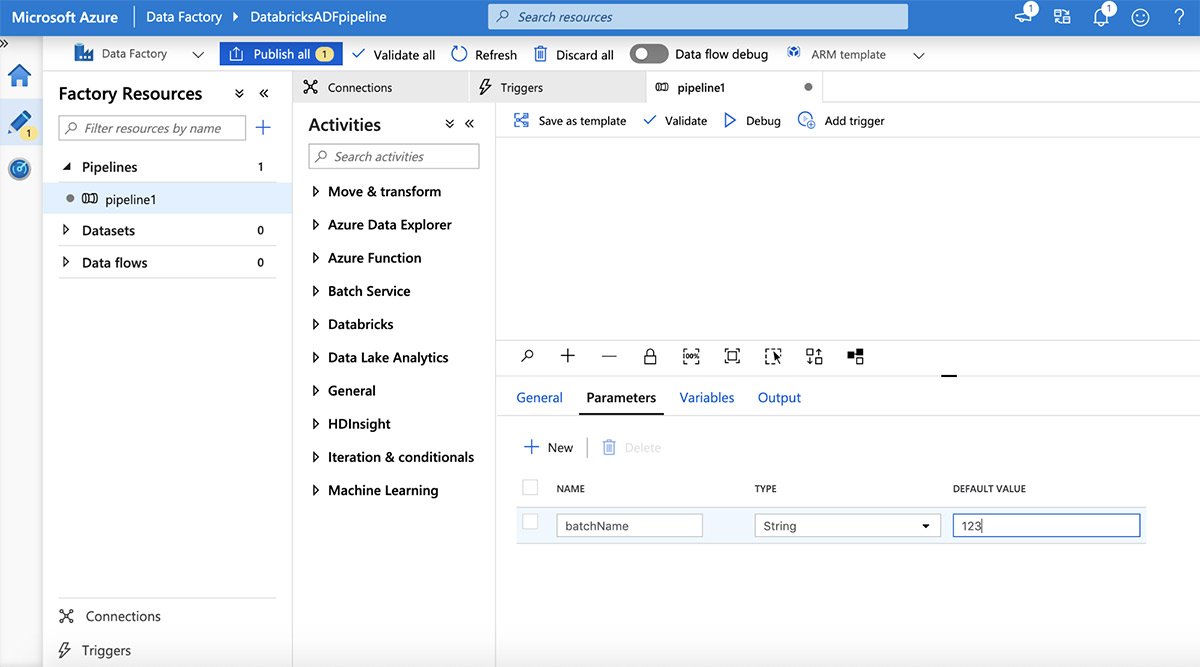

Data Engineering Azure Data Factory Pipelines Databricks ETL

Create ETL pipelines for batch and streaming data with Azure Databricks to simplify data lake ingestion at any scale. Azure Data Factory provides data transformation capabilities through its data flow feature, which lets users apply transformations directly within the pipeline. While powerful, these transformations are generally better suited to ETL processes and may not be as extensive or flexible as those offered by Databricks.

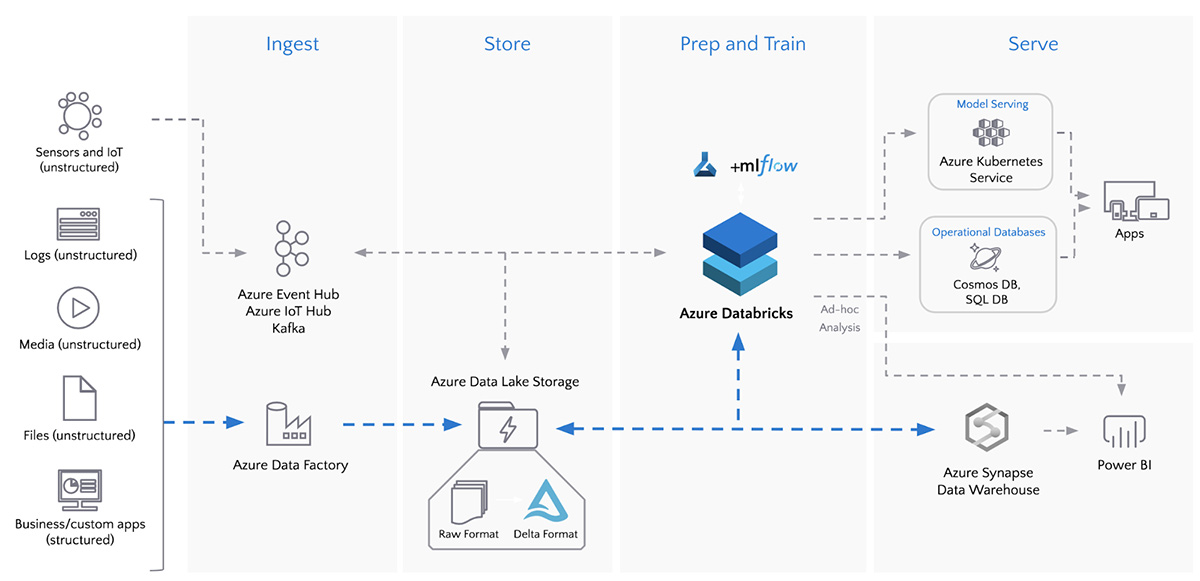

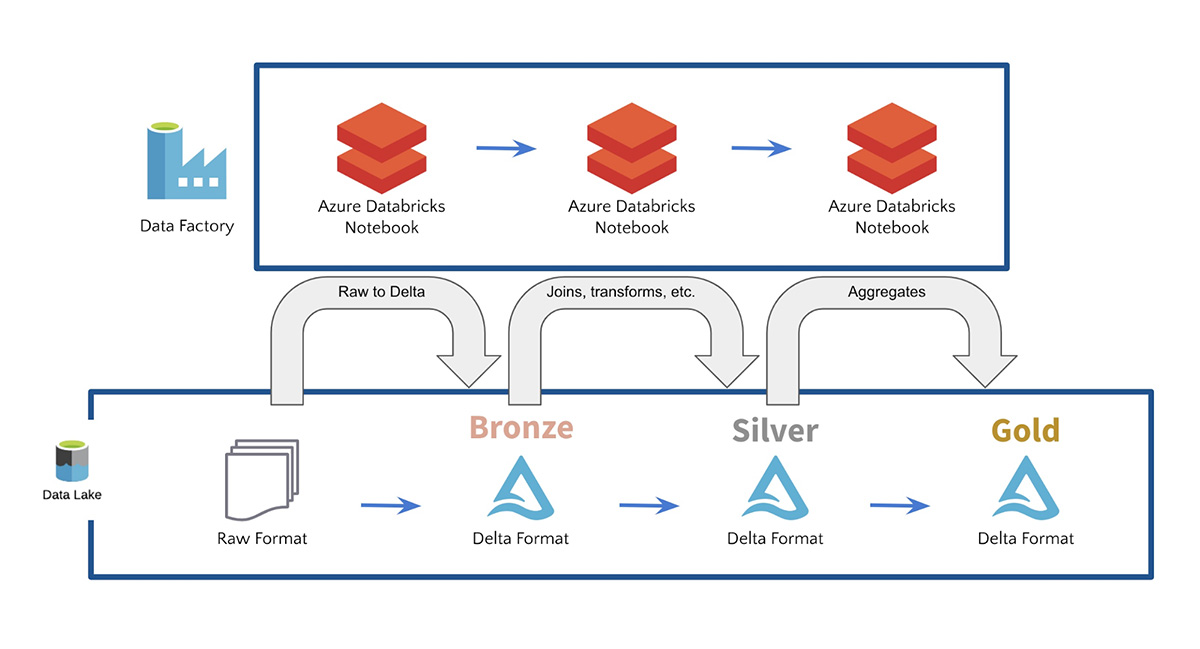

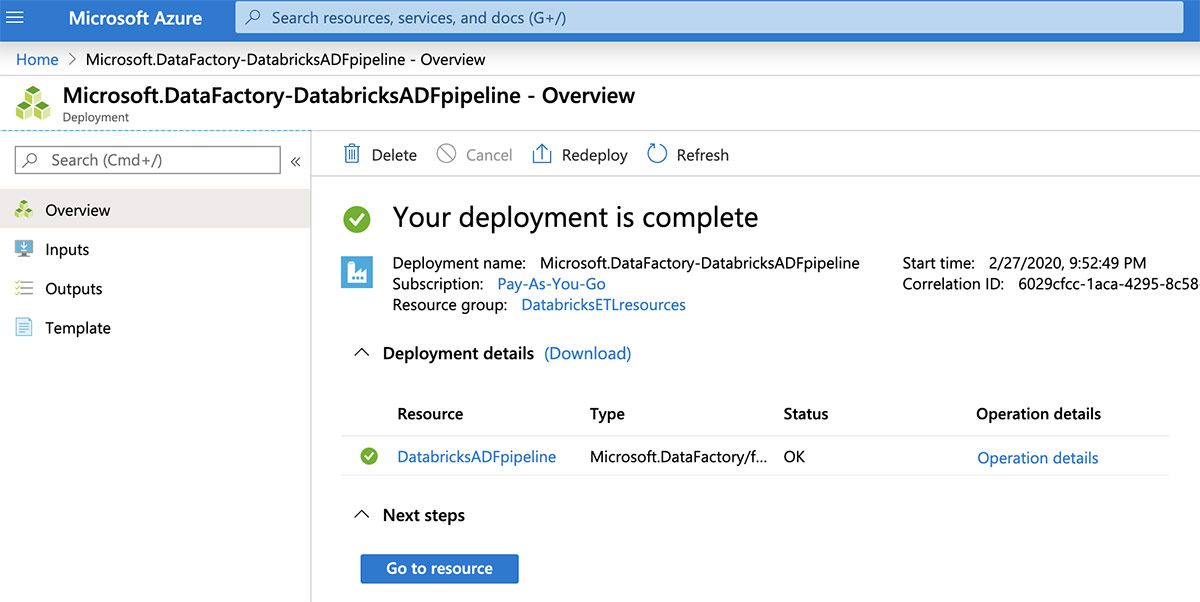

Connect 90 Data Sources To Data Lake Databricks Blog

In this pipeline-building project, the monthly raw sales data was ingested manually into the data lake at the end of each month. First, we need to create a storage account in Azure so that we can …

The goal is to create an Azure solution that takes an on-premises database, such as one hosted on Microsoft SQL Server and managed through SQL Server Management Studio (SSMS), and moves it to the cloud. It does so by building an ETL pipeline using Azure Data Factory, Azure Databricks, and Azure Synapse Analytics. This solution can be …

With its integration with Azure services like Data Factory and Databricks, organizations can ingest, transform, and analyze data at scale. In the subsequent sections, we'll walk through building an end-to-end ETL pipeline using these Azure services, from data ingestion to analytics and decision making.

Planning to build ETL pipelines to process large-scale data? This course will teach you to build, run, optimize, and automate ETL pipelines with Azure Databricks and Azure Data Factory, while using Delta Lake for reliable storage and query performance.
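To make the monthly sales scenario above a little more concrete, here is a minimal sketch of the extract, transform, and load steps in plain Python. It is only a stand-in for logic that would normally run in a Databricks notebook against files in the data lake. The sample rows, the column names (`order_id`, `order_date`, `region`, `amount`), and the rule of skipping rows with a missing amount are all assumptions made for illustration, not details from the original project.

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Hypothetical raw monthly extract; column names are assumed for illustration.
RAW_SALES_CSV = """order_id,order_date,region,amount
1001,2024-01-03,West,120.50
1002,2024-01-15,East,80.00
1003,2024-01-20,West,
1004,2024-01-28,East,19.50
"""


def extract(raw_text):
    """Read raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Skip rows with a missing amount and total the rest per (month, region)."""
    totals = defaultdict(Decimal)
    for row in rows:
        if not row["amount"]:
            continue  # assumed cleaning rule: ignore incomplete records
        month = row["order_date"][:7]  # "YYYY-MM"
        totals[(month, row["region"])] += Decimal(row["amount"])
    return dict(totals)


def load(totals):
    """Render the curated totals as CSV text, sorted for stable output."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["month", "region", "total_amount"])
    for (month, region), total in sorted(totals.items()):
        writer.writerow([month, region, str(total)])
    return out.getvalue()


monthly_totals = transform(extract(RAW_SALES_CSV))
curated_csv = load(monthly_totals)
print(curated_csv)
```

In a real pipeline the extract step would read from the storage account and the load step would write to a curated zone (for example as a Delta table); the in-memory strings here just keep the sketch self-contained.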

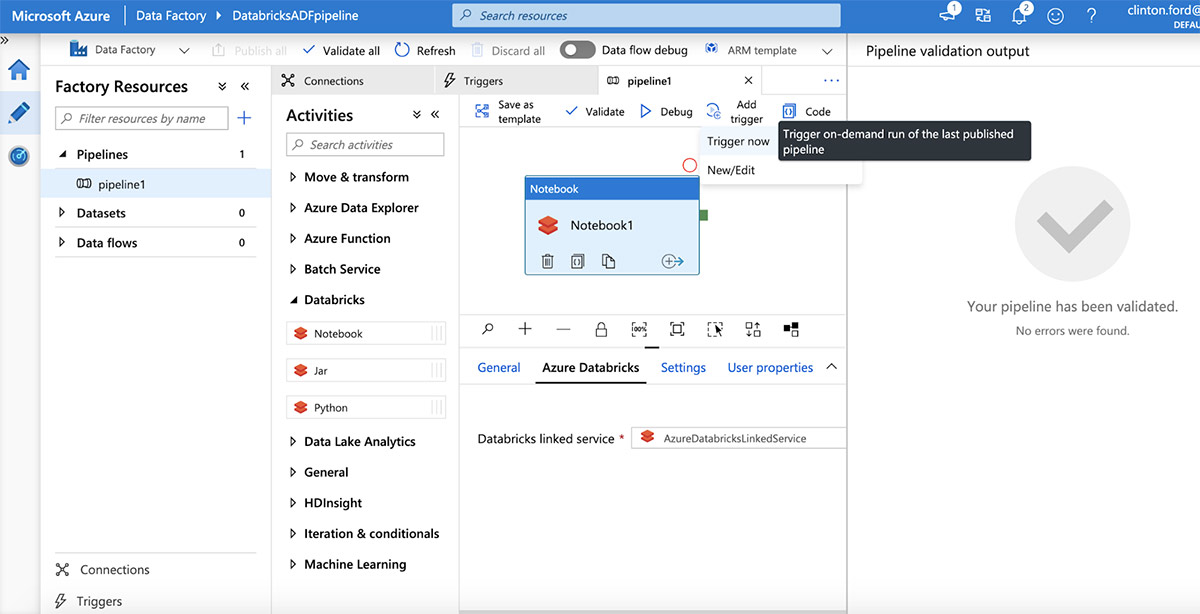

The Databricks Job activity in Azure Data Factory is the recommended method for orchestrating jobs in Databricks. This integration provides immediate business value and cost savings by giving access to the entire Data Intelligence Platform. Users whose ETL frameworks rely on Notebook activities should migrate to Databricks Workflows and the ADF Databricks Job activity.

This post was authored by Leo Furlong, a Solutions Architect at Databricks. Azure Data Factory (ADF), Synapse Pipelines, and Azure Databricks make a rock-solid combo for building your lakehouse on Azure Data Lake Storage Gen2 (ADLS Gen2). ADF provides the capability to natively ingest data to the Azure cloud from over 100 different data sources. ADF also provides graphical data orchestration.
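The source does not show how the ADF Databricks Job activity is configured, so the following is not that configuration. As a rough illustration of what "orchestrating a job" amounts to, this sketch builds (without sending) a `run-now` request for an existing job through the Databricks Jobs REST API. The workspace URL, the token, the job ID `123`, and the `run_month` parameter are placeholders chosen for the example.

```python
import json
import urllib.request


def build_run_now_request(workspace_url, token, job_id, notebook_params=None):
    """Build, but do not send, a Jobs API run-now request for an existing job."""
    payload = {"job_id": job_id}
    if notebook_params:
        payload["notebook_params"] = notebook_params
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.1/jobs/run-now",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# All values below are placeholders, not real credentials or identifiers.
request = build_run_now_request(
    "https://adb-0000000000000000.0.azuredatabricks.net",
    "<personal-access-token>",
    job_id=123,
    notebook_params={"run_month": "2024-01"},
)

print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# Passing the request to urllib.request.urlopen would submit it to the workspace.
```

An orchestrator such as ADF handles authentication, retries, and run monitoring around a call like this, which is a large part of why a dedicated activity is preferable to hand-rolled requests.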
