Databricks Takes Apache Spark To The Cloud Nabs 33m

First, install the Databricks Python SDK and configure authentication per the docs here. Run pip install databricks-sdk, then you can use the approach below to print out secret values. Because the code doesn't run in Databricks, the secret values aren't redacted. For my particular use case, I wanted to print values for all secrets in a given scope.

I'm trying to connect from a Databricks notebook to an Azure SQL Data Warehouse using the pyodbc Python library. When I execute the code I get this error: error: ('01000', "[01000] [unixodbc][driver.
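For the secrets snippet above, a minimal sketch of reading every secret in one scope from outside Databricks might look like the following. It assumes the `databricks-sdk` package is installed and authentication is already configured, and that the SDK returns secret values base64-encoded (as the underlying REST API does). The function name `read_scope_secrets` and the scope name used in the usage note are hypothetical, not taken from the original answer.

```python
import base64
from typing import Any, Dict, Optional


def read_scope_secrets(scope: str, client: Optional[Any] = None) -> Dict[str, str]:
    """Return {key: decoded value} for every secret in `scope`.

    `client` is expected to behave like databricks.sdk.WorkspaceClient.
    If it is omitted, a WorkspaceClient is created from the local
    authentication configuration (assumption: that is already set up).
    """
    if client is None:
        # Imported lazily so the helper can be exercised without the SDK.
        from databricks.sdk import WorkspaceClient

        client = WorkspaceClient()

    values: Dict[str, str] = {}
    for meta in client.secrets.list_secrets(scope=scope):
        response = client.secrets.get_secret(scope=scope, key=meta.key)
        # Assumption: the value comes back base64-encoded, so decode it.
        values[meta.key] = base64.b64decode(response.value).decode("utf-8")
    return values
```

Calling `read_scope_secrets("my-scope")` and printing the returned dictionary would then show the values unredacted, since the code runs outside a Databricks notebook. Treat the output as sensitive.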

Is there any method to write a Spark DataFrame directly to xls/xlsx format? Most of the examples on the web are for pandas DataFrames, but I would like to use Spark datafr.

I am able to execute a simple SQL statement using PySpark in Azure Databricks, but I want to execute a stored procedure instead. Below is the PySpark code I tried. #initialize pyspark import findsp.

The data lake is hooked to Azure Databricks. The requirement is that Azure Databricks be connected to a C# application, so that queries can be run and results retrieved entirely from the C# application. The way we are currently tackling the problem is that we have created a workspace on Databricks with a number of queries that need to be executed.

I am trying to convert a SQL stored procedure to a Databricks notebook. In the stored procedure below, 2 statements are to be implemented. Here the tables 1 and 2 are Delta Lake tables in Databricks c.

Getting Started With Apache Spark On Databricks Databricks

I have connected a GitHub repository to my Databricks workspace, and am trying to import a module that's in this repo into a notebook also within the repo. The structure is as such: repo name chec.

There is a lot of confusion regarding the use of parameters in SQL, but I see Databricks has started harmonizing heavily (for example, 3 months back, IDENTIFIER() didn't work with catalog, now it does). Check my answer for a working solution.
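For the repo import question above, one common approach is to make sure the repo root is on `sys.path` before importing. The sketch below illustrates that idea in plain Python. The helper name `import_from_repo` and any paths or module names passed to it are hypothetical; the original repo layout is truncated in the snippet and is not reproduced here.

```python
import importlib
import sys
from pathlib import Path
from types import ModuleType


def import_from_repo(repo_root: str, module_name: str) -> ModuleType:
    """Import `module_name` after making `repo_root` importable.

    `repo_root` is the directory that contains the module or package.
    """
    root = str(Path(repo_root).resolve())
    if root not in sys.path:
        # Put the repo root first so it wins over same-named modules.
        sys.path.insert(0, root)
    # Make sure files created after interpreter start are picked up.
    importlib.invalidate_caches()
    return importlib.import_module(module_name)
```

In a notebook this would be called once near the top with the repo's root directory, after which ordinary `import` statements for modules under that root should also resolve.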

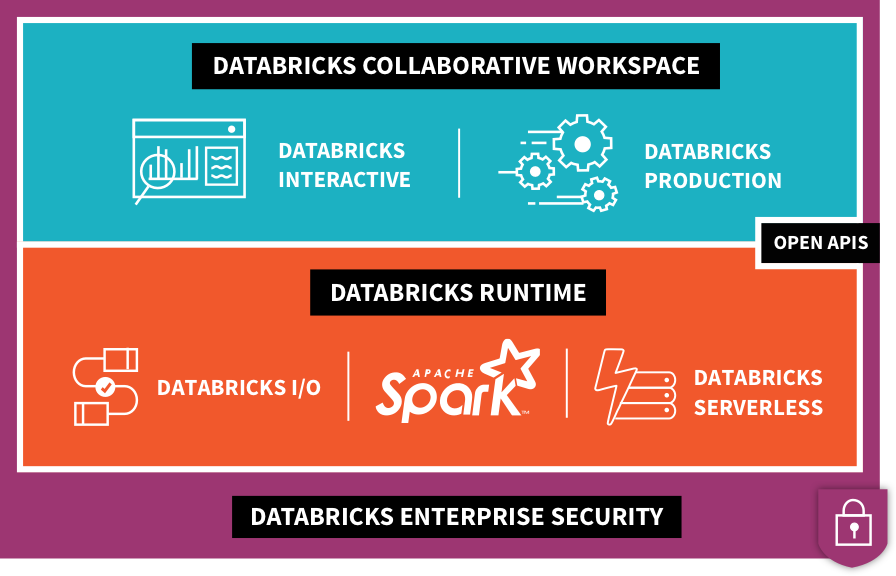

Comparing Databricks To Apache Spark Databricks

An Introduction To Databricks Apache Spark

Beginning Apache Spark Using Azure Databricks Expert Training

How To Start Big Data With Apache Spark Simple Talk