Quantization In The Context Of Deep Learning And Neural Networks

Quantization is an optimization technique aimed at reducing the computational load and memory footprint of neural networks without significantly impacting model accuracy. It converts a model's high-precision floating-point numbers into lower-precision representations such as integers, which results in faster inference, lower energy consumption, and reduced storage.
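The float-to-integer conversion described above can be sketched in plain Python. This is a minimal illustration that assumes per-tensor affine (asymmetric) quantization to unsigned 8-bit integers with a scale and a zero point, which is one common scheme among several. The function names are hypothetical. Dequantizing recovers each value to within half a quantization step. Storing 1-byte integers instead of 4-byte floats cuts storage by a factor of 4.

```python
from array import array


def quantize_affine(values, num_bits=8):
    """Affine (asymmetric) quantization of floats to unsigned integers."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Widen the range to include 0.0 so that zero is represented exactly.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) if hi > lo else 1.0
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_affine(q, scale, zero_point):
    """Map integers back to approximate floats: scale * (q - zero_point)."""
    return [scale * (x - zero_point) for x in q]


weights = [-1.2, -0.3, 0.0, 0.45, 2.1]
q, scale, zero_point = quantize_affine(weights)
restored = dequantize_affine(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, zero_point, max_error <= scale / 2)

# Storage: 4-byte floats ("f") versus 1-byte unsigned integers ("B").
float_bytes = len(array("f", weights)) * array("f").itemsize
int_bytes = len(array("B", q)) * array("B").itemsize
print(float_bytes, int_bytes)
```

The simplest option is to take the range from the tensor's own minimum and maximum. Widening that range to include 0.0 guarantees that zero is represented exactly, and zeros are common in padding and ReLU outputs.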

Convolutional Neural Networks Quantization With Attention

Quantization optimizes deep learning models by reducing their numerical precision from floating-point numbers to integers. The lower precision shrinks the model and its computational requirements. This makes quantization an essential step when deploying models on edge devices or in other environments where compute resources are limited.

Neural network quantization is one of the most effective ways of achieving these savings, but the additional noise it induces can degrade accuracy. One white paper on the topic introduces state-of-the-art algorithms for mitigating the impact of quantization noise on the network's performance while keeping weights and activations at low bit widths.

Static symmetric linear quantization has been used for the convolution operations in neural networks for a long time, and it has been very successful in many use cases. Here "static" means the quantization parameters are fixed before inference, "symmetric" means the zero point is 0, and "linear" means the integer levels are evenly spaced. Quantizing to 8 bits is a common way to reduce the size and memory footprint of neural network models.
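In the static symmetric linear scheme the zero point is fixed at 0. The integer products can therefore be accumulated directly and rescaled once by the product of the two scales. The sketch below is a minimal pure-Python illustration under these assumptions:

- scales are per-tensor;
- 8-bit signed levels are restricted to [-127, 127];
- the activation scale is calibrated once from a few hypothetical sample inputs.

A real convolution kernel would apply the same multiply-accumulate at every output position. The function names are hypothetical.

```python
def symmetric_scale(values, num_bits=8):
    """Per-tensor scale for signed symmetric quantization (zero point fixed at 0)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits; -128 is left unused
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize_symmetric(values, scale, num_bits=8):
    """Round to the nearest integer level and clamp to [-qmax, qmax]."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]


def quantized_dot(x, w, x_scale, w_scale):
    """Integer multiply-accumulate followed by a single floating-point rescale."""
    qx = quantize_symmetric(x, x_scale)
    qw = quantize_symmetric(w, w_scale)
    acc = sum(a * b for a, b in zip(qx, qw))  # an int32 accumulator in a real kernel
    return acc * x_scale * w_scale


# "Static": the activation scale is fixed once from calibration data, not per input.
calibration_batches = [[0.5, -1.0, 0.25], [0.9, 0.1, -0.7]]
x_scale = symmetric_scale([v for batch in calibration_batches for v in batch])

w = [0.2, -0.4, 0.8]
w_scale = symmetric_scale(w)

x = [0.6, -0.5, 0.3]
exact = sum(a * b for a, b in zip(x, w))
approx = quantized_dot(x, w, x_scale, w_scale)
print(exact, approx)
```

Inputs larger than anything seen during calibration are clamped to ±127. That clamping is the main risk of fixing activation scales statically.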

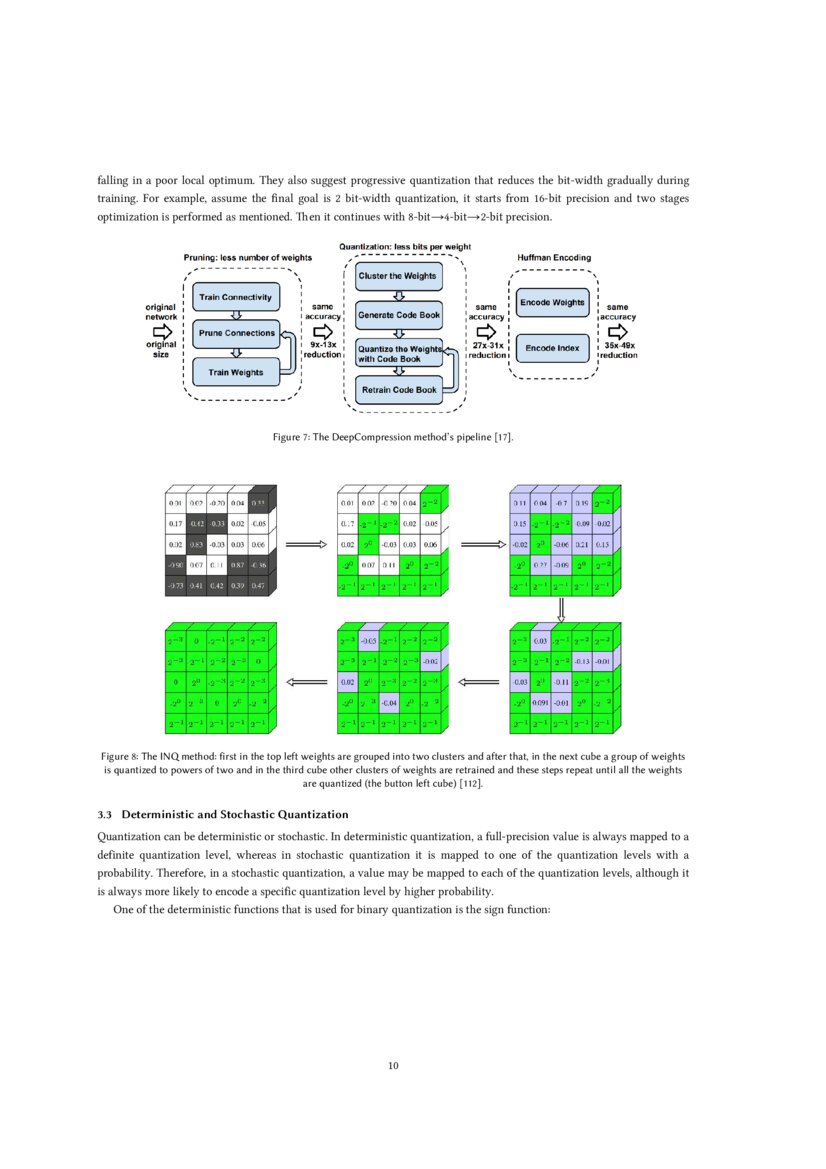

Learning Accurate Low Bit Deep Neural Networks With Stochastic

Before starting the quantization workflow, consider using the structural compression techniques of pruning and projection. For more information on compression techniques in the Deep Learning Toolbox Model Compression Library, see Reduce Memory Footprint of Deep Neural Networks.

Since 2021, NVIDIA's TensorRT has included a quantization toolkit that performs both quantization-aware training (QAT) and quantized inference with 8-bit model weights. For a more in-depth discussion of the principles of quantizing neural networks, refer to the 2018 whitepaper Quantizing Deep Convolutional Networks for Efficient Inference by Krishnamoorthi.
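Quantization-aware training simulates quantization during training so the model learns to tolerate the rounding noise. A common way to do this is "fake quantization" (quantize, then immediately dequantize) in the forward pass, combined with a straight-through estimator (STE) that treats rounding as the identity in the backward pass.

The sketch below is a generic pure-Python illustration of that idea on a single toy linear model. It is not the API of NVIDIA's toolkit. The function names, the fixed scale of 0.01, and the learning rate are hypothetical choices.

```python
def fake_quantize(values, scale, num_bits=8):
    """Quantize then immediately dequantize so the forward pass sees rounding error."""
    qmax = 2 ** (num_bits - 1) - 1
    return [scale * max(-qmax, min(qmax, round(v / scale))) for v in values]


def ste_backward(values, upstream_grad, scale, num_bits=8):
    """Straight-through estimator: treat rounding as identity, but zero the
    gradient for values outside the clamping range."""
    limit = (2 ** (num_bits - 1) - 1) * scale
    return [g if -limit <= v <= limit else 0.0 for v, g in zip(values, upstream_grad)]


def train_step(w, x, y, scale, lr=0.05):
    """One SGD step on squared error, updating float 'latent' weights."""
    wq = fake_quantize(w, scale)
    pred = sum(a * b for a, b in zip(wq, x))
    grad_wq = [2.0 * (pred - y) * xi for xi in x]  # d(loss)/d(wq)
    grad_w = ste_backward(w, grad_wq, scale)       # pass gradient through the rounding
    new_w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    return new_w, (pred - y) ** 2


w = [0.1, -0.2]          # float latent weights updated by the optimizer
x, y = [1.0, 2.0], 0.7   # a single toy training example
scale = 0.01             # fixed weight scale (assumption; toolkits calibrate or learn it)
losses = []
for _ in range(50):
    w, loss = train_step(w, x, y, scale)
    losses.append(loss)

# Values an 8-bit deployment would represent: integer levels times the scale.
deployed = fake_quantize(w, scale)
print(losses[0], losses[-1], deployed)
```

The optimizer keeps full-precision weights throughout training. Only their quantized versions take part in the forward pass, so the final loss already reflects the rounding that the deployed 8-bit model will see.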
