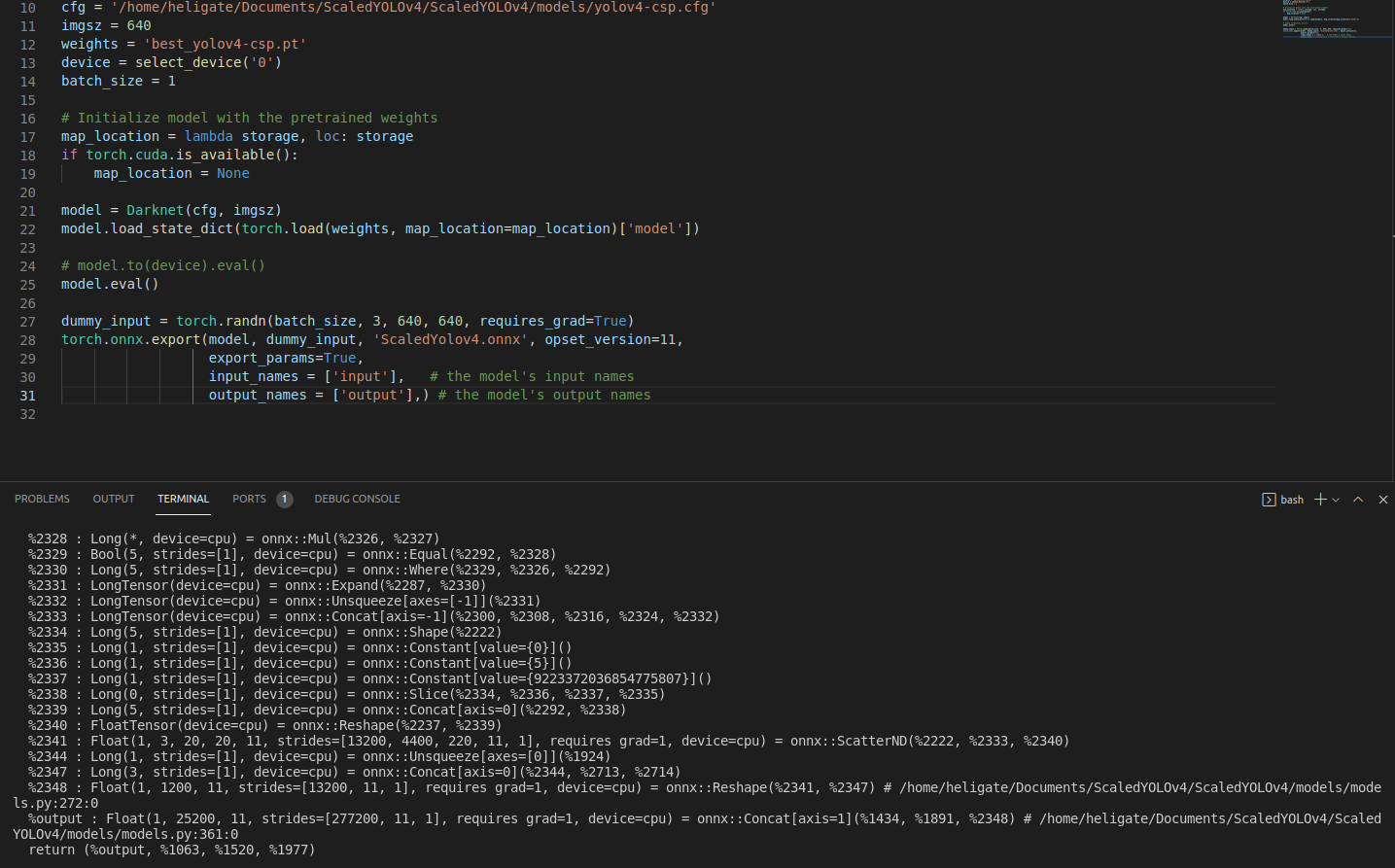

Help Torch Onnx Export Can Not Export Onnx Model Deployment

Issue #1047, "Export tensorflow lite model don't use onnx model input names and output names", was opened by linmeimei0512 on Oct 27, 2022 (status: open, 1 comment). When converting a model from PyTorch to ONNX, the best practice is to name the model's input and output layers explicitly by passing the `input_names` and `output_names` parameters to `torch.onnx.export()`.
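A minimal sketch of that advice, assuming PyTorch is installed. The helper names (`named_export_kwargs`, `export_with_names`, `export_small_classifier`), the tensor names `"input"` and `"output"`, and the stand-in model are all hypothetical and chosen only for illustration; `input_names` and `output_names` are the actual `torch.onnx.export()` parameters.

```python
def named_export_kwargs(input_names, output_names):
    """Keyword arguments that name the ONNX graph's inputs and outputs."""
    return {
        "input_names": list(input_names),
        "output_names": list(output_names),
    }


def export_with_names(model, dummy_input, path,
                      input_names=("input",), output_names=("output",)):
    """Export `model` to ONNX at `path` with explicit tensor names."""
    import torch  # imported lazily; requires PyTorch to be installed

    model.eval()  # export in inference mode
    torch.onnx.export(
        model,
        (dummy_input,),  # example input used to trace the model
        path,
        **named_export_kwargs(input_names, output_names),
    )
    return path


def export_small_classifier(path="model.onnx"):
    """Hypothetical usage: a small stand-in model with two linear layers."""
    import torch
    from torch import nn

    model = nn.Sequential(
        nn.Flatten(),
        nn.Linear(28 * 28, 64),
        nn.ReLU(),
        nn.Linear(64, 10),
    )
    dummy_input = torch.randn(1, 1, 28, 28)  # one MNIST-sized image
    return export_with_names(model, dummy_input, path)
```

Naming the tensors at export time means downstream converters and runtimes can refer to them by a stable name rather than an auto-generated one, although whether a given converter preserves those names is a separate question.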

Bug Report Keyerror While Splitting Certain Onnx Model Using Utils

See the Python API reference for full documentation.

SavedModel: convert a TensorFlow saved model with the command `python -m tf2onnx.convert --saved-model path/to/savedmodel --output dst/path/model.onnx --opset 13`. Here `path/to/savedmodel` should be the path to the directory containing `saved_model.pb`. See the CLI reference for full documentation.

TFLite: tf2onnx has support for converting TFLite models.

This notebook demonstrates the conversion process from an .onnx model (exported from MATLAB) to a .tflite model (to be used within TensorFlow Lite, on an Android or iOS device). In addition to conversion, the notebook contains cells for running inference on a set of test images to validate that predictions remain consistent across the converted models. Note: TensorFlow's API is constantly ...

For future model versatility, it would be a good idea to consider moving to `torch.onnx.dynamo_export` at an early stage.

Google AI Edge Torch: `ai-edge-torch` is a Python library that supports converting PyTorch models into the .tflite format, which can then be run with TensorFlow Lite and MediaPipe.

Here we have used the MNIST dataset and built a model using two linear layers. The model is trained and optimized, and the loss is calculated. We then provide a dummy input so that the exporter knows the type and shape of the data the model will process when it is loaded again. Finally, we export the model to ONNX format along with additional arguments and operators.
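The tf2onnx SavedModel command quoted above can also be driven from a script. The following is a sketch under assumptions: it presumes tf2onnx and TensorFlow are installed in the current interpreter, and the helper names `tf2onnx_command` and `convert_saved_model` are hypothetical. Only the `--saved-model`, `--output`, and `--opset` flags from the command above are used.

```python
import subprocess
import sys


def tf2onnx_command(saved_model_dir, output_path, opset=13):
    """Build the argument list for the tf2onnx SavedModel conversion."""
    return [
        sys.executable, "-m", "tf2onnx.convert",
        "--saved-model", str(saved_model_dir),  # directory holding saved_model.pb
        "--output", str(output_path),           # destination .onnx file
        "--opset", str(opset),
    ]


def convert_saved_model(saved_model_dir, output_path, opset=13):
    """Run the conversion; raises CalledProcessError if tf2onnx fails."""
    cmd = tf2onnx_command(saved_model_dir, output_path, opset)
    subprocess.run(cmd, check=True)
    return output_path
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when paths contain spaces.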

Onnx Model Running Error On Tensorflow Issue 1697 Onnx Onnx Github

Because TensorFlow cannot control the output order of input ops and output ops, the order of the written op names can end up reversed in some situations. This feature is disabled by default because of the uncertainty caused by issues with the TensorFlow specification.

Trtexec Ignores Inputioformat With Onnx Model Tensorrt Nvidia

Duplicated Output Name In Onnx Model Issue 1324 Onnx Tensorflow