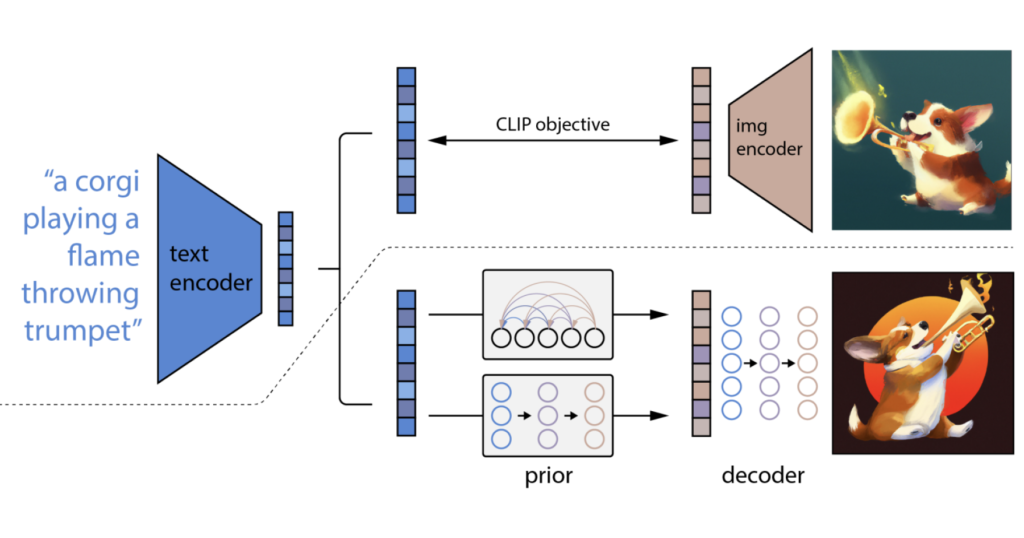

From DALL·E To Stable Diffusion How Do Text To Image Generation Models

Take a peek at how diffusion works for generating images. We explain exactly where the differences lie between DALL·E, DALL·E 2, Imagen, and Stable Diffusion.

Diffusion models, including GLIDE, DALL·E 2, Imagen, and Stable Diffusion, have spearheaded recent advances in AI-based image generation, taking the world of “AI art generation” by storm. Generating high-quality images from text descriptions is a challenging task. It requires a deep understanding of the underlying meaning of the text and the ability to generate an image consistent with...

Conditional Text Image Generation With Diffusion Models DeepAI

Stable Diffusion and DALL·E 3 are key tools in text-to-image AI generation. We'll compare these models to determine which one excels in producing accurate and detailed images from text prompts. This comparison will help identify the strengths and limitations of each model in various applications.

Once the text encoder is trained, it can be used to teach the Stable Diffusion model to understand new concepts. To do this, a small number of example images are selected that represent the new...

Comparing DALL·E 3 and Stable Diffusion: an analysis on real-life examples of two leading AI text-to-image models using Writingmate AI split screen.

Stable Diffusion is a text-to-image model that uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts. It frames image generation as a “diffusion” process: at runtime it starts with only noise and gradually refines the image until it is entirely free of noise, progressively approaching the provided text description.
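The "start from noise and gradually remove it" loop described above can be sketched in a few lines. This is a toy illustration only, not Stable Diffusion itself: the real model runs the loop in a learned latent space with a trained U-Net that predicts noise from the noisy latent and the CLIP text embedding. Here the hypothetical `oracle_noise` function stands in for that trained predictor by solving for the noise directly from a known target, and `TOY_TARGETS` is a made-up lookup that stands in for text conditioning. The update is a deterministic DDIM-style step over a standard linear beta schedule.

```python
import math
import random

# Hypothetical stand-in for text conditioning: each "prompt" maps to a tiny 4-pixel image.
TOY_TARGETS = {"bright left, dark right": [1.0, 1.0, -1.0, -1.0]}

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear schedule; index 0 is 1.0 (no noise)."""
    alpha_bars = [1.0]
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars

def oracle_noise(x_t, target, alpha_bar_t):
    """Assumed replacement for a trained noise predictor.

    Solves x_t = sqrt(a) * x0 + sqrt(1 - a) * eps for eps, using the known target as x0.
    """
    a = math.sqrt(alpha_bar_t)
    s = math.sqrt(1.0 - alpha_bar_t)
    return [(x - a * x0) / s for x, x0 in zip(x_t, target)]

def sample(target, num_steps=100, seed=0):
    """Run the reverse process from pure Gaussian noise; returns (final image, all steps)."""
    rng = random.Random(seed)
    alpha_bars = make_alpha_bars(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start with only noise
    trajectory = [x]
    for t in range(num_steps, 0, -1):
        a_t, a_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = oracle_noise(x, target, a_t)
        # Estimate the clean image implied by the current sample and predicted noise.
        x0_pred = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps)]
        # Deterministic DDIM-style step to the next, less noisy timestep.
        x = [math.sqrt(a_prev) * p + math.sqrt(1.0 - a_prev) * e for p, e in zip(x0_pred, eps)]
        trajectory.append(x)
    return x, trajectory

if __name__ == "__main__":
    image, steps = sample(TOY_TARGETS["bright left, dark right"])
    print([round(v, 3) for v in image])
```

Because the oracle knows the answer, the loop always lands on the target; in a real model the quality of the result depends entirely on how well the learned predictor estimates the noise at each step.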

Text Guided Image Generation With Diffusion Models Hands On

Stable Diffusion was developed by Stability AI. It is a leading open-source text-to-image generator that uses diffusion models to generate detailed and varied images from text descriptions. Stable Diffusion generates diverse visual outputs, including realistic photographs and abstract artwork.

Figure 2: Representative works on the text-to-image task over time. The GAN-based methods, autoregressive methods, and diffusion-based methods are marked in yellow, blue, and red, respectively. We abbreviate Stable Diffusion as SD for brevity in this figure. As diffusion-based models have achieved unprecedented success in image generation, this work mainly discusses the pioneering studies for text to...

AI Art Generator DALL·E 2 Vs Stable Diffusion Simplified

Github Pandluruyuvaraj Text To Image Generation Using Stable