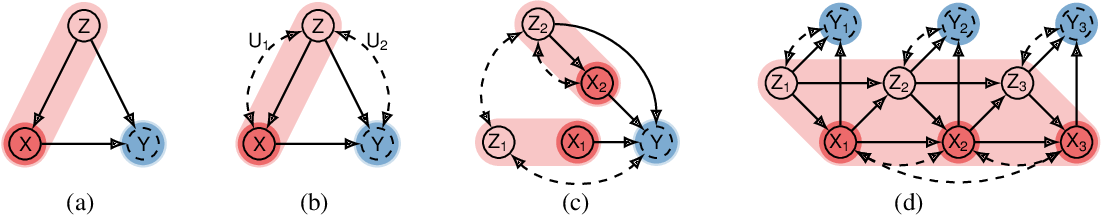

Figure 1, from "Causal Imitation Learning via Inverse Reinforcement Learning".

In-person poster presentation (poster accept): Causal Imitation Learning via Inverse Reinforcement Learning. Kangrui Ruan · Junzhe Zhang · Xuan Di · Elias Bareinboim. MH1-2-3-4 #108. Keywords: [causal inference] [graphical models] [probabilistic methods].

Abstract: One of the most common ways children learn when unfamiliar with the environment is by mimicking adults. Imitation learning concerns an imitator learning to behave in an unknown environment from an expert's demonstration; reward signals remain latent to the imitator. This paper studies imitation learning through causal lenses and extends the analysis and tools developed for behavior cloning (Zhang, Kumor, Bareinboim, 2020) to inverse reinforcement learning.

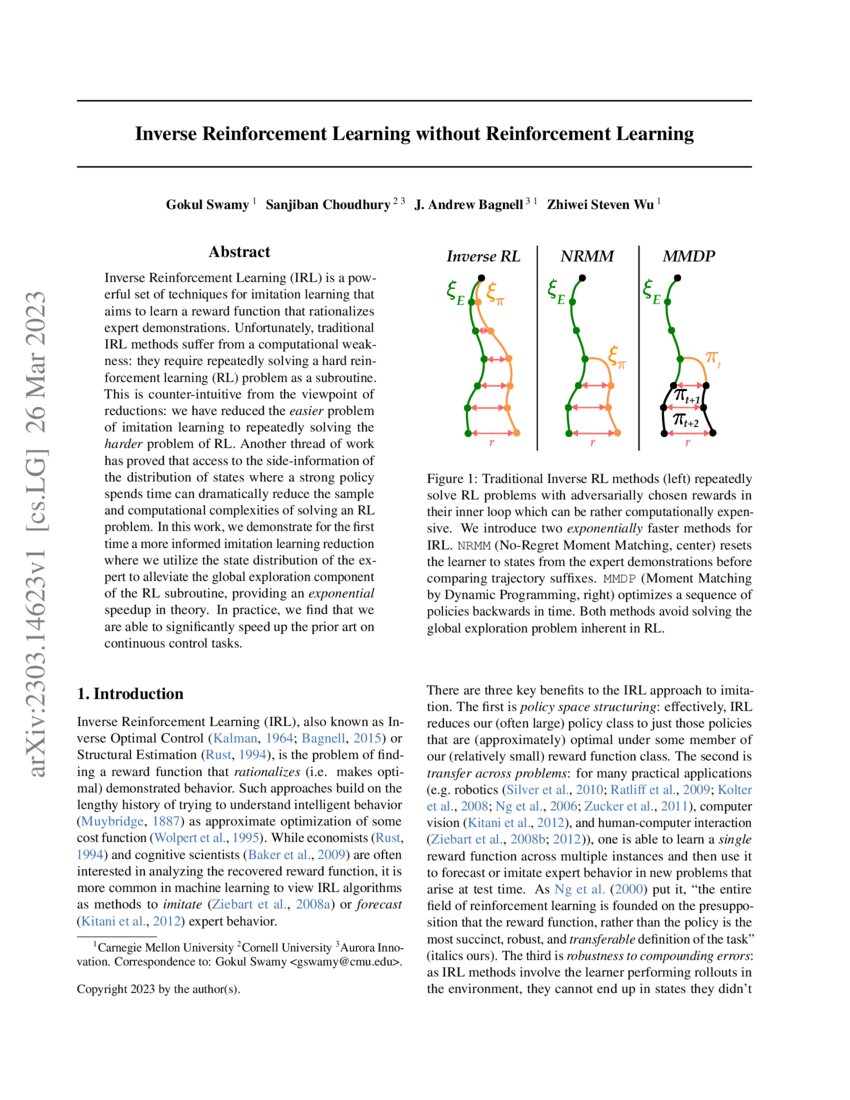

Inverse Reinforcement Learning Without Reinforcement Learning (DeepAI)

Bibliographic details on "Causal Imitation Learning via Inverse Reinforcement Learning". This paper studies imitation learning through causal lenses and extends the analysis and tools developed for behavior cloning (Zhang, Kumor, Bareinboim, 2020) to inverse reinforcement learning.

2023-01: Our paper "Causal Imitation Learning via Inverse Reinforcement Learning" was published at ICLR 2023. 2022-08: Our paper on decentralized traffic signal control was published in Transportation Research Part C.

Robust Imitation via Mirror Descent Inverse Reinforcement Learning (DeepAI)

Related titles: "Offline Reinforcement Learning via High-Fidelity Generative Behavior Modeling"; "Offline Reinforcement Learning with Differentiable Function Approximation is Provably Efficient".

Traditional imitation learning assumes that the expert's input observations match those available to the imitator. Tools from causal inference can account for unobserved confounders in the expert's demonstrations. The authors' goal is to make existing IRL methods robust against confounding bias through causal knowledge augmentation.
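The mismatch between what the expert observes and what the imitator observes can be made concrete with a toy simulation. The sketch below is not the paper's method or experimental setup. It is a hypothetical one-step environment, assumed here for illustration only: a hidden binary variable `u` drives both the expert's action and the reward. The function name `simulate_cloning` and all parameters are made up for this example. The reward is computed by the simulator purely for evaluation and is never shown to the imitator, in line with the latent-reward setting described above.

```python
import random


def simulate_cloning(n=20000, seed=0):
    """Toy model (an assumption, not from the paper): hidden u affects action and reward."""
    rng = random.Random(seed)

    # Expert phase: the expert sees u and picks the matching action.
    # Only the action x is recorded in the demonstrations; u is unobserved.
    demonstrations = []
    expert_total = 0
    for _ in range(n):
        u = rng.randint(0, 1)          # unobserved confounder
        x = u                          # expert policy depends on u
        expert_total += int(x == u)    # reward is 1 when the action matches u
        demonstrations.append(x)

    # Imitator phase: naive behavior cloning without access to u can only
    # reproduce the marginal action distribution P(X) seen in the data.
    p_action_1 = sum(demonstrations) / n
    imitator_total = 0
    for _ in range(n):
        u = rng.randint(0, 1)
        x = int(rng.random() < p_action_1)  # action chosen independently of u
        imitator_total += int(x == u)

    return {
        "expert_reward": expert_total / n,
        "imitator_reward": imitator_total / n,
    }


if __name__ == "__main__":
    print(simulate_cloning())
```

In this toy model the expert always earns the reward, while the cloned policy matches the expert's action frequencies yet earns reward only about half the time, because its actions are independent of `u`. That gap is the kind of confounding-induced failure that the causal approach is meant to address. How the paper handles it in general sequential settings is not reproduced here.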

Inverse Reinforcement Learning (Applied Artificial Intelligence Group)