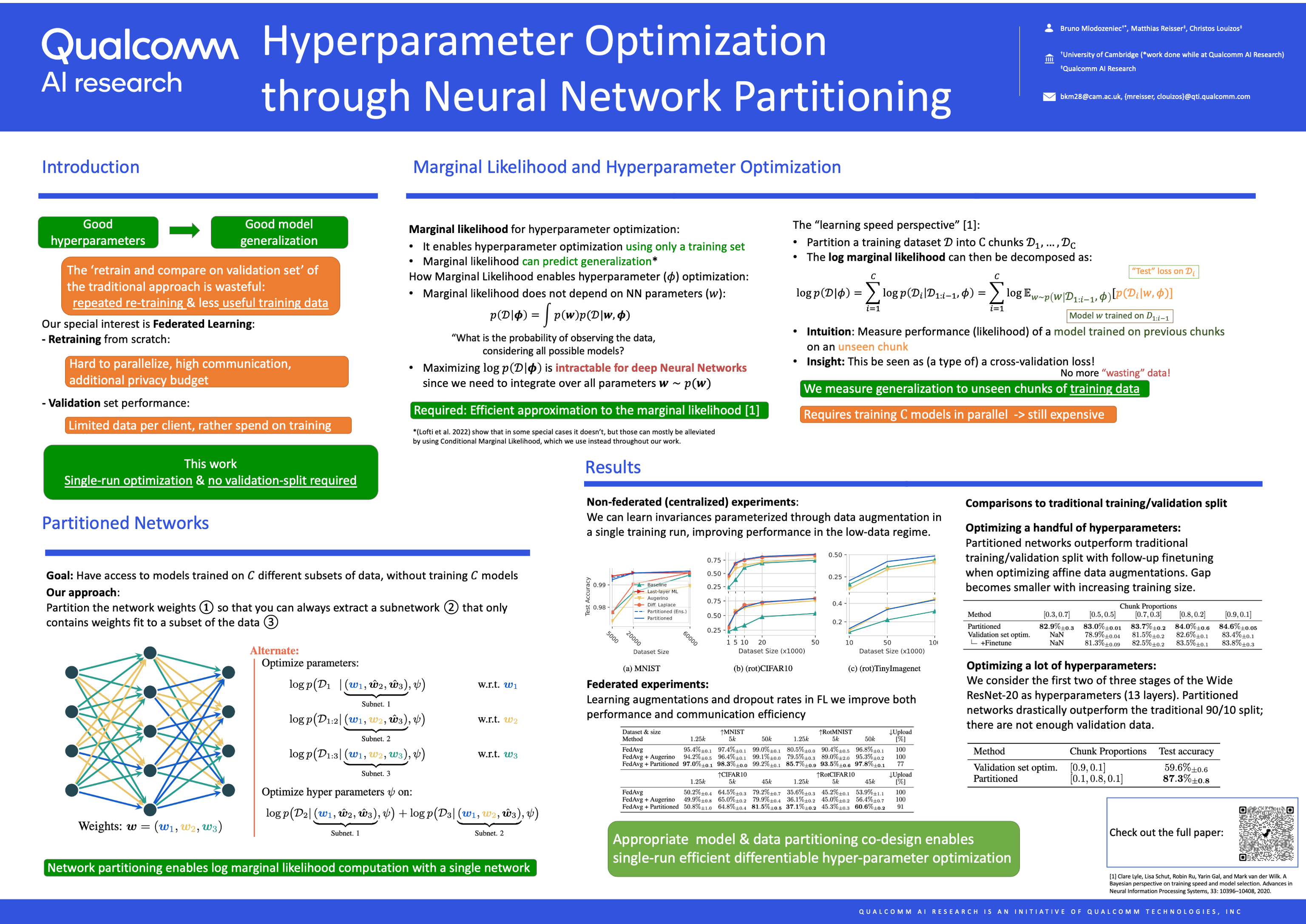

Hyperparameter Optimization through Neural Network Partitioning (DeepAI)

In this work, we propose a simple and efficient way for optimizing hyperparameters inspired by the marginal likelihood, an optimization objective that requires no validation data. Our method partitions the training data and a neural network model into $K$ data shards and parameter partitions, respectively.

Keywords: hyperparameter optimization, invariances, data augmentation, marginal likelihood, federated learning

TL;DR: We introduce partitioned networks and an out-of-training-sample loss for scalable optimization of hyperparameters.
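The partitioning idea described above can be illustrated on a toy problem. What follows is a minimal sketch under our own simplifying assumptions, not the authors' implementation. It uses a linear regression model whose weights are split by feature into $K$ partitions, and inactive partitions are reset to an assumed default value of 0. Training is sequential: partition $k$ is fit only on shard $k$ while earlier partitions stay frozen. A single L2 weight-decay coefficient plays the role of the hyperparameter. The out-of-training-sample loss scores shard $k$ with the subnetwork built from partitions $1, \dots, k-1$, which never saw that shard. All function names (`make_data`, `split`, `subnetwork`, `train_partitioned`, `out_of_training_sample_loss`) are hypothetical. A small grid search over the weight decay stands in for gradient-based hyperparameter updates.

```python
import random


def make_data(n, dim, seed=0):
    """Synthetic linear-regression data (illustrative only): y = x . w_true + noise."""
    rng = random.Random(seed)
    w_true = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    data = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        y = sum(xi * wi for xi, wi in zip(x, w_true)) + rng.gauss(0.0, 0.5)
        data.append((x, y))
    return data


def split(seq, k):
    """Split a list into k contiguous chunks whose sizes differ by at most one."""
    size, rem = divmod(len(seq), k)
    chunks, start = [], 0
    for i in range(k):
        end = start + size + (1 if i < rem else 0)
        chunks.append(seq[start:end])
        start = end
    return chunks


def predict(w, x):
    """Linear model output w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, shard):
    """Mean squared error of weights w on one data shard."""
    return sum((predict(w, x) - y) ** 2 for x, y in shard) / len(shard)


def subnetwork(w, groups, k):
    """Keep the first k parameter partitions of w; reset the rest to the assumed default 0."""
    active = {i for g in groups[:k] for i in g}
    return [wi if i in active else 0.0 for i, wi in enumerate(w)]


def train_partitioned(shards, groups, dim, weight_decay, lr=0.05, steps=200):
    """Fit partition k on shard k only, keeping partitions 0..k-1 frozen.

    Sequential training is a simplification chosen for this sketch."""
    w = [0.0] * dim
    for k, (shard, group) in enumerate(zip(shards, groups)):
        for _ in range(steps):
            sub = subnetwork(w, groups, k + 1)  # partitions 0..k active
            # Gradient of MSE + weight_decay * ||w_group||^2 w.r.t. partition k only
            grads = {i: 2.0 * weight_decay * w[i] for i in group}
            for x, y in shard:
                err = predict(sub, x) - y
                for i in group:
                    grads[i] += 2.0 * err * x[i] / len(shard)
            for i in group:
                w[i] -= lr * grads[i]
    return w


def out_of_training_sample_loss(w, shards, groups):
    """Sum over shards k of the loss of the first-k-partitions subnetwork on shard k.

    Those partitions were fit on shards 0..k-1 only, so shard k is unseen by them."""
    return sum(mse(subnetwork(w, groups, k), shard) for k, shard in enumerate(shards))


if __name__ == "__main__":
    K, DIM = 3, 6
    groups = split(list(range(DIM)), K)    # parameter partitions [[0, 1], [2, 3], [4, 5]]
    shards = split(make_data(90, DIM), K)  # three data shards of 30 examples each
    # Grid search over weight decay: a stand-in for gradient-based hyperparameter updates
    scores = {}
    for lam in (0.0, 0.01, 0.1, 1.0):
        w = train_partitioned(shards, groups, DIM, weight_decay=lam)
        scores[lam] = out_of_training_sample_loss(w, shards, groups)
    best = min(scores, key=scores.get)
    print("selected weight decay:", best)
```

Because every shard is scored only by partitions that were never trained on it, the objective uses all of the training data and needs no separate validation split. The first term always uses the all-default model.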

Hyperparameter Optimization through Neural Network Partitioning (PDF)

Abstract: Well-tuned hyperparameters are crucial for obtaining good generalization behavior in neural networks. They can enforce appropriate inductive biases, regularize the model and improve performance, especially in the presence of limited data. In this work, we propose a simple and efficient way for optimizing hyperparameters inspired by the marginal likelihood, an optimization objective that requires no validation data.

Another way of optimizing hyperparameters without a validation set is to take the canonical view of model selection (and hence hyperparameter optimization) through the Bayesian lens; the concept …

Bibliographic details on Hyperparameter Optimization through Neural Network Partitioning.
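A standard identity shows why a marginal-likelihood objective needs no validation data. The notation here is ours, not the source's. Write $\eta$ for the hyperparameters, split the training data into shards $\mathcal{D}_1, \dots, \mathcal{D}_K$, and assume the data are conditionally i.i.d. given the weights $w$. The chain rule of probability then gives

$$
\log p(\mathcal{D} \mid \eta) = \sum_{k=1}^{K} \log p(\mathcal{D}_k \mid \mathcal{D}_{1:k-1}, \eta),
\qquad
p(\mathcal{D}_k \mid \mathcal{D}_{1:k-1}, \eta) = \int p(\mathcal{D}_k \mid w, \eta)\, p(w \mid \mathcal{D}_{1:k-1}, \eta)\, \mathrm{d}w,
$$

where $\mathcal{D}_{1:0}$ is empty, so the first term is evaluated under the prior. Each term scores one shard with a model conditioned only on the earlier shards. As a result, every training point serves as held-out data for exactly one term.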

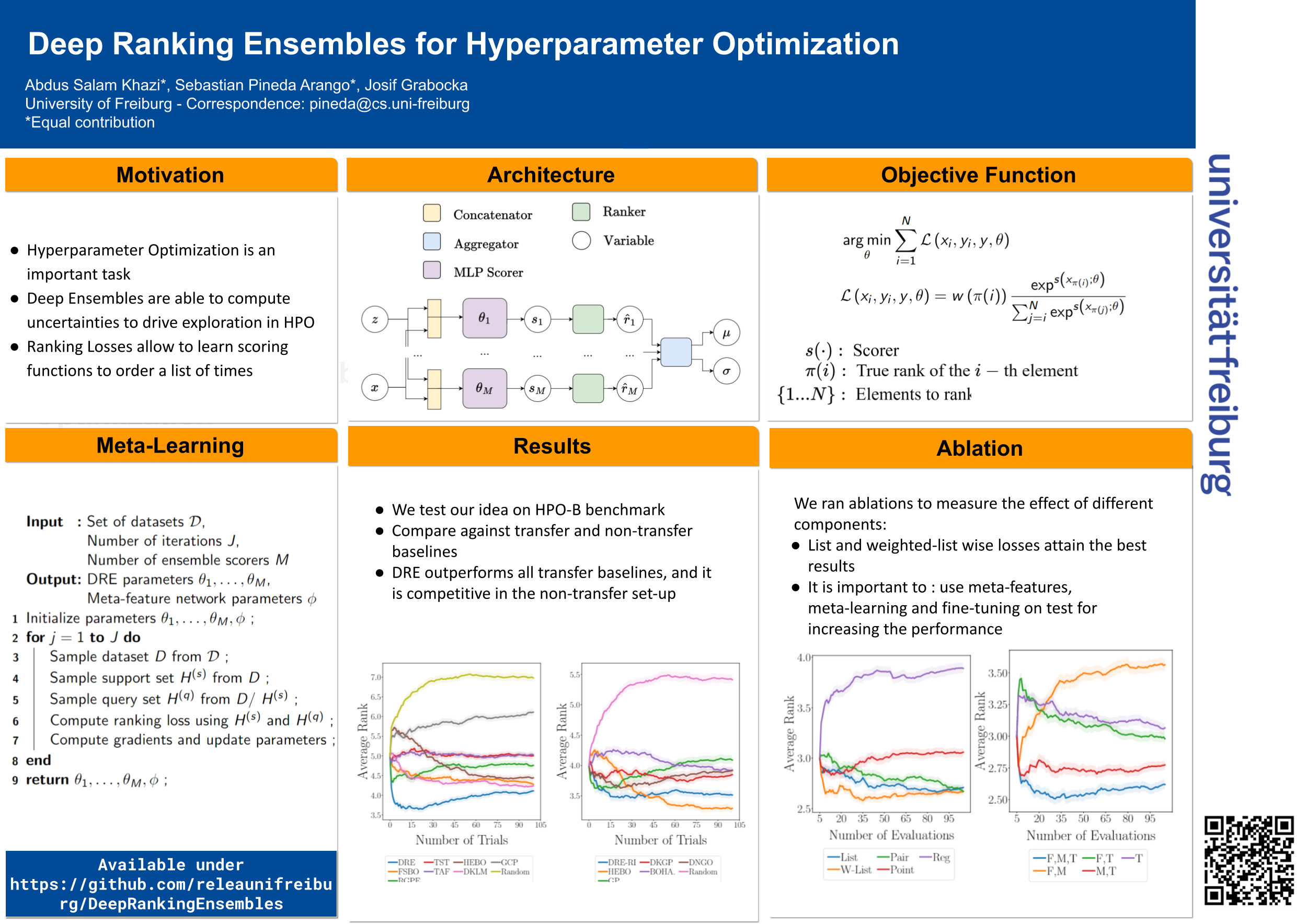

ICLR Poster: Deep Ranking Ensembles for Hyperparameter Optimization

TANGOS: Regularizing Tabular Neural Networks through Gradient Orthogonalization and Specialization

Targeted Hyperparameter Optimization with Lexicographic Preferences Over Multiple Objectives

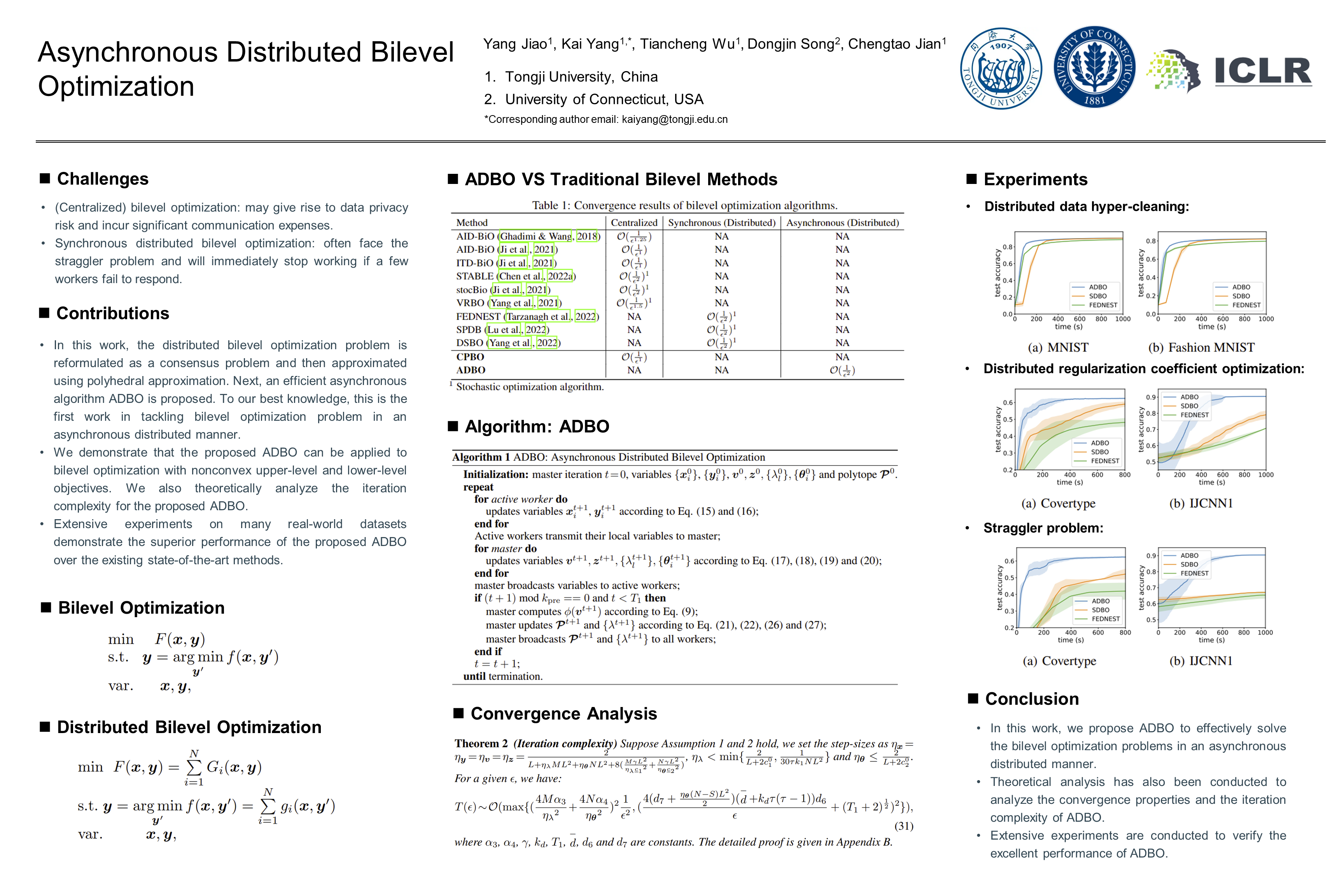

ICLR Poster: Asynchronous Distributed Bilevel Optimization

ICLR Poster: Hyperparameter Optimization through Neural Network Partitioning

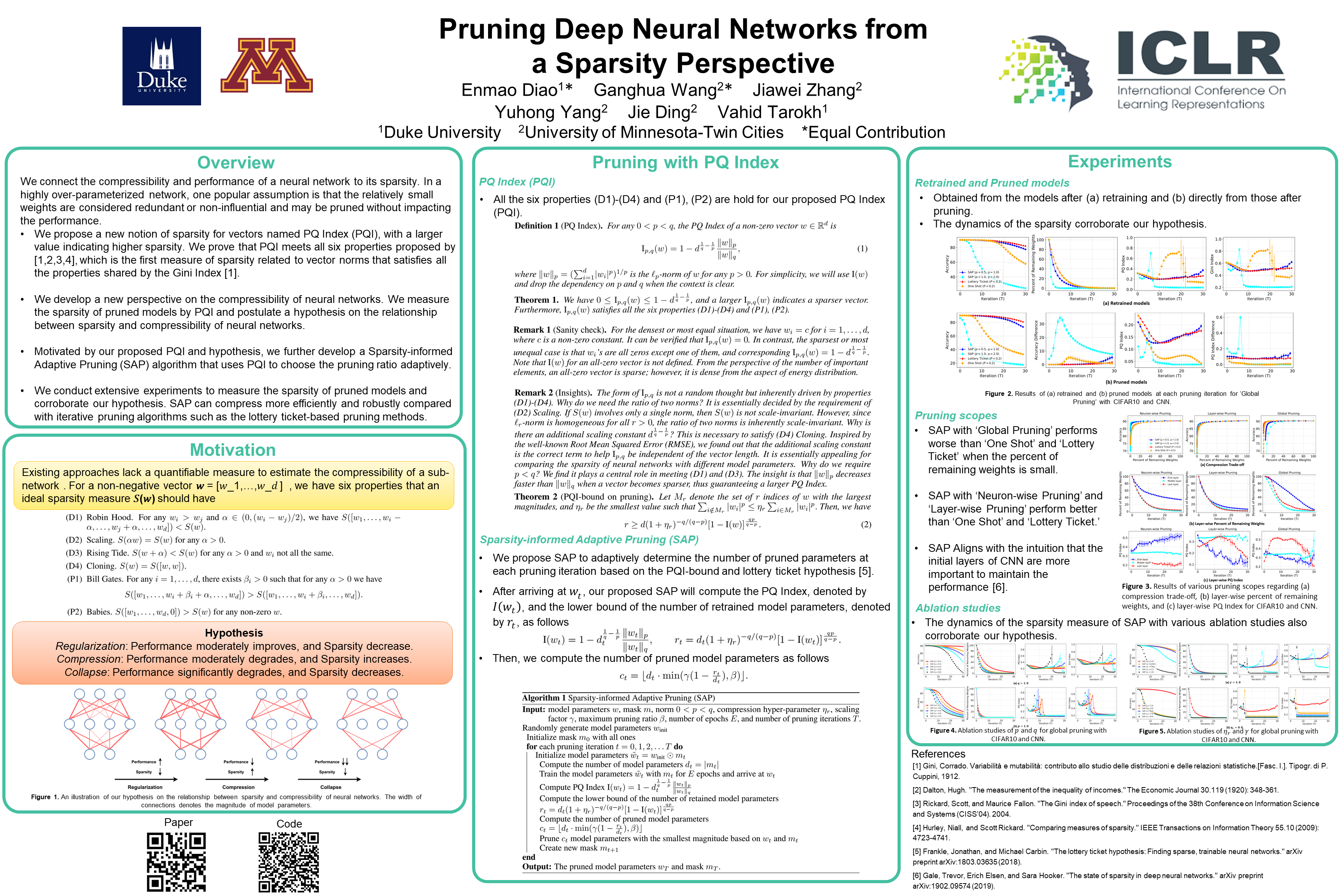

ICLR Poster: Pruning Deep Neural Networks from a Sparsity Perspective