ICLR Poster: Conditional Positional Encodings for Vision Transformers

Virtual presentation / poster accept: On the Performance of Temporal Difference Learning with Neural Networks. Haoxing Tian · Ioannis Paschalidis · Alex Olshevsky. Keywords: [Reinforcement Learning]. Published: 01 Feb 2023, Last Modified: 25 Feb 2023. ICLR 2023 Poster. Readers: Everyone. Abstract: Neural temporal difference (TD) learning is an approximate temporal difference method for policy evaluation that uses a neural network for function approximation. Analysis of neural TD learning has proven to be challenging.
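The abstract describes neural TD learning only in words. As a rough illustration, and not the authors' algorithm or their analysis setting, the sketch below runs semi-gradient TD(0) with a one-hidden-layer tanh network in plain Python. Everything concrete here is an assumption made for the example: the deterministic five-state chain with γ = 0.9, the one-hot state features, the network width, the step size, and the hypothetical names `ValueNet` and `neural_td0`. Each update moves the parameters along α·δ·∇V(s), where δ = r + γV(s') − V(s) is the TD error and the bootstrapped target is held fixed.

```python
import math
import random

# Hypothetical toy problem (not from the paper): a deterministic 5-state chain.
# From state s the agent always moves to s + 1; leaving state 4 ends the
# episode with reward 1, and every other transition gives reward 0.
N_STATES = 5
GAMMA = 0.9


def one_hot(s):
    """Encode a state index as a one-hot feature vector."""
    return [1.0 if i == s else 0.0 for i in range(N_STATES)]


class ValueNet:
    """Hypothetical one-hidden-layer network V(x) = w2 . tanh(W1 x + b1) + b2."""

    def __init__(self, n_in, n_hidden, rng):
        self.W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.W1, self.b1)]

    def value(self, x):
        h = self.hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def step(self, x, scale):
        """Move all parameters by scale * grad V(x), with backprop done by hand."""
        h = self.hidden(x)
        for j, hj in enumerate(h):
            # dV/d(pre-activation j) = w2[j] * (1 - tanh^2), using the old w2[j].
            g = self.w2[j] * (1.0 - hj * hj)
            self.w2[j] += scale * hj
            self.b1[j] += scale * g
            self.W1[j] = [w + scale * g * xi for w, xi in zip(self.W1[j], x)]
        self.b2 += scale


def neural_td0(episodes=3000, alpha=0.05, n_hidden=8, seed=0):
    """Semi-gradient TD(0): theta <- theta + alpha * delta * grad V(s)."""
    net = ValueNet(N_STATES, n_hidden, random.Random(seed))
    for _ in range(episodes):
        for s in range(N_STATES):
            done = s == N_STATES - 1
            r = 1.0 if done else 0.0
            # The terminal state has value 0, so there is no bootstrap term when done.
            v_next = 0.0 if done else net.value(one_hot(s + 1))
            delta = r + GAMMA * v_next - net.value(one_hot(s))
            # "Semi-gradient": the target r + gamma * V(s') is treated as a constant.
            net.step(one_hot(s), alpha * delta)
    return [net.value(one_hot(s)) for s in range(N_STATES)]


if __name__ == "__main__":
    estimates = neural_td0()
    truth = [GAMMA ** (N_STATES - 1 - s) for s in range(N_STATES)]
    for s, (v, t) in enumerate(zip(estimates, truth)):
        print(f"state {s}: estimate {v:.3f}, true {t:.3f}")
```

Because this toy chain is deterministic and one-hot inputs let the network represent any value function on five states, the estimates should approach the true values γ^(4−s). With stochastic transitions and a constant step size, one would instead expect the estimates to fluctuate around the target.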

ICLR Poster: An Adaptive Policy to Employ Sharpness-Aware Minimization

Bibliographic details on "On the Performance of Temporal Difference Learning with Neural Networks." Abstract: Temporal difference (TD) learning is a popular algorithm for policy evaluation in reinforcement learning, but vanilla TD can suffer substantially from inherent optimization variance. To overcome these limitations, we propose a novel self-supervised temporal difference metric learning approach that solves the Eikonal equation more accurately and enhances performance in solving complex and unseen planning tasks. Temporal difference (TD) learning represents a fascinating paradox: it is the prime example of a divergent algorithm that has not vanished after its instability was proven. On the contrary, TD continues to thrive in reinforcement learning (RL), suggesting that it provides significant compensatory benefits.
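The claim that TD's instability has been proven can be made concrete with a standard two-state illustration, the "w → 2w" example from Sutton and Barto's Reinforcement Learning: An Introduction. Two states have linear value estimates w and 2w (features 1 and 2), the transition from the first to the second has reward 0, and only that transition is ever updated, as can happen under off-policy sampling. Each TD(0) update then multiplies w by 1 + α(2γ − 1), so w grows without bound whenever γ > 0.5. The function name `w_to_2w_td` is hypothetical; this is a sketch of the textbook setting, not of any method in the papers listed here.

```python
def w_to_2w_td(w0=1.0, alpha=0.1, gamma=0.9, steps=100):
    """Apply TD(0) repeatedly to the single transition s1 -> s2 (reward 0),
    where the linear value estimates are V(s1) = 1 * w and V(s2) = 2 * w."""
    w = w0
    for _ in range(steps):
        delta = 0.0 + gamma * (2.0 * w) - 1.0 * w  # TD error on s1 -> s2
        w += alpha * delta * 1.0                   # feature of s1 is 1, so dV(s1)/dw = 1
    return w


if __name__ == "__main__":
    print(w_to_2w_td(gamma=0.9))  # gamma > 0.5: each update multiplies w by 1.08
    print(w_to_2w_td(gamma=0.4))  # gamma < 0.5: each update multiplies w by 0.98
```

The divergence comes from the update distribution, not from the step size alone: if the second state's own transitions were also updated as often as they occur on-policy, the same linear TD scheme would be stable.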

ICLR Poster: DAG Learning on the Permutahedron

In this paper, the authors proposed a new version of variational continual learning (VCL) that combines an n-step regularization loss with temporal difference learning. The n-step loss considers all posteriors and log-likelihoods from the previous n steps, and the distribution that minimizes the n-step loss can cover all n tasks. Poster in Workshop: World Models: Understanding, Modelling and Scaling. Temporal Difference Flows. Jesse Farebrother · Matteo Pirotta · Andrea Tirinzoni · Remi Munos · Alessandro Lazaric · Ahmed Touati. Keywords: [Temporal Difference Learning] [Successor Measure] [Flow Matching] [Reinforcement Learning] [Gamma Model] [Geometric Horizon Model].

ICLR Poster: Causally Aligned Curriculum Learning

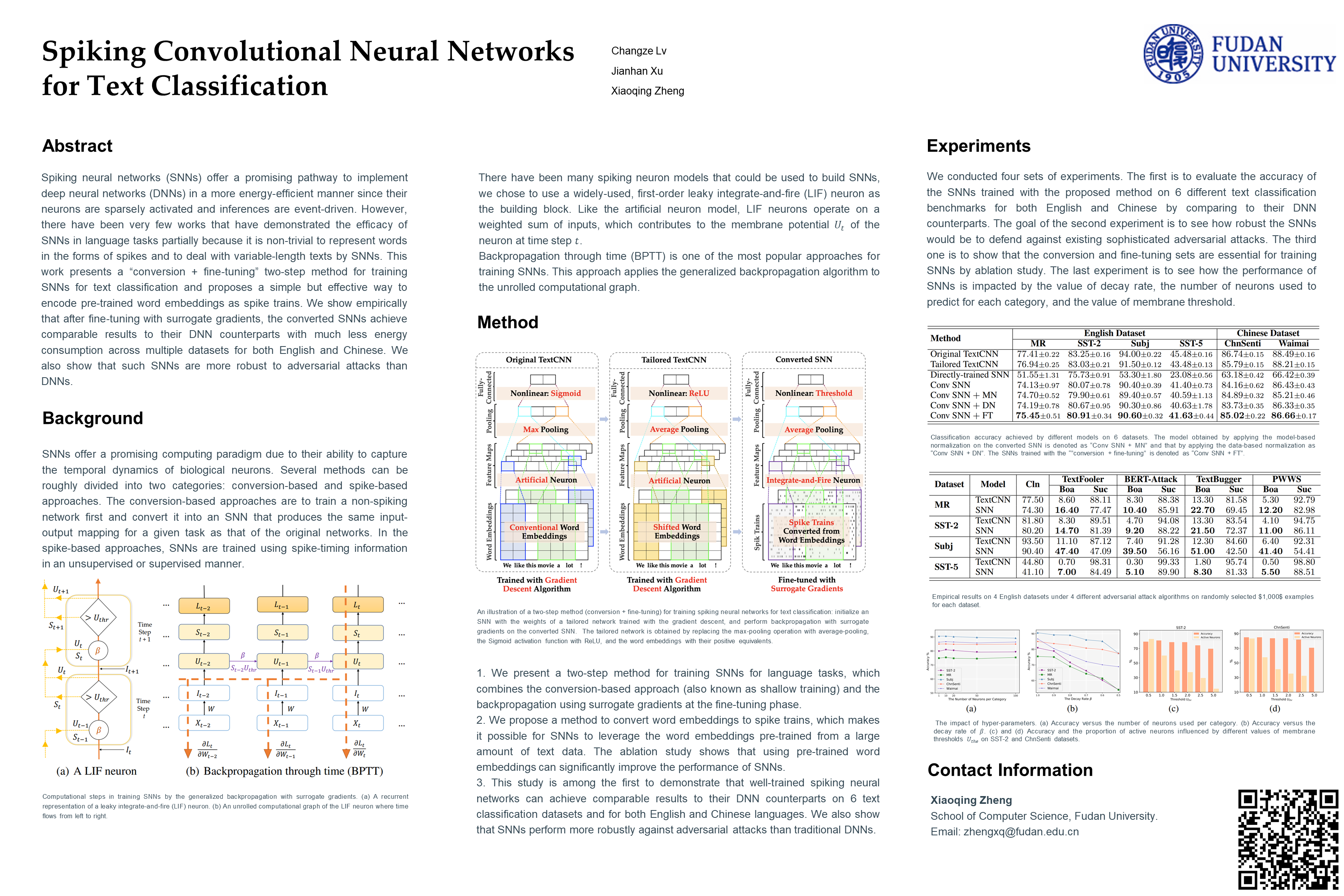

ICLR Poster: Spiking Convolutional Neural Networks for Text Classification