Stream Processing ksqlDB Documentation

That's correct: a new stream and Kafka topic (more than one topic, actually) will be created. What you're doing is a common scenario, and windowing is how you accomplish it. The only caveat is that there's no easy way to do "1000 events or 5 minutes, whichever comes first". You could use a 5-minute hopping window, but then you need to count the events as you aggregate (which …

@user213546 did you ever find a solution or any further information regarding this?
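To make the windowing suggestion concrete, here is a minimal sketch, assuming a hypothetical `events` topic that already exists, with JSON values keyed by a `device_id` string, and a ksqlDB release recent enough for the `KEY` column syntax. It counts events per key in a 5-minute hopping window. The "1000 events" half of the condition still has to be checked against `event_count` (here with a push query), since, as noted, there isn't an easy built-in "count or time, whichever comes first" trigger.

```sql
-- Hypothetical source stream over an existing 'events' topic (names are illustrative).
CREATE STREAM events (
    device_id STRING KEY,
    payload   STRING
) WITH (KAFKA_TOPIC='events', VALUE_FORMAT='JSON');

-- Count events per key in 5-minute windows that advance every minute.
-- Running this creates a new table plus its backing Kafka topic(s).
CREATE TABLE event_counts AS
  SELECT device_id,
         COUNT(*) AS event_count
  FROM events
  WINDOW HOPPING (SIZE 5 MINUTES, ADVANCE BY 1 MINUTE)
  GROUP BY device_id
  EMIT CHANGES;

-- Push query that surfaces windows which hit the 1000-event threshold before they end.
SELECT device_id, event_count
FROM event_counts
WHERE event_count >= 1000
EMIT CHANGES;
```

Because hopping windows overlap, each event is counted in several windows (five with these settings). If non-overlapping batches are closer to what you want, `WINDOW TUMBLING (SIZE 5 MINUTES)` avoids the double counting.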

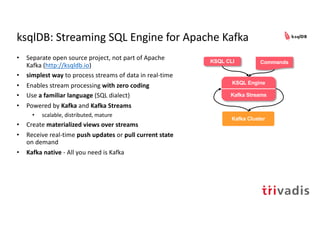

How to select and deserialize a header value in ksqlDB?

I have two topics: `users` (all the users' info) and `transactions` (all the transactions made by the users, including the sender and receiver IDs). The data in all of my topics is nested.

This is why a ksqlDB table seems like a good option: we can get the latest state of the data in the format we need and then update it based on events from the stream. Kafka stream A is essentially a CDC stream off a MySQL table that has been enriched with other data.

I'm running 3 KSQL servers on Google's k8s, connected to Kafka (via SSL) and ZooKeeper hosted on Google Cloud VMs. I can easily create 5 streams and they work perfectly, but everything beyond that gives …
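For the `users`/`transactions` case, the table-plus-stream approach might look roughly like this. The field names (`id`, `profile`, `sender`, `receiver_id`, `details`) and the JSON value format are assumptions, since the real schemas aren't shown. Nested data maps to `STRUCT` columns, read with the `->` operator, and the `PRIMARY KEY` / `KEY` column syntax assumes a recent ksqlDB release.

```sql
-- Table holding the latest state per user (hypothetical schema).
CREATE TABLE users (
    id      STRING PRIMARY KEY,
    profile STRUCT<name STRING, email STRING>
) WITH (KAFKA_TOPIC='users', VALUE_FORMAT='JSON');

-- Stream of transaction events (hypothetical schema).
CREATE STREAM transactions (
    tx_id       STRING KEY,
    sender      STRING,
    receiver_id STRING,
    details     STRUCT<amount DOUBLE, currency STRING>
) WITH (KAFKA_TOPIC='transactions', VALUE_FORMAT='JSON');

-- Enrich each transaction with the sender's current profile.
-- Joins require co-partitioned inputs, so both topics are assumed
-- to have the same number of partitions.
CREATE STREAM transactions_enriched AS
  SELECT t.sender,
         t.tx_id,
         t.receiver_id,
         u.profile->name  AS sender_name,
         t.details->amount AS amount
  FROM transactions t
  LEFT JOIN users u ON t.sender = u.id
  EMIT CHANGES;
```

The table keeps only the latest row per user, so each transaction is enriched with whatever the sender's profile is when that transaction is processed. Enriching `receiver_id` as well would need another join, for example a second query over `transactions_enriched`.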
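On the header question: a minimal sketch, assuming a ksqlDB release with header-column support (added in the 0.24 line, as far as I know) and a hypothetical `orders` topic whose records carry a `trace-id` header. A `BYTES HEADER('<key>')` column exposes one header's raw value, and `FROM_BYTES` decodes it; `'utf8'` assumes the producer wrote the header as a UTF-8 string.

```sql
-- Hypothetical stream; the trace_id column is populated from the 'trace-id' record header.
CREATE STREAM orders_with_headers (
    order_id STRING KEY,
    amount   DOUBLE,
    trace_id BYTES HEADER('trace-id')
) WITH (KAFKA_TOPIC='orders', VALUE_FORMAT='JSON');

-- Header values arrive as bytes; decode them to a readable string.
SELECT order_id,
       amount,
       FROM_BYTES(trace_id, 'utf8') AS trace_id_str
FROM orders_with_headers
EMIT CHANGES;
```

To see every header rather than a single key, declare a column of type `ARRAY<STRUCT<key STRING, value BYTES>>` marked `HEADERS` instead.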

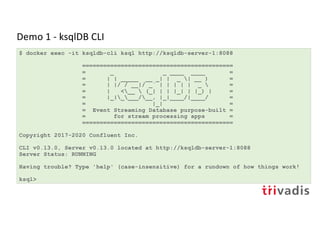

The answer is to use ksqlDB 0.10, which was announced literally a day after the question was asked.

In ksqlDB you can query from the beginning of a topic (`SET 'auto.offset.reset' = 'earliest';`) or from the end (`SET 'auto.offset.reset' = 'latest';`). You cannot currently (0.8.1, CP 5.5) seek to an arbitrary offset. What you can do is start from the earliest offset and then use `ROWTIME` in your predicate to identify the messages that match your requirement: `SELECT * FROM MY_SOURCE_STREAM WHERE ROWTIME …`
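A minimal sketch of the "start from earliest, then filter on `ROWTIME`" approach, using a hypothetical stream name `my_source_stream` and an illustrative cut-off time. `ROWTIME` is the record timestamp as epoch milliseconds, so the cut-off string is converted with `STRINGTOTIMESTAMP` before the comparison.

```sql
-- Read from the start of the topic rather than only new records.
SET 'auto.offset.reset' = 'earliest';

-- Drop everything stamped before the (illustrative) cut-off, interpreted as UTC.
SELECT *
FROM my_source_stream
WHERE ROWTIME >= STRINGTOTIMESTAMP('2020-05-01 00:00:00', 'yyyy-MM-dd HH:mm:ss', 'UTC')
EMIT CHANGES;
```

This still reads every earlier record and simply discards the ones that fail the predicate, so it emulates seeking rather than actually jumping to an offset.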
