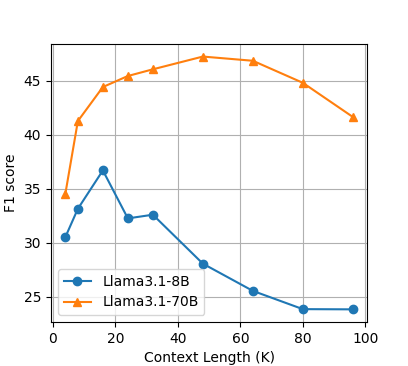

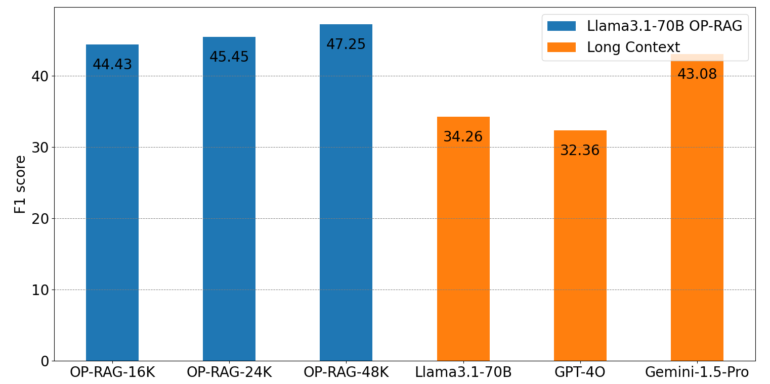

LLMs With Large Context Windows (Revelry)

A blog series in which software expert Chris Stansbury explores the limits of large language models (LLMs) with respect to memory overhead and context windows. Recent developments in LLMs show a trend toward longer context windows, with the input token count of the latest models reaching the millions. Because these models achieve near-perfect scores on widely adopted benchmarks like Needle in a Haystack (NIAH) [1], it is often assumed that their performance is uniform across long-context tasks.
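As a rough illustration of what a NIAH-style test checks, here is a minimal sketch that hides one "needle" sentence at a chosen relative depth inside filler text and scores a response by substring match. The function names, the filler text, and the scoring rule are illustrative assumptions, not the benchmark's reference implementation. The sketch makes it clear that the task is retrieving a single literal fact, which is one reason a near-perfect NIAH score need not carry over to other long-context tasks.

```python
import random


def build_haystack_prompt(needle: str, filler_sentences: list[str],
                          total_sentences: int, depth: float,
                          seed: int = 0) -> str:
    """Hide `needle` at relative `depth` (0.0 = start, 1.0 = end) in filler text.

    Hypothetical helper for illustration only.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in [0, 1]")
    rng = random.Random(seed)  # fixed seed so the haystack is reproducible
    haystack = [rng.choice(filler_sentences) for _ in range(total_sentences)]
    position = round(depth * total_sentences)
    haystack.insert(position, needle)
    return " ".join(haystack)


def score_response(response: str, expected_answer: str) -> bool:
    """Naive scoring (an assumption): pass if the answer appears verbatim."""
    return expected_answer.lower() in response.lower()


# Example: a long haystack with the needle a quarter of the way in.
needle = "The secret passphrase is blue-falcon-42."
filler = ["The weather was mild that week.", "Quarterly sales were flat."]
prompt = build_haystack_prompt(needle, filler, total_sentences=2_000, depth=0.25)
question = "What is the secret passphrase?"
# Send prompt + "\n\n" + question to the model under test, then check:
# passed = score_response(model_output, "blue-falcon-42")
```

Sweeping `depth` and `total_sentences` over a grid is the usual way such tests probe whether retrieval degrades with position or length.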

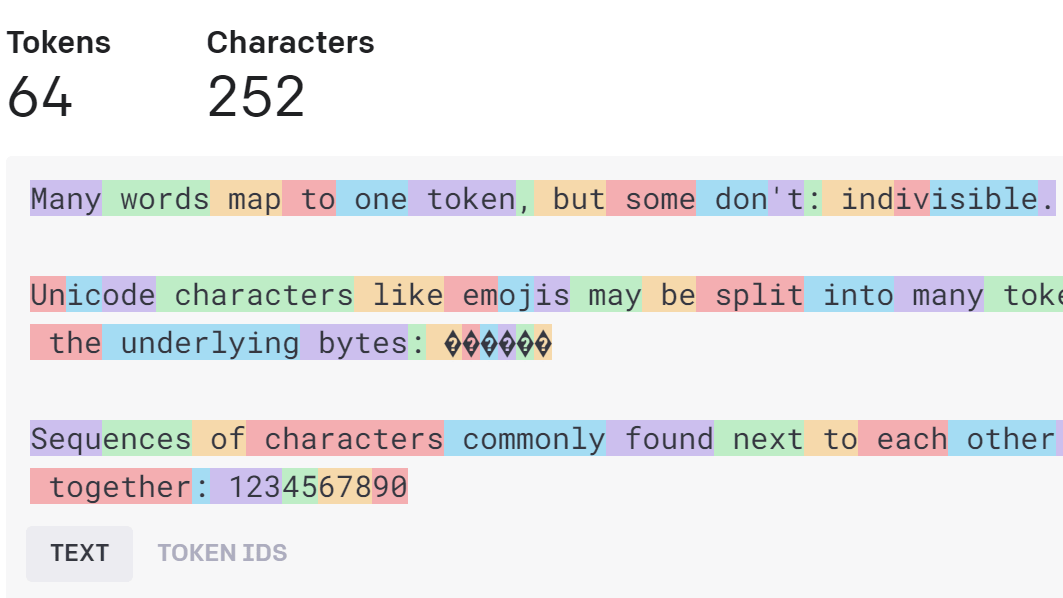

Study Questions Benefits of LLMs' Large Context Windows (Meta AI Labs)

Learn what an LLM context window is, why it matters, and how to work around its limitations. Discover strategies like truncation and…

How can you manage costs for large-context LLMs? Use adaptive context windows: instead of always using the maximum context window, some systems adapt the window size to the input length, reducing costs when smaller contexts suffice.

In large language models (LLMs), understanding tokens and context windows is essential to understanding how these models process and generate language. What are tokens? In the context of LLMs, a token is the basic unit of text that the model processes. A token can represent various components of language, including:

The post provides an introduction to LLMs, their context and tokens, and discusses their limitations in terms of memory overhead and context windows. It also explains how these models work, the concept of a context window, and the challenges posed by memory constraints.
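The two strategies mentioned above, adaptive context windows and truncation, can be sketched in a few lines. This is a minimal sketch under stated assumptions: the prompt is already tokenized into integer IDs, the window tiers are hypothetical (not any provider's actual sizes or pricing), and truncation keeps a fixed head (for example a system prompt) plus the most recent tokens.

```python
def pick_context_window(input_tokens: int, reserved_output: int,
                        tiers: tuple[int, ...] = (4_096, 16_384, 131_072)) -> int:
    """Adaptive window: return the smallest (hypothetical) tier that fits
    the prompt plus a budget reserved for the model's output."""
    needed = input_tokens + reserved_output
    for tier in sorted(tiers):
        if needed <= tier:
            return tier
    raise ValueError(f"{needed} tokens exceed the largest tier ({max(tiers)})")


def truncate_to_window(tokens: list[int], window: int, reserved_output: int,
                       keep_head: int = 0) -> list[int]:
    """Truncation: drop tokens from the middle so the prompt fits
    `window - reserved_output`.

    The first `keep_head` tokens are always kept; the rest of the budget
    is filled with the most recent tokens.
    """
    budget = window - reserved_output
    if budget <= 0:
        raise ValueError("reserved_output leaves no room for the prompt")
    if len(tokens) <= budget:
        return tokens
    head = tokens[:min(keep_head, budget)]
    tail_len = budget - len(head)
    # Guard against tokens[-0:], which would return the whole list.
    tail = tokens[-tail_len:] if tail_len > 0 else []
    return head + tail


# Usage: pick the cheapest window that fits, fall back to truncation otherwise.
prompt_ids = list(range(20_000))  # stand-in for real token IDs
window = pick_context_window(len(prompt_ids), reserved_output=1_024)  # 131_072

long_ids = list(range(200_000))
try:
    window = pick_context_window(len(long_ids), reserved_output=1_024)
except ValueError:
    window = 131_072
    long_ids = truncate_to_window(long_ids, window, reserved_output=1_024,
                                  keep_head=500)
```

Keeping the head and the tail is only one truncation policy; summarizing or retrieving the dropped middle are common alternatives when the discarded text may still matter.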

Part 1 of 2 in a blog series exploring the limits of LLMs with respect to memory overhead and context windows.

Forgetful AI? Not anymore! Dive into the open-source LLMs with the longest context lengths, which are changing how we interact with AI. Discover models that remember more, unlock powerful applications, and explore the future of AI communication.
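One way to make "memory overhead" concrete is the key-value (KV) cache that a transformer decoder keeps during generation: for every token in the context, each layer stores one key and one value vector per KV head. A common back-of-the-envelope estimate is 2 × layers × KV heads × head dimension × bytes per element × sequence length × batch size. The sketch below assumes standard attention with a full KV cache and ignores model weights and activations; the model configuration is hypothetical.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2,
                   batch_size: int = 1) -> int:
    """Estimate KV-cache size in bytes (assumes a full, uncompressed cache)."""
    # Factor of 2: one key tensor and one value tensor per layer.
    return (2 * num_layers * num_kv_heads * head_dim
            * bytes_per_elem * seq_len * batch_size)


# Hypothetical decoder config with an fp16 cache (2 bytes per element).
cfg = dict(num_layers=32, num_kv_heads=8, head_dim=128, bytes_per_elem=2)

for n in (8_192, 128_000, 1_000_000):
    gib = kv_cache_bytes(n, **cfg) / 2**30
    print(f"{n:>9,} tokens -> {gib:8.2f} GiB")
```

With these assumed numbers each token costs 128 KiB of cache, so 8,192 tokens need 1 GiB and a 1,000,000-token context needs about 122 GiB for the cache alone, which grows linearly with context length.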


Understanding LLM Context Windows