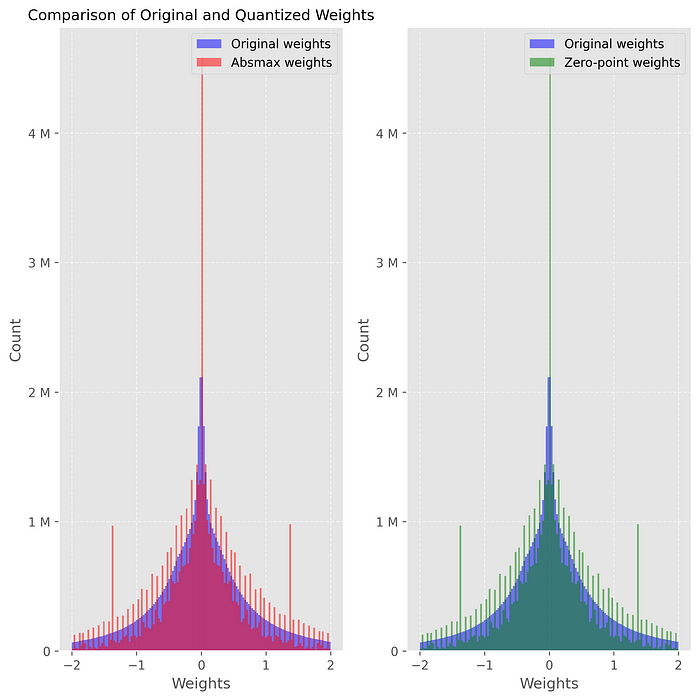

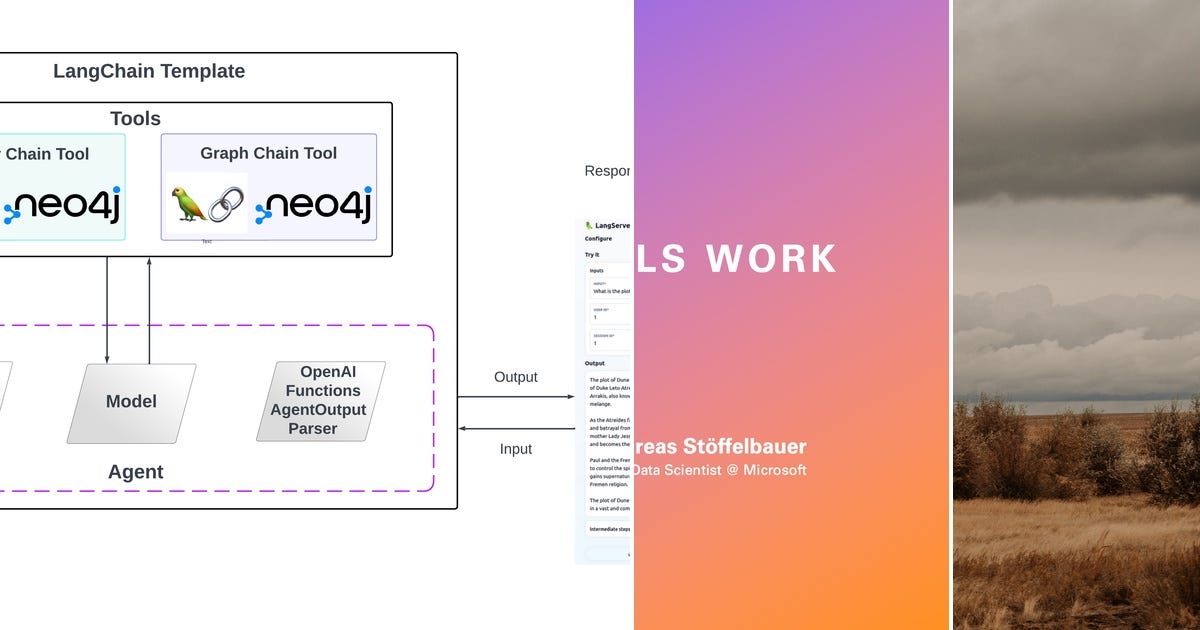

Local LLMs: Lightweight LLM Using Quantization (Reinventedweb)

What is quantization? Quantization compresses model weights into smaller bit representations, such as 8-bit integers, to reduce memory usage without sacrificing too much performance. Why care about it? It is what makes it possible to run large models on hardware with limited memory capacity.

LLMs on your laptop: if your desktop or laptop does not have a GPU installed, one way to get faster LLM inference is to use llama.cpp. It was originally written so that Facebook's LLaMA could be run on laptops with 4-bit quantization.
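To make the idea of compressing weights into 8-bit integers concrete, here is a minimal sketch of symmetric "absmax" quantization in plain Python. It is an illustration under stated assumptions, not the scheme llama.cpp or any particular library uses: the function names and the sample weights are hypothetical, and real implementations typically quantize in small blocks with one scale per block.

```python
def quantize_absmax_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale.

    Assumption: a single scale for the whole list (real systems usually
    keep one scale per small block of weights).
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_absmax_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is stored as a small integer plus one shared floating-point scale, which is where the memory saving comes from; the price is the rounding error measured by `max_error`.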

LLM Model Quantization: An Overview

However, advancements in AI have led to the development of smaller LLMs optimized for local use. If you're looking for the smallest LLM to run locally, this guide explores lightweight models that deliver efficient performance without requiring excessive hardware. Before we explore further how to run models, let's take a closer look at quantization, a key technique that makes local LLM execution possible on standard hardware.

Contents: Local LLMs in practice; Introduction; Choosing your model (task suitability, performance & cost, licensing, community support, customization); Tools for local LLM deployment: serving models (llama.cpp, llamafile, Ollama, comparison) and UI (LM Studio, Jan, Open WebUI, comparison); Case study: the effect of quantization on LLM performance (prompts, dataset, quantization, benchmarking, results, takeaways); Conclusion.

Recommended quantization: based on your hardware, we recommend using INT4 quantization for LLMs. This provides a good balance between accuracy and memory usage.
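The memory side of that trade-off can be estimated with simple arithmetic: bytes for the weights are roughly the parameter count times bits per weight, divided by 8. The sketch below is a rough back-of-the-envelope calculation; the 7-billion-parameter model size is a hypothetical example, and the estimate ignores activations, the KV cache, and the small overhead of storing quantization scales.

```python
def weight_memory_gib(n_params, bits_per_weight):
    """Approximate memory needed for the weights alone, in GiB."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 1024**3


# Hypothetical 7-billion-parameter model at three precisions.
n_params = 7e9
estimates = {
    name: weight_memory_gib(n_params, bits)
    for name, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]
}

for name, gib in estimates.items():
    print(f"{name}: {gib:.1f} GiB")
# fp16: 13.0 GiB
# int8: 6.5 GiB
# int4: 3.3 GiB
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is why INT4 is a common choice for machines with limited RAM.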

Ultimate Guide to LLM Quantization for Faster, Leaner AI Models

Hey there! I am sure you have probably heard of the #Mistral and #Llama LLMs by now. They are really good, competing with the likes of ChatGPT (GPT-4). But have you ever…

Local LLMs: lightweight LLM using quantization. Run big and heavy LLMs on your lower-end systems with quantization. Tags: Python, LLM.

LLM Quantization Review

Naive Quantization Methods for LLMs: A Hands-On

List: Quantization on LLMs, Curated by Majid Shaalan (Medium)

A Guide to Quantization in LLMs (Symbl.ai)