GPU vs CPU Unleashing Computing Power

In this post we present a solution that allows organisations with existing CUDA projects to assess the performance loss (or gain) of transitioning from GPU to modern CPU systems. Using real-life CUDA examples, we demonstrate how existing GPU-only code can be adapted so that the same code runs on CPU or GPU.

March 2025. Speeding up QuantLib with MatLogica's AADC library, by Maksim Kozyarchuk. In this blog post, Maksim explores how MatLogica's AADC supercharges QuantLib, dramatically accelerating pricing and risk calculations without requiring a rewrite. By leveraging cutting-edge compiler techniques and automatic differentiation, this integration unlocks unprecedented performance gains for ...

CPU vs GPU for Machine Learning Pure Storage Blog

Summary: this is a blog post I made to help new PC builders understand the roles and importance of the CPU and GPU, and how they work together for gaming performance. Sharing the full text here because, as a long-time redditor on discussion-heavy subs, I feel weird just sharing links.

Intro: in the world of computer hardware, two components often take center stage when it comes to gaming.

AADC utilizes native CPU vectorization and multi-threading, delivering performance comparable to a GPU. This results in faster computation of the model and of its first- and higher-order derivatives. The approach is particularly useful for models with many parameters that require frequent gradient updates during training.

However, in finance, division and square root calculations are common! So, a GPU requires >26x more clock cycles than a CPU for a division, and almost 11x more for a square root calculation.

Whilst MatLogica's solution is mainly tuned for finance applications, it is notable that these code generation kernels have a very broad range of potential applications across machine learning. For tasks like training deep neural networks and optimising complex algorithms, the GPU-like performance of these kernels is a formidable enabler.
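To make the idea of computing a model together with its first-order derivatives more concrete, here is a minimal sketch of reverse-mode (adjoint) automatic differentiation in plain Python. It is not AADC's API and says nothing about how AADC is implemented; `Var`, `sqrt`, `backward`, `gradient` and the toy function `f` are hypothetical names chosen for illustration. The toy function deliberately uses a division and a square root, the operations the text singles out as common in finance.

```python
import math


class Var:
    """Scalar that records how it was computed (hypothetical helper, not AADC)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuple of (parent Var, local partial derivative)
        self.grad = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    __rmul__ = __mul__

    def __truediv__(self, other):
        other = _lift(other)
        q = self.value / other.value
        # d(a/b)/da = 1/b, d(a/b)/db = -(a/b)/b
        return Var(q, ((self, 1.0 / other.value), (other, -q / other.value)))


def _lift(x):
    """Wrap plain numbers as constants so they can take part in the graph."""
    return x if isinstance(x, Var) else Var(float(x))


def sqrt(x):
    r = math.sqrt(x.value)
    return Var(r, ((x, 0.5 / r),))  # d(sqrt(x))/dx = 1 / (2 * sqrt(x))


def backward(output):
    """Accumulate d(output)/d(node) into node.grad for every node in the graph."""
    order, seen = [], set()

    def visit(node):
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)  # post-order: inputs come before their consumers

    visit(output)
    output.grad = 1.0
    for node in reversed(order):  # consumers are processed before their inputs
        for parent, local in node.parents:
            parent.grad += local * node.grad


def gradient(func, *values):
    """Return func's value and its partial derivatives at the given point."""
    inputs = [Var(v) for v in values]
    output = func(*inputs)
    backward(output)
    return output.value, [v.grad for v in inputs]


def f(x, y):
    """Toy model (an assumption for illustration): x * y / sqrt(x + y)."""
    return x * y / sqrt(x + y)


value, grads = gradient(f, 3.0, 1.0)
print(value, grads)  # 1.5 [0.3125, 1.3125]
```

One backward sweep yields the derivative with respect to every input at once, which is why the adjoint approach suits models with many parameters. A production tool would typically record or compile the computation once and replay it many times rather than rebuild a Python object graph on every evaluation.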

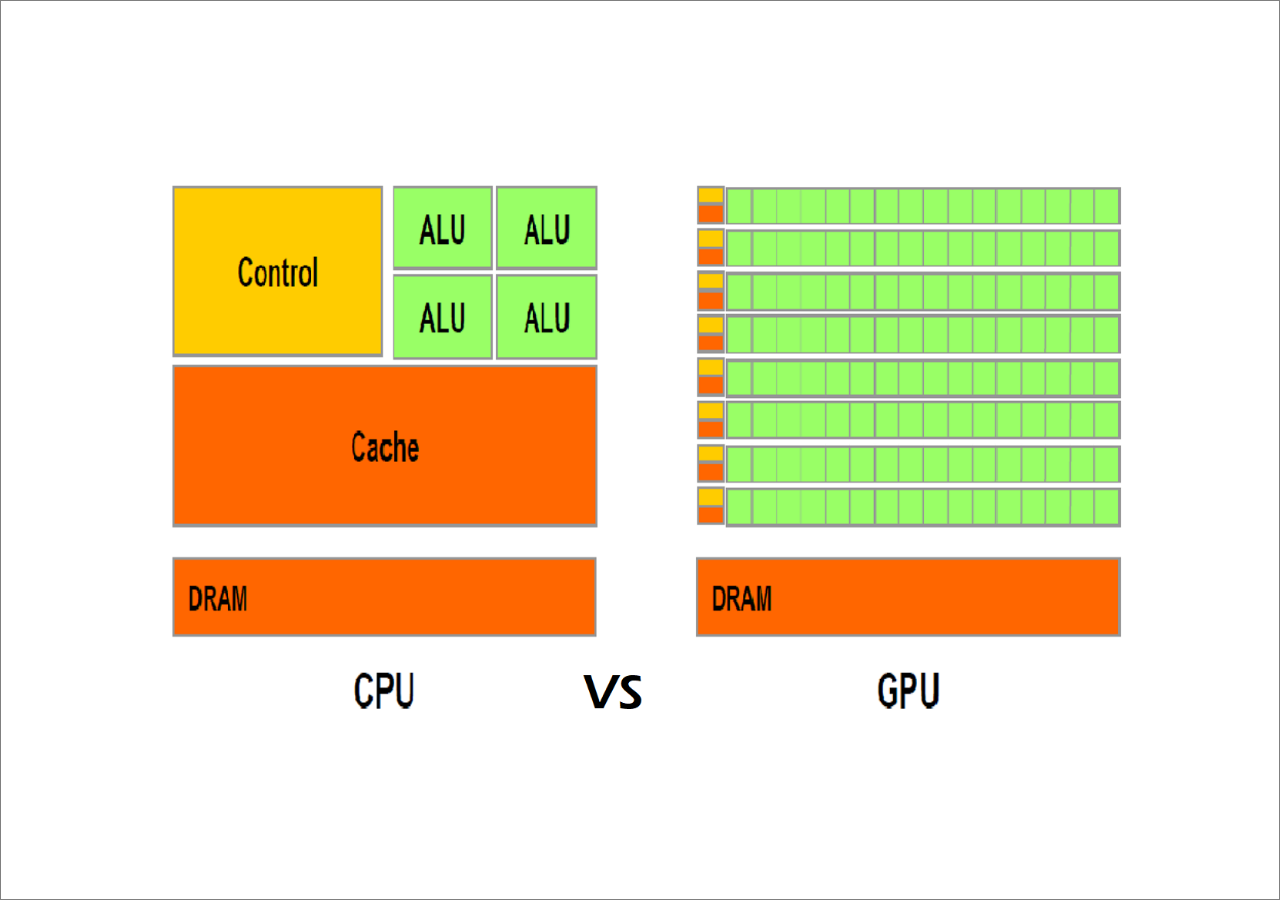

Issues Rbga CPU vs GPU Matrix Operation GitHub

CPU (central processing unit). What is a GPU? The graphics processing unit (GPU) is designed for parallel processing and uses dedicated memory known as VRAM (video RAM). GPUs are designed to tackle thousands of operations at once for tasks like rendering images, 3D rendering, processing video, and running machine learning models.

Our library is cross-platform for supported processor architectures. We are also developing support for graphics card computing (GPU), which will allow customers to use graphics cards without needing to resort to low-level interfaces such as CUDA or oneAPI.

CPU vs GPU What Is the Difference IBE Electronics

CPU and GPU Differences and Use Cases Stackscale

CPU vs GPU in Machine Learning Peerdh

Comparison of CPU vs GPU Architecture Download Scientific Diagram