Naive Quantization Methods for LLMs: A Hands-On

By Shrinivasan Sankar, Mar 15, 2024

LLMs today are quite large, and their size, in terms of both memory and computation, keeps increasing. At the same time, demand for running LLMs on small devices is growing just as fast.

Large language models (LLMs) require substantial compute, and thus energy, at inference time. While quantizing weights and activations is effective at improving efficiency, naive quantization of LLMs can significantly degrade performance because of large-magnitude outliers. This paper describes FPTQuant, which introduces four novel, lightweight, and expressive function-preserving transforms (FPTs).

Here’s an overview of the key quantization methods used in LLMs:

1. Post-training quantization (PTQ)
Definition and application: PTQ is applied once the model has been fully trained. It converts model weights, and possibly activations, from high-precision floating-point numbers to lower-precision representations such as 16-bit or 8-bit integers.
Impact on model quality: PTQ can improve…

The bitsandbytes library has emerged as a prominent tool that simplifies the implementation of advanced quantization techniques for PyTorch models, particularly LLMs. This guide provides a walkthrough of how to use bitsandbytes for quantizing LLMs, covering both 8-bit and 4-bit methods.

A guide on how to perform INT8 and INT4 quantization on an LLM (the Intel Neural Chat 7B model) with the weight-only quantization (WOQ) technique.

Two common naive quantization techniques implemented from scratch in PyTorch, the outlier problem that breaks them, and how bitsandbytes’ LLM.int8() solves it with a mixed-precision approach.
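The outlier problem and the mixed-precision idea mentioned above can be illustrated with a small sketch. This is not the bitsandbytes implementation: it is plain Python rather than PyTorch, the function names `int8_matvec` and `mixed_precision_matvec` are hypothetical, and the threshold and example values are assumptions chosen for illustration. A single large activation stretches the absmax scale, so the small activations lose most of their resolution. Keeping the outlier dimension in floating point and quantizing only the rest recovers most of the accuracy.

```python
def absmax_scale(values, qmax=127):
    """Scale factor that maps the largest absolute value onto qmax."""
    max_abs = max((abs(v) for v in values), default=0.0)
    return qmax / max_abs if max_abs > 0 else 1.0


def int8_matvec(x, W):
    """Naive path: quantize the whole activation vector x (length k) and
    each column of W (k rows, n columns) to 8-bit integers, accumulate in
    integers, then dequantize the result."""
    k, n = len(W), len(W[0])
    sx = absmax_scale(x)
    xq = [round(v * sx) for v in x]
    out = []
    for j in range(n):
        col = [W[i][j] for i in range(k)]
        sw = absmax_scale(col)
        wq = [round(w * sw) for w in col]
        acc = sum(a * b for a, b in zip(xq, wq))  # integer accumulation
        out.append(acc / (sx * sw))               # back to floating point
    return out


def mixed_precision_matvec(x, W, threshold=6.0):
    """Mixed-precision path (illustrative): dimensions of x whose magnitude
    exceeds the threshold stay in floating point, the rest go through int8."""
    outlier = [i for i, v in enumerate(x) if abs(v) > threshold]
    regular = [i for i, v in enumerate(x) if abs(v) <= threshold]
    out = [0.0] * len(W[0])
    if regular:
        low = int8_matvec([x[i] for i in regular], [W[i] for i in regular])
        out = [o + l for o, l in zip(out, low)]
    for j in range(len(out)):
        out[j] += sum(x[i] * W[i][j] for i in outlier)  # float part
    return out


# Made-up activations with one large-magnitude outlier (40.0).
x = [0.5, -1.2, 0.8, 40.0, 0.3]
W = [[0.2, -0.5], [0.7, 0.1], [-0.3, 0.9], [0.05, -0.02], [0.6, 0.4]]

exact = [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(2)]
naive = int8_matvec(x, W)
mixed = mixed_precision_matvec(x, W)

naive_err = max(abs(a - b) for a, b in zip(naive, exact))
mixed_err = max(abs(a - b) for a, b in zip(mixed, exact))
print("exact:", exact)
print("naive int8:", naive, "max error:", naive_err)
print("mixed precision:", mixed, "max error:", mixed_err)
```

On this toy input the mixed-precision result lands much closer to the exact product than the fully quantized one, at the cost of handling one dimension in floating point.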

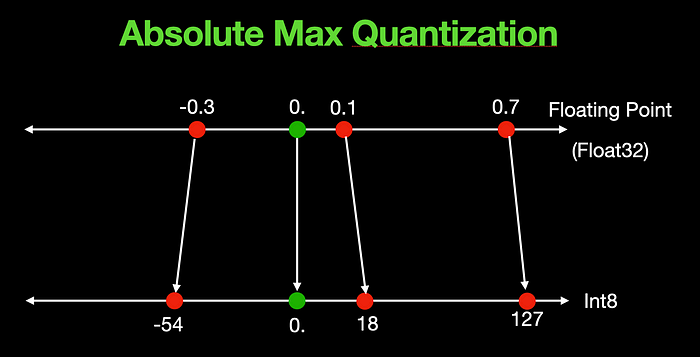

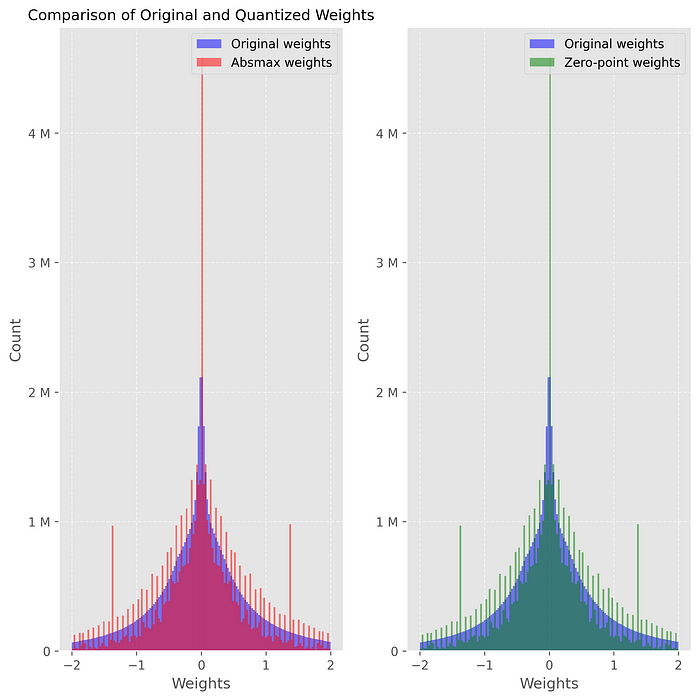

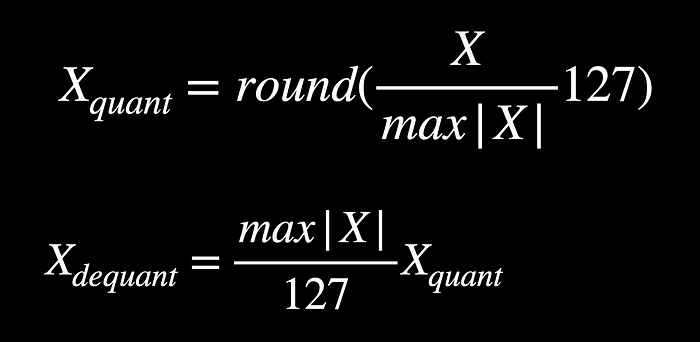

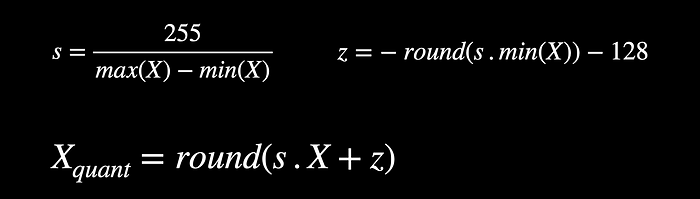

Summary: Large language models (LLMs) are powerful, but their size can lead to slow inference and high memory consumption, which hinders real-world deployment. Quantization, a technique that reduces the precision of model weights, offers a powerful solution. This post will explore how to use quantization techniques like bitsandbytes, AutoGPTQ, and AutoRound to dramatically improve LLM…

One of my previous videos introduced the fundamentals of quantization. This video is about hands-on quantization of a small LLM with the absolute max and zero-point quantization methods.
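As a rough illustration of the two methods named above, here is a minimal sketch of absolute max (symmetric) and zero-point (asymmetric) quantization to 8 bits. It is written in dependency-free Python rather than PyTorch, the function names are hypothetical, and the weight values are made up; it is not the code from the video.

```python
def absmax_quantize(values, bits=8):
    """Symmetric quantization: scale so the largest |value| maps to qmax."""
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = qmax / max_abs if max_abs > 0 else 1.0
    return [round(v * scale) for v in values], scale


def absmax_dequantize(quantized, scale):
    return [q / scale for q in quantized]


def zeropoint_quantize(values, bits=8):
    """Asymmetric quantization: map [min, max] onto the full integer range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1   # -128, 127
    lo, hi = min(values), max(values)
    scale = (qmax - qmin) / (hi - lo) if hi > lo else 1.0
    zero_point = round(qmin - lo * scale)           # integer offset
    quantized = [
        min(qmax, max(qmin, round(v * scale + zero_point))) for v in values
    ]
    return quantized, scale, zero_point


def zeropoint_dequantize(quantized, scale, zero_point):
    return [(q - zero_point) / scale for q in quantized]


# Made-up, all-positive weights: absmax leaves the negative half of the
# int8 range unused, while zero-point spreads values over the full range.
weights = [0.1, 0.4, 0.9, 1.2, 2.0]

am_q, am_scale = absmax_quantize(weights)
am_back = absmax_dequantize(am_q, am_scale)
am_err = max(abs(a - b) for a, b in zip(am_back, weights))

zp_q, zp_scale, zp_zero = zeropoint_quantize(weights)
zp_back = zeropoint_dequantize(zp_q, zp_scale, zp_zero)
zp_err = max(abs(a - b) for a, b in zip(zp_back, weights))

print("absmax:", am_q, "max error:", am_err)
print("zero-point:", zp_q, "max error:", zp_err)
```

Absmax is simpler because zero always maps to zero, while zero-point quantization tends to give a finer step size when the values are skewed to one side, as in this example.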


