Matt Williams On LinkedIn Optimize Your AI Quantization Explained

🚀 Run massive AI models on your laptop! Learn the secrets of LLM quantization and how Q2, Q4, and Q8 settings in Ollama can save you hundreds in hardware co…

Quantization is a crucial technique in artificial intelligence (AI) and machine learning (ML). It plays a vital role in optimizing AI models for deployment, particularly on edge devices where computational resources and power are limited. This article covers the concept of quantization and its different types, along with the related fine-tuning methods LoRA and QLoRA, and their respective…

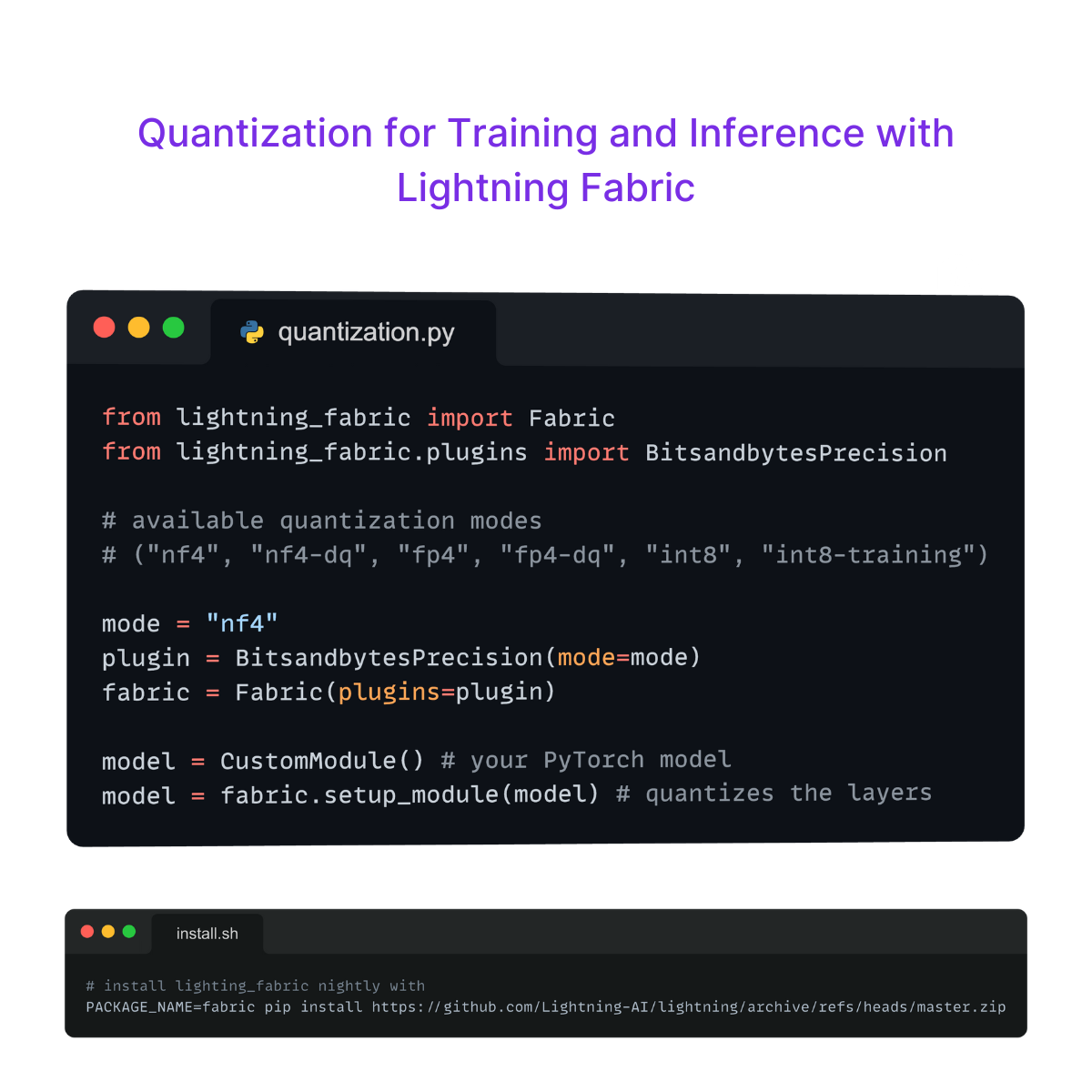

Unify Quantization A Bit Can Go A Long Way

Explore model quantization to boost the efficiency of your AI models! This guide discusses benefits and limitations with a hands-on example.

In conclusion, quantization is a powerful technique for optimizing AI models. It reduces resource consumption while maintaining acceptable accuracy, making AI more accessible and efficient across…

Running a language model with the same quality as ChatGPT within your own company requires expensive hardware. But don't worry, there is an optimization: quantization can drastically reduce the hardware requirements of LLMs (by up to 80%). In this post, I'll explain how it works.

Quantization is an optimization technique aimed at reducing the computational load and memory footprint of neural networks without significantly impacting model accuracy. It converts a model's high-precision floating-point numbers into lower-precision representations such as integers, which results in faster inference, lower energy consumption, and reduced storage.
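To make the float-to-integer conversion concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It assumes a single scale factor for the whole list of weights, and the function names and sample values are illustrative only. Production toolchains typically quantize in small blocks with more elaborate schemes, so treat this as an outline of the idea rather than any particular library's method.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to signed 8-bit integers using one shared scale (assumed scheme)."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # The largest magnitude maps to 127; fall back to 1.0 if every value is zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the symmetric int8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the stored integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each weight is stored as one byte plus a shared scale, and the round-trip error stays within half a quantization step. That small, bounded error is the trade-off behind the "acceptable accuracy" claim.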

AI Quantization Explained With Alex Mead Faster Smaller Models AI

With the rapid evolution of AI technologies, understanding how quantization works, and how it affects your model's performance, is more crucial than ever. In today's fast-paced digital world, every millisecond counts.

The video explains quantization in AI models, highlighting how it enables large models to run on basic hardware by reducing parameter precision and memory requirements through levels like Q2, Q4, and Q8. It also introduces context quantization to optimize memory usage for conversation history, demonstrating significant memory savings and encouraging users to experiment with different…
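As a rough illustration of why levels like Q2, Q4, and Q8 matter, the sketch below estimates the memory needed for model weights alone at different bit widths. The 7-billion-parameter model size is a hypothetical example. The bit widths are nominal, and real quantized formats also store scales and other per-block metadata, so actual files are somewhat larger than these figures suggest.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights only, in GiB (ignores format overhead)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


# Hypothetical model size, chosen only for illustration.
PARAMS = 7e9

# Nominal bits per weight for each level (an assumption; real formats vary).
levels = {"fp16": 16, "q8": 8, "q4": 4, "q2": 2}

estimates = {name: weight_memory_gib(PARAMS, bits) for name, bits in levels.items()}

for name, gib in estimates.items():
    saving = 1 - gib / estimates["fp16"]
    print(f"{name}: {gib:.1f} GiB ({saving:.0%} smaller than fp16)")
```

The estimate covers weights only. Conversation history (the context) takes additional memory on top of this, which is the part that context quantization targets.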

Quantization In Depth DeepLearning.AI

What Is Quantization Lightning AI

What Is Quantization Artificial Intelligence Explained Chatgptguide Ai