Optimizing LLMs Through Quantization: A Hands-On Tutorial

This repository contains resources and code for "Optimizing LLMs Through Quantization: A Hands-On Tutorial", a session in the Analytics Vidhya DataHour series. The tutorial explores quantization of large language models (LLMs) to make deployment more efficient while largely preserving model accuracy. Learn how LLM quantization improves efficiency and reduces computational demands, and explore post-training quantization (PTQ) and quantization-aware training (QAT) through hands-on Jupyter notebooks that optimize models without significantly sacrificing accuracy. Join this webinar for practical insights and advanced techniques!
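
Post-training quantization can be as simple as rescaling an already trained weight tensor onto an integer grid. The NumPy sketch below assumes symmetric absmax quantization with a single scale per tensor; the function names are hypothetical and this is not code from the tutorial's notebooks. Quantization-aware training typically inserts the same quantize/dequantize round trip into the forward pass during training, so the weights learn to tolerate the rounding error.

```python
import numpy as np

def absmax_quantize(w: np.ndarray, bits: int = 8):
    """Symmetric (absmax) post-training quantization with one scale per tensor.

    Supports bits <= 8 because the result is stored as np.int8.
    """
    if not 2 <= bits <= 8:
        raise ValueError("bits must be between 2 and 8")
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8 bits
    scale = float(np.max(np.abs(w))) / qmax     # largest magnitude maps to +/-qmax
    if scale == 0.0:
        scale = 1.0                             # all-zero tensor: any scale works
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int8)
    return q, scale

def absmax_dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map the integer codes back to approximate float values."""
    return q.astype(np.float32) * scale

# Usage: quantize a small random "weight matrix" after training (no retraining needed).
rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.02, size=(4, 4)).astype(np.float32)
q, scale = absmax_quantize(w)
w_hat = absmax_dequantize(q, scale)
print(q)
print("max abs error:", float(np.max(np.abs(w - w_hat))))
```

Because every value is rounded to the nearest multiple of `scale`, the per-element reconstruction error is at most `scale / 2`. A single outlier inflates `scale` for the whole tensor, which is why practical methods often use per-channel or per-group scales instead.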

Local LLMs: Lightweight LLM Using Quantization (Reinventedweb)

Quantization is a powerful strategy for optimizing LLM inference: it can substantially improve performance at the cost of a small reduction in accuracy. This guide explores model quantization as a way to boost the efficiency of AI models, discussing its benefits and limitations with a hands-on example. What's next? If you enjoyed this post, check out my other deep dives into LLM optimization, quantization, and model efficiency on my Medium profile. Gain insights into fine-tuning LLMs with LoRA and QLoRA, and explore parameter-efficient methods, LLM quantization, and hands-on exercises for adapting AI models efficiently with minimal resources.
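
To put "lightweight" into numbers: the memory needed just to hold a model's weights is roughly the parameter count times the bits per parameter, divided by 8 to get bytes. The sketch below assumes a hypothetical 7-billion-parameter model and counts weights only; activations, the KV cache, and the per-block scale factors that real 4-bit formats store are deliberately ignored.

```python
def weight_memory_gib(num_params: int, bits_per_param: int) -> float:
    """Approximate storage for the weights alone, in GiB (2**30 bytes)."""
    return num_params * bits_per_param / 8 / 2**30

# Hypothetical 7-billion-parameter model; real checkpoints also store
# quantization scales and need extra memory for activations and the KV cache.
NUM_PARAMS = 7_000_000_000
for dtype, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{dtype}: {weight_memory_gib(NUM_PARAMS, bits):.1f} GiB")
```

Each halving of the bit width halves the weight memory, from roughly 26 GiB at fp32 to about 3.3 GiB at int4 for this hypothetical model, which is what makes running such models on local hardware plausible.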

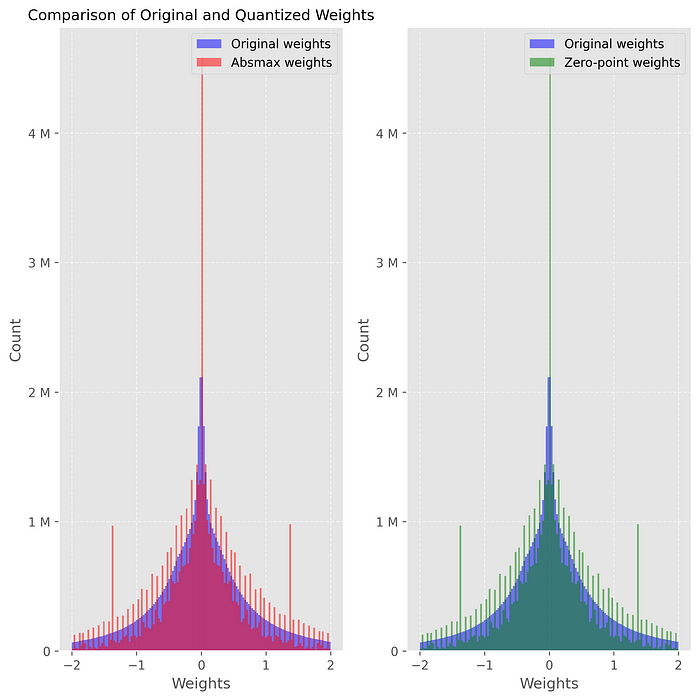

Naive Quantization Methods for LLMs: A Hands-On

As we move toward edge deployment, reducing the size of LLMs without compromising performance or output quality becomes crucial. One effective way to achieve this is quantization. In this article, we explore quantization in depth, survey several state-of-the-art quantization methods, and show how to use them.
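
The simplest ("naive") quantization schemes map floats to integers using a single scale factor per tensor. Symmetric absmax scaling is one such scheme; asymmetric zero-point quantization, sketched below in NumPy, adds an integer offset so that skewed value ranges use the full integer range. This is a minimal illustration under stated assumptions (per-tensor scale, unsigned 8-bit codes, hypothetical function names), not the implementation used in the article.

```python
import numpy as np

def zeropoint_quantize(x: np.ndarray, bits: int = 8):
    """Asymmetric (min-max / zero-point) quantization to unsigned integers.

    Supports bits <= 8 because the result is stored as np.uint8.
    """
    if not 2 <= bits <= 8:
        raise ValueError("bits must be between 2 and 8")
    qmin, qmax = 0, 2 ** bits - 1
    # Stretch the range to include 0.0 so that zero is represented exactly.
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0                                  # all-zero tensor
    zero_point = int(round(qmin - x_min / scale))    # integer code for real 0.0
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.uint8)
    return q, scale, zero_point

def zeropoint_dequantize(q: np.ndarray, scale: float, zero_point: int) -> np.ndarray:
    """Undo the offset and the scaling to recover approximate floats."""
    return (q.astype(np.float32) - zero_point) * scale

# Usage: a skewed range such as [-1, 3] uses all 256 codes instead of
# wasting the part of [-3, 3] that symmetric absmax scaling would reserve.
x = np.array([-1.0, 0.0, 0.5, 3.0], dtype=np.float32)
q, scale, zp = zeropoint_quantize(x)
x_hat = zeropoint_dequantize(q, scale, zp)
print(q, zp)
print(x_hat)
```

Including 0.0 in the range is a common design choice: it guarantees that exact zeros (for example, padding or ReLU outputs) survive the round trip unchanged.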

List: Quantization on LLMs, curated by Majid Shaalan on Medium