Pyspark Vs Pandas Vs Sql Syntax Introduction Inside Databricks

PySpark: explode JSON in a column to multiple columns. I have a PySpark DataFrame consisting of one column, called `json`, where each row is a Unicode string of JSON. I'd like to parse each row and return a new DataFrame where each row is the parsed JSON.

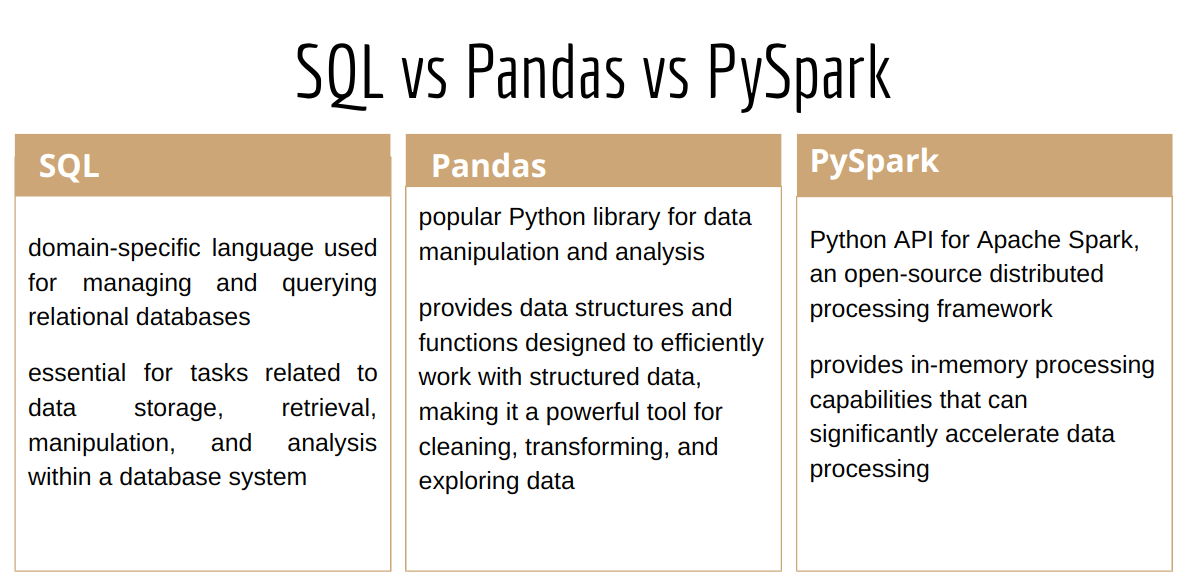

Comparison Sql Vs Pandas Vs Pyspark

I am trying to filter a DataFrame in PySpark using a list. I want to either filter based on the list or include only those records with a value in the list. My code below does not work: `# define a.`

`pyspark.sql.functions.when` takes a Boolean column as its condition. When using PySpark, it's often useful to think "column expression" when you read "column". Logical operations on PySpark columns use the bitwise operators: `&` for and, `|` for or, and `~` for not. When combining these with comparison operators such as `<`, parentheses are often needed, because Python gives `&` and `|` higher precedence than comparisons.

Datetime range filter in PySpark SQL

Pyspark Vs Pandas Vs Sql Get The Columns And Datatypes 02

On PySpark, you can also use `bool(df.head(1))` to obtain a `True` or `False` value; it returns `False` if the DataFrame contains no rows.

With a PySpark DataFrame, how do you do the equivalent of pandas `df['col'].unique()`? I want to list all the unique values in a PySpark DataFrame column, not the SQL-type way (registertemplate the.

Pyspark Vs Pandas Vs Sql Read A File 01 By Yayinirathinam Mar 2024

Basic Syntax In Sql Pandas Pyspark By Bysanitribhuvan Medium

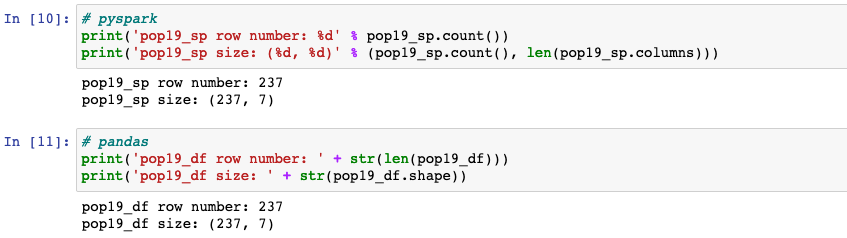

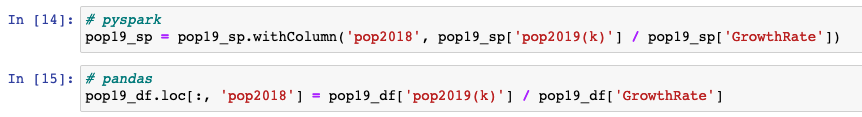

Data Transformation With Pandas Vs Pyspark Jingwen Zheng
