QLoRA: Efficient Finetuning of Quantized LLMs

Abstract: We present QLoRA, an efficient finetuning approach that reduces memory usage enough to finetune a 65B-parameter model on a single 48 GB GPU while preserving full 16-bit finetuning task performance. QLoRA backpropagates gradients through a frozen, 4-bit quantized pretrained language model into Low-Rank Adapters (LoRA). Our best model family, which we name Guanaco, outperforms all previous openly released models on the Vicuna benchmark.
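The claim that a 65B-parameter model fits on a single 48 GB GPU can be checked with back-of-the-envelope arithmetic. The sketch below is a minimal estimate, assuming 1 GB = 10^9 bytes. It also assumes 4-bit weights quantized in blocks of 64 with one 32-bit scaling constant per block. With double quantization, those constants are themselves stored in 8 bits, with one 32-bit constant per 256 of them. These block sizes are the values we understand the QLoRA paper to use; treat them as assumptions. The function names are hypothetical. The estimate counts base-model weights only, so LoRA parameters, activations, gradients, and optimizer states come on top.

```python
def bits_per_param(weight_bits: int = 4, block: int = 64, const_bits: int = 32,
                   double_quant: bool = False, dq_bits: int = 8, block2: int = 256) -> float:
    """Storage cost per weight, including the per-block quantization constants (assumed layout)."""
    if not double_quant:
        # One full-precision absmax constant shared by every `block` weights.
        return weight_bits + const_bits / block
    # Double quantization: first-level constants stored in `dq_bits`, plus one
    # full-precision second-level constant per `block2` first-level constants.
    return weight_bits + dq_bits / block + const_bits / (block * block2)


def weight_gb(n_params: float, bits: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return n_params * bits / 8 / 1e9


if __name__ == "__main__":
    n = 65e9  # 65B parameters
    print(f"16-bit weights:          {weight_gb(n, 16):.1f} GB")
    print(f"4-bit, fp32 constants:   {weight_gb(n, bits_per_param()):.1f} GB")
    print(f"4-bit, double-quantized: {weight_gb(n, bits_per_param(double_quant=True)):.1f} GB")
```

Under these assumptions, the 16-bit weights alone take about 130 GB. The 4-bit weights with double-quantized constants need about 33.5 GB, which leaves headroom on a 48 GB card. Double quantization trims the constant overhead from 0.5 to about 0.127 bits per parameter, roughly 3 GB for a 65B model.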

Conclusion: QLoRA presents an efficient finetuning approach for quantized language models that reduces memory usage without sacrificing task performance. By combining 4-bit NormalFloat (NF4) quantization, double quantization, and paged optimizers, QLoRA makes it possible to finetune large-scale models on limited computational resources.

Finetuning is an important process for improving the performance of large language models (LLMs) and customizing their behavior for specific tasks. However, finetuning very large models can be extremely expensive because of the large amount of memory it requires. Researchers from the University of Washington developed QLoRA (Quantized Low-Rank Adapters) to address this.
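To make "4-bit NormalFloat" concrete, here is a minimal pure-Python sketch of NF4-style blockwise quantization. The 16 levels are quantiles of a standard normal distribution, normalized into [-1, 1], with an exact zero. Each block of weights is scaled by its absolute maximum, and every value is stored as the 4-bit index of its nearest level. The offset `0.9677083` and this asymmetric 8-positive/7-negative construction mirror what we believe bitsandbytes uses; treat both as assumptions. All function names are hypothetical, and this is an illustration rather than the QLoRA implementation.

```python
from statistics import NormalDist
from typing import List, Tuple


def _linspace(start: float, stop: float, n: int) -> List[float]:
    """n evenly spaced points from start to stop, inclusive."""
    return [start + (stop - start) * i / (n - 1) for i in range(n)]


def nf4_code(offset: float = 0.9677083) -> List[float]:
    """16 NF4-style levels: 8 positive and 7 negative normal quantiles plus an exact zero."""
    ppf = NormalDist().inv_cdf  # inverse CDF of N(0, 1)
    pos = [ppf(p) for p in _linspace(offset, 0.5, 9)[:-1]]   # 8 positive quantiles
    neg = [-ppf(p) for p in _linspace(offset, 0.5, 8)[:-1]]  # 7 negative quantiles
    levels = sorted(pos + neg + [0.0])
    top = max(levels)
    return [v / top for v in levels]  # normalize so the levels span [-1, 1]


def quantize_block(block: List[float], code: List[float]) -> Tuple[List[int], float]:
    """Absmax-scale a block into [-1, 1] and keep the index (0..15) of the nearest level."""
    absmax = max(abs(x) for x in block) or 1.0  # guard against an all-zero block
    idx = [min(range(len(code)), key=lambda k: abs(x / absmax - code[k])) for x in block]
    return idx, absmax


def dequantize_block(idx: List[int], absmax: float, code: List[float]) -> List[float]:
    """Map 4-bit indices back to approximate weights."""
    return [code[k] * absmax for k in idx]


def quantize(weights: List[float], code: List[float],
             block_size: int = 64) -> List[Tuple[List[int], float]]:
    """Blockwise quantization: one absmax constant per block of `block_size` weights."""
    blocks = [weights[i:i + block_size] for i in range(0, len(weights), block_size)]
    return [quantize_block(b, code) for b in blocks]


if __name__ == "__main__":
    code = nf4_code()
    w = [0.0, 0.5, -1.2, 2.0, 0.03, -0.7]
    for idx, absmax in quantize(w, code, block_size=4):
        print(idx, absmax, dequantize_block(idx, absmax, code))
```

Blockwise scaling keeps a single outlier from crushing the resolution of the whole tensor, because it only affects its own block. The stored per-block `absmax` constants are what double quantization compresses further.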

QLoRA: Efficient Finetuning of Quantized LLMs (16 Oct 2023)

Introduction: Previously, we discussed Low-Rank Adapters (LoRA) as a method for efficiently finetuning large language models (LLMs). In this post, we discuss QLoRA, a method that builds on LoRA by training the adapters on top of a 4-bit quantized base model, which makes LLM finetuning even more memory-efficient. QLoRA reduces the memory requirements for …

The QLoRA finetuning process is a step forward in efficiently tuning large language models. It introduces techniques that address both the traditionally high memory requirements of finetuning and the performance trade-offs of finetuning quantized models.
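Because QLoRA builds on LoRA, it helps to see where gradients flow. The following is a minimal pure-Python sketch of one linear layer with a LoRA adapter, computing y = W x + scale · B (A x). Here `W` stands in for the frozen, already-dequantized 4-bit weight. The sketch assumes a squared-error loss, a single input vector, and hypothetical function names. The gradient is computed only for the adapter matrices `A` and `B`, mirroring how QLoRA backpropagates through the frozen base weights without ever updating them.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product for row-major lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w_frozen: Matrix, a: Matrix, b: Matrix, x: Vector,
                 scale: float) -> Tuple[Vector, Vector]:
    """y = W x + scale * B (A x); returns y and the rank-r hidden vector h = A x."""
    h = matvec(a, x)            # down-projection to rank r (trainable A)
    delta = matvec(b, h)        # up-projection back to the output size (trainable B)
    base = matvec(w_frozen, x)  # frozen path: W is read, never updated
    return [p + scale * q for p, q in zip(base, delta)], h


def lora_grads(w_frozen: Matrix, a: Matrix, b: Matrix, x: Vector, target: Vector,
               scale: float) -> Tuple[Matrix, Matrix]:
    """Gradients of 0.5 * ||y - target||^2 with respect to the adapters A and B only."""
    y, h = lora_forward(w_frozen, a, b, x, scale)
    g_y = [yi - ti for yi, ti in zip(y, target)]                 # dL/dy
    g_b = [[scale * gi * hk for hk in h] for gi in g_y]          # dL/dB[i][k]
    g_h = [scale * sum(b[i][k] * g_y[i] for i in range(len(g_y)))
           for k in range(len(h))]                               # dL/dh[k]
    g_a = [[gk * xj for xj in x] for gk in g_h]                  # dL/dA[k][j]
    return g_a, g_b


if __name__ == "__main__":
    w = [[1.0, 2.0], [0.5, -1.0]]  # frozen base weight (2 x 2)
    a = [[0.1, 0.2]]               # rank r = 1
    b = [[0.0], [0.0]]             # B starts at zero, so the adapter is a no-op at first
    print(lora_forward(w, a, b, [1.0, 2.0], scale=2.0)[0])
```

Initializing `B` to zero means the adapted layer starts out identical to the frozen one. Training then only has to store gradients and optimizer state for the small `A` and `B` matrices, which is where LoRA's memory savings come from.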


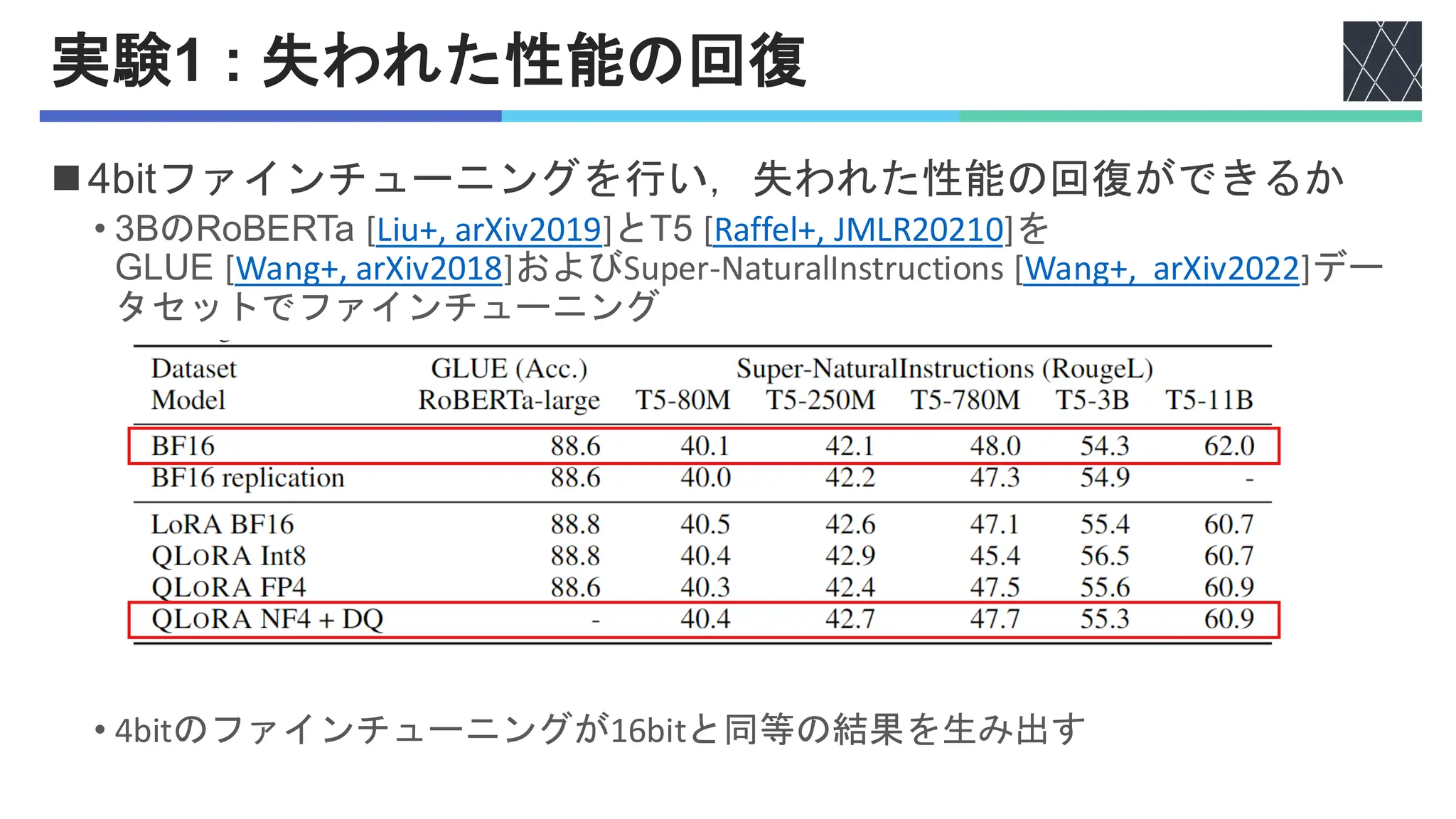

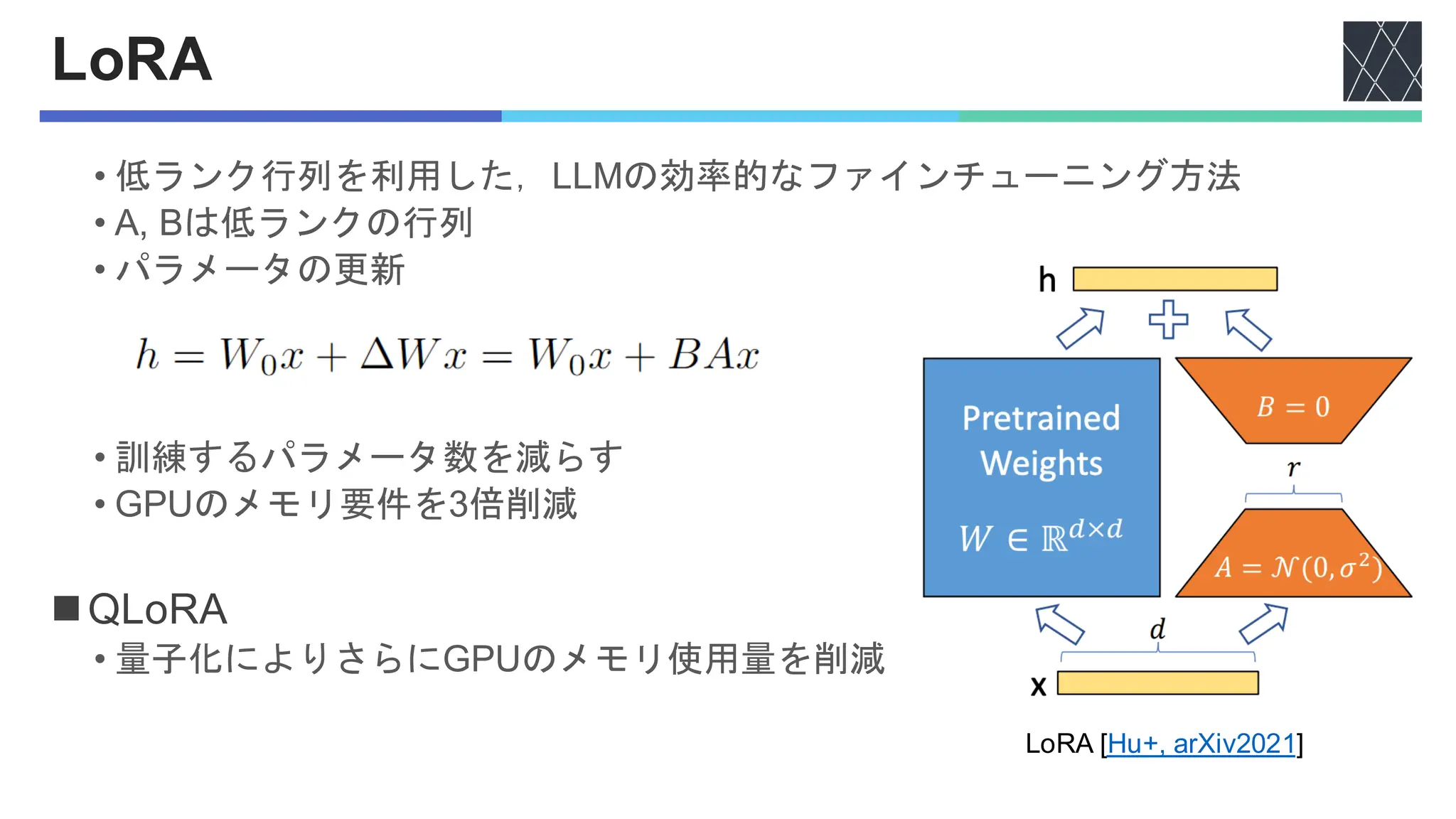

Paper introduction: QLoRA: Efficient Finetuning of Quantized LLMs (PDF)
