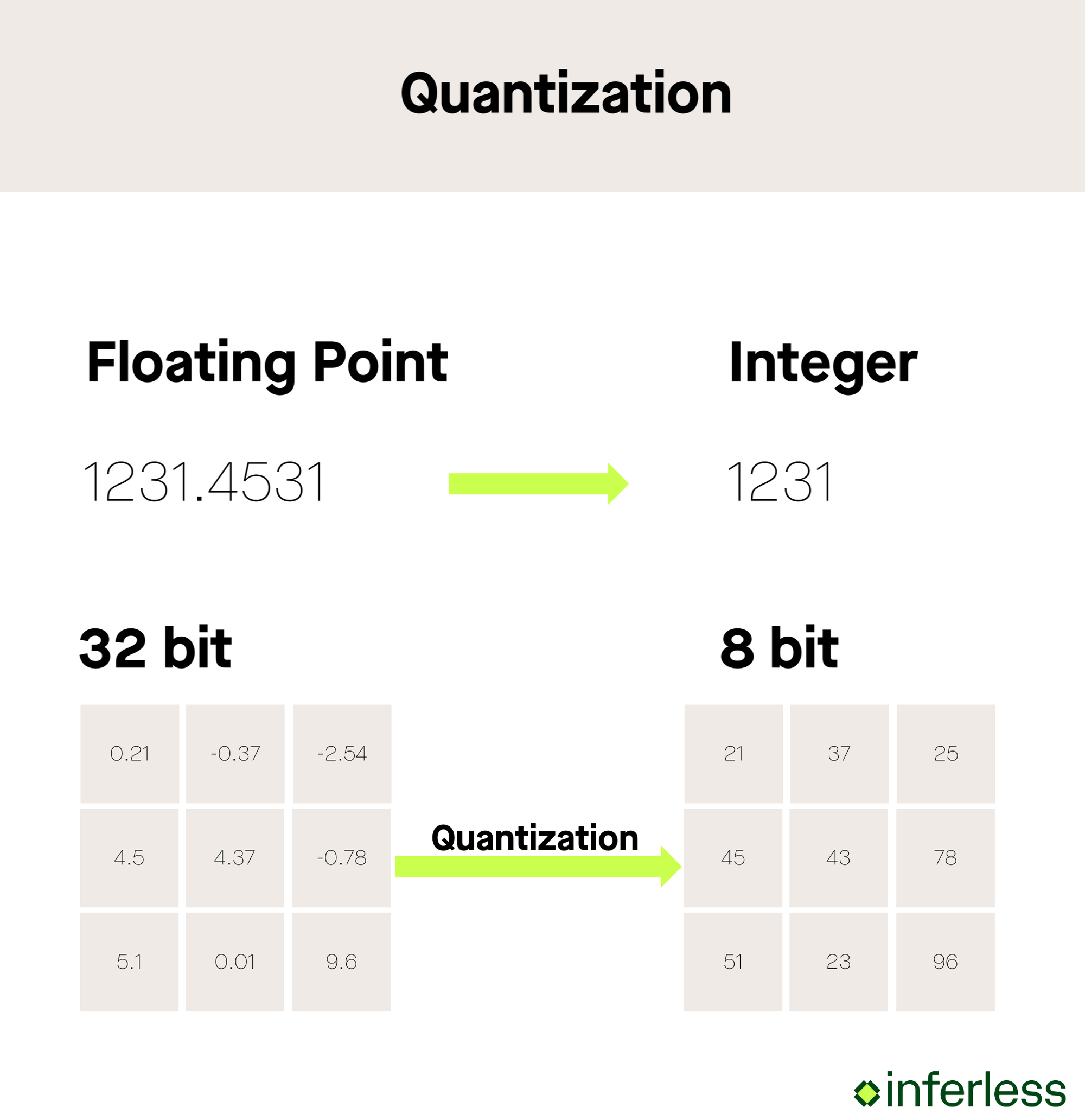

Quantization of Large Language Models (LLMs): A Deep Dive

Large language models (LLMs) have been extensively researched and used in both academia and industry since the rise in popularity of the transformer model, which demonstrates excellent performance in AI. However, the computational demands of LLMs are immense, and the energy resources available to run them are often limited. For instance, GPT-3 has 175 billion parameters. Quantization in LLMs involves reducing the precision of a model's calculations to enhance efficiency, especially for deployment on limited hardware.
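To make "reducing the precision" concrete, here is a minimal sketch of one common scheme, symmetric absmax quantization to 8-bit integers. The function names (`quantize_absmax`, `dequantize`) and the sample weights are illustrative assumptions, not taken from any particular library or from the articles summarized here.

```python
from typing import List, Tuple


def quantize_absmax(weights: List[float], bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers using a single shared scale (symmetric absmax)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0  # avoid dividing by zero
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer codes."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
print(q, scale)
print(restored)
```

Each weight is stored as a small integer plus one shared floating-point scale, so the restored values are close to, but not always identical to, the originals. Production schemes typically use per-channel or per-group scales rather than one scale for the whole tensor.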

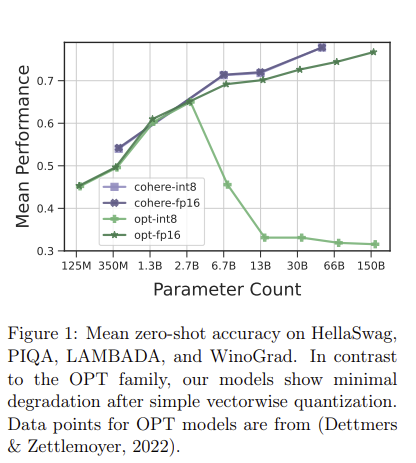

Quantization Challenges in Large Language Models (LLMs) and

The emergence of large language models (LLMs) has revolutionized natural language processing, delivering unprecedented performance across a variety of language tasks. However, the deployment of these models is hindered by substantial computational costs and resource demands due to their size and complexity. This comprehensive review examines recent advancements in model quantization.

Addressing the challenges associated with quantization, such as accuracy degradation and sensitivity to precision changes, remains an ongoing area of research. As quantization techniques continue to advance, we can anticipate further improvements in the efficiency and accessibility of large language models.

Breakthroughs in natural language processing (NLP) by large-scale language models have led to superior performance in multilingual tasks such as translation, summarization, and Q&A. However, the size and complexity of these models raise challenges in terms of computational requirements, memory usage, and energy consumption. Quantization strategies are a type of model compression.

Quantization methods for LLMs

There are several quantization methods available for large language models (LLMs), each with unique advantages and challenges. Below, we outline some popular options, including details on their performance, memory requirements, adaptability, and adoption.
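The accuracy degradation and sensitivity to precision mentioned above can be illustrated with a small experiment. The sketch below quantizes synthetic, normally distributed values (an assumption standing in for real model weights) at several bit widths and measures the round-trip error. The helper `roundtrip_error` is hypothetical and uses simple symmetric rounding, not any specific published method.

```python
import random


def roundtrip_error(weights, bits):
    """Mean absolute error after symmetric quantize -> dequantize at `bits` bits."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    total = 0.0
    for w in weights:
        q = max(-qmax, min(qmax, round(w / scale)))  # nearest level, clamped
        total += abs(w - q * scale)
    return total / len(weights)


random.seed(0)  # fixed seed so the run is repeatable
# Synthetic stand-in for a weight tensor; real weight distributions differ.
weights = [random.gauss(0.0, 1.0) for _ in range(10_000)]

errors = {bits: roundtrip_error(weights, bits) for bits in (8, 4, 2)}
for bits, err in errors.items():
    print(f"{bits}-bit mean abs error: {err:.4f}")
```

The error grows as the bit width shrinks because fewer integer levels are available to cover the same value range. This is a toy measure of weight error only; how it translates into task accuracy depends on the model and the method used.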

Quantization Techniques Demystified: Boosting Efficiency in Large

In essence, quantization serves as a powerful optimization technique that addresses several key challenges associated with deploying and running large language models. It can reduce the size of large language models, enabling efficient AI deployment on everyday devices.
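The size reduction follows from simple arithmetic: weight storage scales with the number of bits per parameter. The sketch below uses a 175-billion-parameter model (the GPT-3 size) as an example. The function `weight_memory_gb` is a hypothetical helper, and the figures cover weights only, ignoring activations, caches, and the small overhead of storing quantization scales.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 175_000_000_000  # 175 billion parameters

# Common precisions and their bit widths.
formats = [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]
footprints = {name: weight_memory_gb(NUM_PARAMS, bits) for name, bits in formats}

for name, gb in footprints.items():
    print(f"{name}: {gb:.1f} GB")
```

Halving the bit width halves the weight footprint, which is why moving from 16-bit to 4-bit storage can decide whether a model fits on a given device at all.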

Large Language Models (LLMs) Tutorial Workshop Argonne National

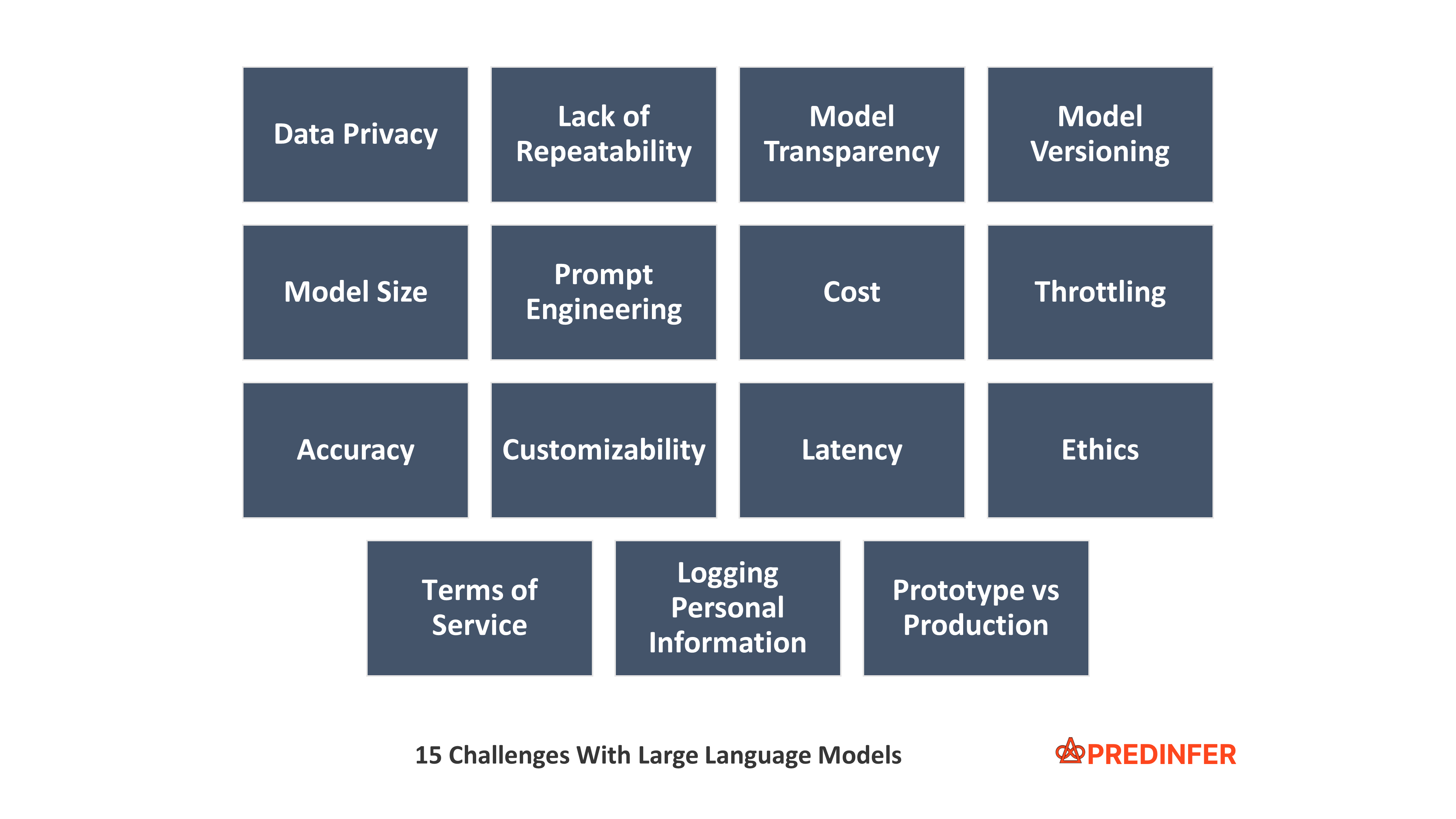

15 Challenges With Large Language Models (LLMs) Blog

Fine Tuning Large Language Models (LLMs) Using 4-bit Quantization By

Large Language Models (LLMs) Challenges Saif Islam Medium