Quantization Tech Of LLMs GGUF We Can Use GGUF To Offload Any Layer Of

Quantizing LLMs: How & Why (8 Bit, 4 Bit, GGUF & More) (Adam Lucek)

An example of quantizing a tensor of 32-bit floats to 8-bit integers, with the addition of double quantization, which then quantizes the newly introduced scaling factors from 32-bit floats to 8-bit floats.
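To make the example above concrete, here is a minimal pure-Python sketch of block-wise absmax quantization followed by a second pass over the scaling factors. It rests on several assumptions that are not in the original: the function names and the block size are hypothetical, and the scales are stored as 8-bit integers in [0, 255] as a stand-in for 8-bit floats. Treat it as an illustration of the idea, not as any library's implementation.

```python
def quantize_blockwise_int8(values, block_size=4):
    """Absmax-quantize floats to ints in [-127, 127], one scale per block."""
    q, scales = [], []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        # One float scale per block; fall back to 1.0 for an all-zero block.
        scale = max(abs(v) for v in block) / 127.0 or 1.0
        scales.append(scale)
        q.extend(round(v / scale) for v in block)
    return q, scales


def quantize_scales_8bit(scales):
    """Second pass ("double quantization"): store each scale in 8 bits.

    Assumption: integers in [0, 255] stand in for 8-bit floats here.
    """
    meta_scale = max(scales) / 255.0  # the single float kept for all scales
    return [round(s / meta_scale) for s in scales], meta_scale


def dequantize(q, q_scales, meta_scale, block_size=4):
    """Rebuild approximate floats from the 8-bit values and 8-bit scales."""
    return [qi * q_scales[i // block_size] * meta_scale
            for i, qi in enumerate(q)]


weights = [0.12, -0.5, 0.33, 0.9, -1.7, 0.04, 0.6, -0.25]
q, scales = quantize_blockwise_int8(weights)
q_scales, meta_scale = quantize_scales_8bit(scales)
restored = dequantize(q, q_scales, meta_scale)
print(max(abs(a - b) for a, b in zip(weights, restored)))
```

The printed value is the worst-case reconstruction error. It grows slightly once the scales themselves are quantized, which is the price paid for the extra memory saving.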

Yeehaw, y'all 🤠 I've been pondering a lot about quantization and its impact on large language models (LLMs). As you all may know, quantization techniques like 4-bit and 8-bit quantization have been a boon for us consumers, allowing us to run larger models than our hardware would typically be able to handle. However, it's clear that there has to be a trade-off. Quantization essentially...

In the context of large language models (LLMs), quantization transforms 32-bit floating-point parameters into more compact representations like 8-bit or 4-bit integers, enabling efficient deployment in resource-constrained environments. It has also been shown that performance is **largely preserved** even when a model is converted to lower-precision data such as 8-bit and 4-bit. This makes many models more accessible, so LLMs can be used on a wide range of devices.

To store a neural network in memory, you can use 16-bit floats or 8-bit floats, with 8-bit floats taking up less space in memory. The process of quantization attempts to reduce the memory size of LLMs while maintaining an acceptable level of performance and accuracy, and as you will see, it's a bit more complicated than just casting.
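As a rough illustration of why lower precision matters for consumer hardware, the sketch below estimates weight storage at different bit widths. The 7-billion-parameter count and the function name are hypothetical, and the estimate covers weights only: it ignores quantization scales, activations, and any runtime overhead.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 7-billion-parameter model at several precisions.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, each halving of the bit width halves the storage needed for the weights, which is what lets a larger model fit on the same device.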

What Are Quantized LLMs

GGUF (GPT-Generated Unified Format), meanwhile, is a successor to GGML and is designed to address its limitations, most notably by enabling the quantization of non-Llama models. GGUF is also extensible, allowing new features to be integrated while retaining compatibility with older LLMs.

GGUF is central to the quantization process itself, providing robust support for various quantization levels, typically ranging from 2-bit to 8-bit precision. The general workflow involves taking an original, full-precision LLM (like a Llama model), converting it into the GGUF format, and then applying a specific quantization level to this GGUF file.

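The convert-then-quantize workflow described above can be sketched as two command lines. This is an assumption-laden sketch, not part of the original: it presumes a recent llama.cpp checkout in which the conversion script is named `convert_hf_to_gguf.py` and the quantization tool is `llama-quantize` (older versions used different names), and the helper function, paths, and file names are hypothetical. The helper only builds the commands; it does not run them.

```python
from pathlib import Path


def gguf_workflow_commands(model_dir, out_dir, quant_type="Q4_K_M"):
    """Build the two commands: convert to GGUF, then quantize the GGUF file."""
    out = Path(out_dir)
    full_precision = out / "model-f16.gguf"       # hypothetical file name
    quantized = out / f"model-{quant_type}.gguf"  # hypothetical file name

    # Step 1: convert the original model directory to a 16-bit GGUF file.
    convert = ["python", "convert_hf_to_gguf.py", str(model_dir),
               "--outfile", str(full_precision), "--outtype", "f16"]
    # Step 2: apply a specific quantization level to that GGUF file.
    quantize = ["llama-quantize", str(full_precision), str(quantized),
                quant_type]
    return convert, quantize


convert_cmd, quantize_cmd = gguf_workflow_commands("models/my-llm", "out")
print(" ".join(convert_cmd))
print(" ".join(quantize_cmd))
```

Keeping the 16-bit GGUF file as an intermediate means several quantization levels can be produced from one conversion, so the first step does not need to be repeated.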

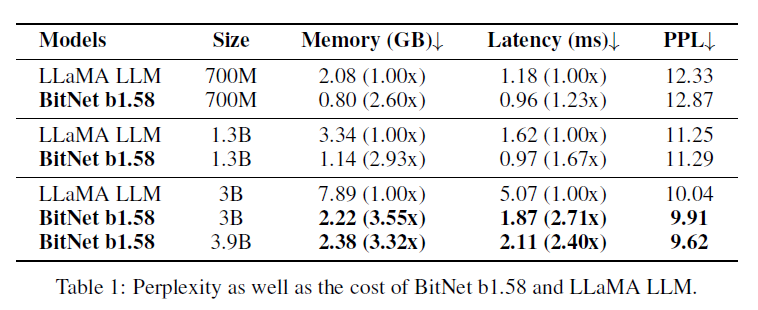

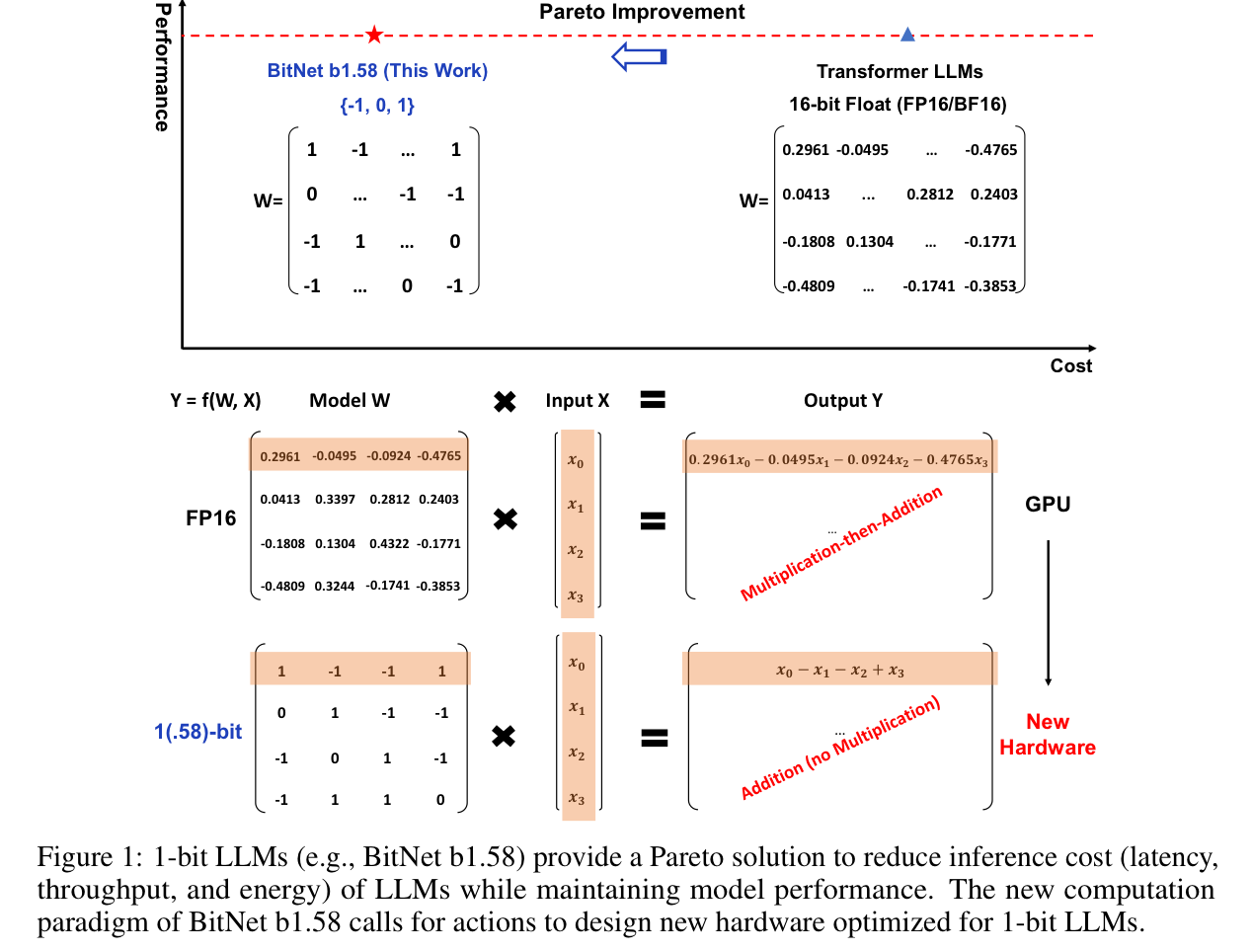

The Era Of 1-Bit LLMs: All LLMs Are In 1.58 Bits


How To Run LLMs On CPU-Based Systems (UnfoldAI)