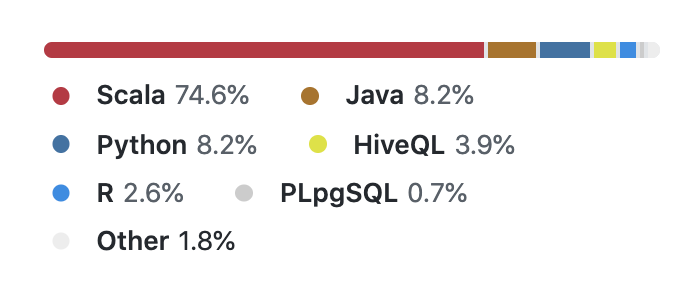

Spark is an awesome framework, and the Scala and Python APIs are both great for most workflows. PySpark is more popular because Python is the most popular language in the data community. Choosing between Scala and PySpark for parallel processing in Spark depends on your specific requirements and priorities.

Pandas UDFs are a much better choice than plain Python UDFs because they use Apache Arrow to optimize data transfer. Even so, data still has to move between the Python process and the JVM, so avoiding UDFs whenever possible is the best approach. If a UDF cannot be avoided, the usual order of preference is Scala UDF > pandas UDF > Python UDF.

PySpark is the Python API for Spark, which is itself written in Scala. To support Python with Spark, the Apache Spark community released PySpark. With PySpark you can also work with RDDs from Python, because it relies on a library called Py4J to communicate with the JVM.

The Python vs. Scala API debate in Spark comes down to your goals: Python for ease and exploration, Scala for speed and production. PySpark's Python layer trades some of Spark's raw performance for accessibility, while Scala taps directly into Spark's power.

Scripting and notebooks: Python is well suited for scripting, and Jupyter notebooks are popular for interactive data analysis and visualization. Performance: Scala, being a statically typed language that runs on the JVM, can often provide better performance and optimization opportunities than Python.
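One reason pandas UDFs tend to beat plain Python UDFs is that the user function is invoked once per batch of values rather than once per row. The sketch below is a toy model in plain Python, not Spark code: the names `plus_one_row`, `plus_one_batch`, `run_row_at_a_time`, and `run_batched` are hypothetical, a Python list stands in for the pandas Series a real pandas UDF would receive, and the batch size of 1,000 is an arbitrary assumption. It only counts function invocations and does not model Arrow or JVM serialization.

```python
def plus_one_row(x):
    """Row-at-a-time function, in the style of a plain Python UDF."""
    return x + 1


def plus_one_batch(xs):
    """Batch function, in the style of a pandas UDF (a list stands in for a Series)."""
    return [x + 1 for x in xs]


def run_row_at_a_time(values):
    """Invoke the row function once per value; return (results, invocation count)."""
    results, calls = [], 0
    for v in values:
        results.append(plus_one_row(v))
        calls += 1
    return results, calls


def run_batched(values, batch_size):
    """Invoke the batch function once per slice; return (results, invocation count)."""
    results, calls = [], 0
    for start in range(0, len(values), batch_size):
        batch = values[start:start + batch_size]
        results.extend(plus_one_batch(batch))
        calls += 1
    return results, calls


values = list(range(10_000))
row_results, row_calls = run_row_at_a_time(values)
batch_results, batch_calls = run_batched(values, batch_size=1_000)

# Both strategies compute the same answer; only the number of calls differs.
print(row_calls, batch_calls)
```

Both runs produce identical results, but the batched version crosses the function boundary far fewer times. In real Spark the gap is usually larger still, because each crossing also involves moving data between the JVM and a Python worker.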

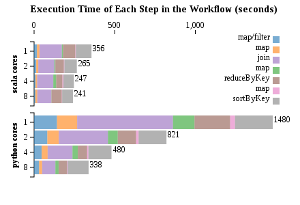

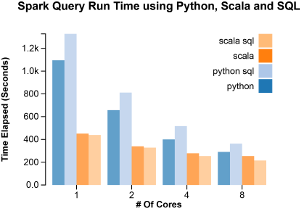

The differences between Scala and PySpark help determine which language is better for you. Scala is generally faster than Python because it is statically typed and compiles to JVM bytecode. Spark itself is written in Scala, so writing Spark jobs in Scala is the native way. PySpark DataFrame code does not run in a traditional Python execution environment: it is translated into Spark SQL query plans and then executed on the Java Virtual Machine (JVM) across the cluster. Python UDFs are the exception, since they run in separate Python worker processes.

Python Vs Scala Vs Spark

Python Vs Scala For Spark Jobs Stack Overflow