Stream Processing with Apache Flink and DC/OS (D2iQ)

Aug 31, 2017, Joerg Schad, D2iQ. Most modern enterprise apps require extremely fast data processing, and event stream processing with Apache Flink is a popular way to reduce latency for time-sensitive computations, such as processing credit card transactions.

Leverage the Flink testkit for streaming test execution, and use the print() method within tasks to debug output.

Step 7: Conclusion. Apache Flink is a powerful tool for real-time data processing. By following this tutorial, you have gained hands-on experience with building a real-time data processing system using Apache Flink.

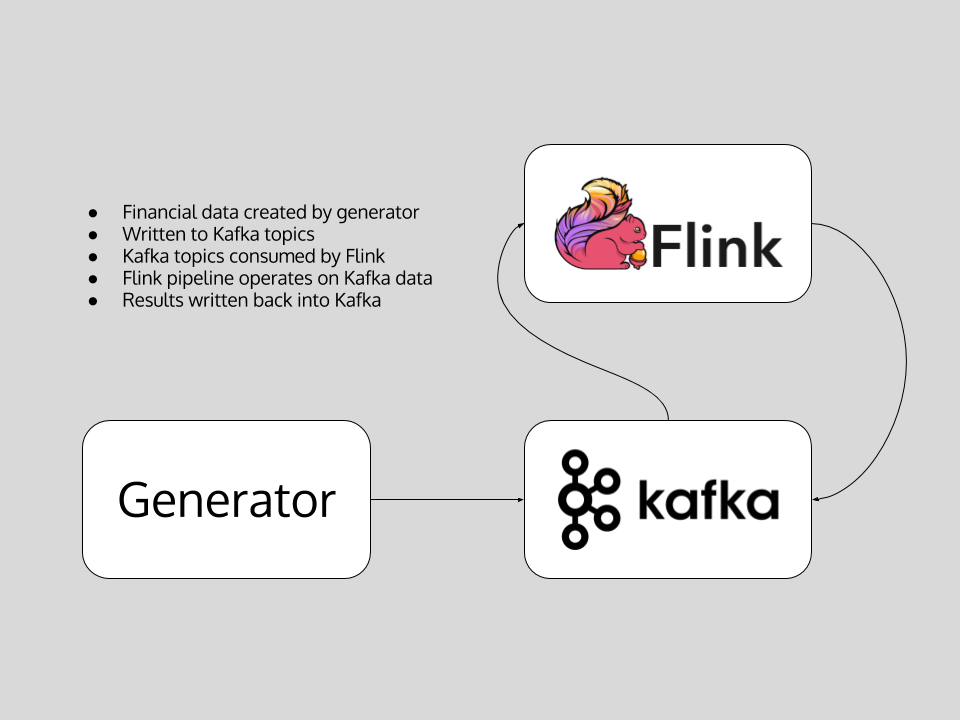

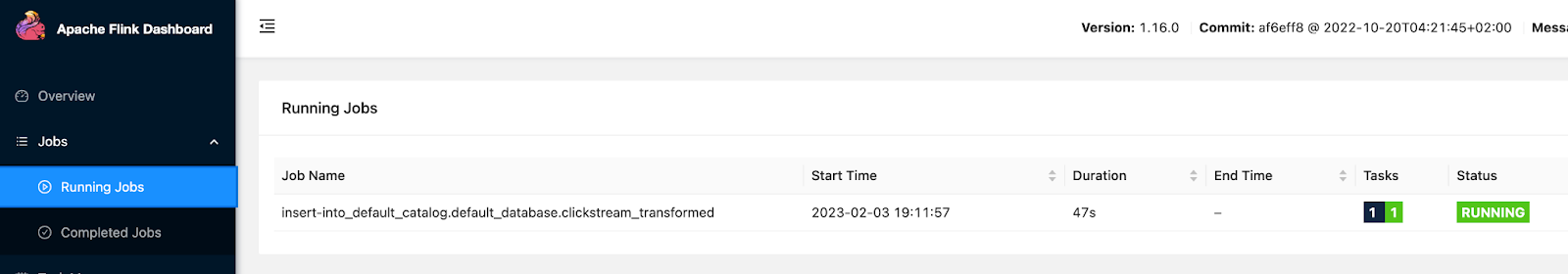

Using Apache Flink for Real-Time Stream Processing in Data Engineering

Businesses need to process data as it comes in, rather than waiting for it to be collected and analyzed later. This is called real-time data processing, and it allows companies to make quick decisions based on the latest information.

Flink basics: Flink is a tool specialized in processing streaming data. It is based on the simple concept of sources, sinks, and processors.

Stream processing: As we require fast response times, we use Apache Flink as a stream processor running the FinancialTransactionJob.

Storage: Here we diverge a bit from the typical SMACK stack setup and don't write the results into a datastore such as Apache Cassandra. Instead, we write the results back into a Kafka stream (default: 'fraud').
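The source, processor, and sink roles described above can be illustrated with a minimal sketch in plain Python. This is not Flink's API and not the code of the job mentioned in the text. The `Transaction` type, the threshold rule, and all function names are hypothetical and exist only to show how events flow from a source, through a processor, into a sink that stands in for the 'fraud' stream.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass(frozen=True)
class Transaction:
    """Hypothetical event type; the original text does not define one."""
    account: str
    amount: float


def source(records: Iterable[Transaction]) -> Iterator[Transaction]:
    """Source: emits events one at a time (stands in for an input stream)."""
    for record in records:
        yield record


def flag_suspicious(stream: Iterable[Transaction],
                    threshold: float) -> Iterator[Transaction]:
    """Processor: forwards only transactions above the threshold.

    The threshold rule is an assumption made for illustration; the
    original text does not say how fraud is detected.
    """
    for tx in stream:
        if tx.amount > threshold:
            yield tx


def sink(stream: Iterable[Transaction], topic: List[Transaction]) -> None:
    """Sink: appends results to a list that stands in for the 'fraud' stream."""
    for tx in stream:
        topic.append(tx)


def run_pipeline(records: Iterable[Transaction],
                 threshold: float = 10_000.0) -> List[Transaction]:
    """Wire source -> processor -> sink and return the sink's contents."""
    fraud_topic: List[Transaction] = []
    sink(flag_suspicious(source(records), threshold), fraud_topic)
    return fraud_topic
```

Because each stage is a generator, events are pulled through the pipeline one at a time rather than collected first, which loosely mirrors how a stream processor handles records as they arrive. A real deployment would replace the list-backed source and sink with connectors to the actual streams.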

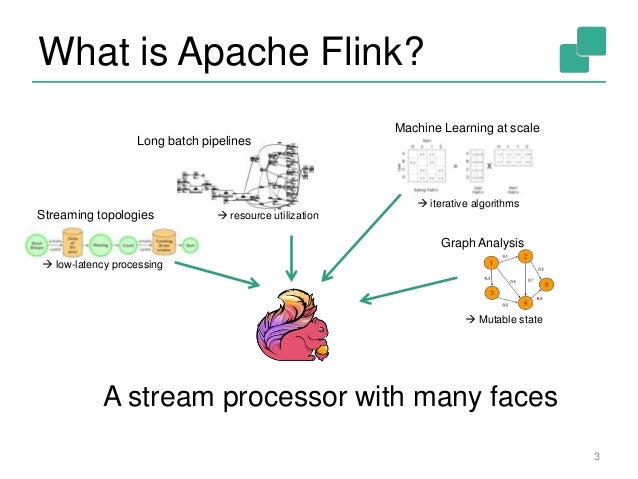

Getting Started: Stream Processing Using Apache Flink and Redpanda

Apache Flink is a distributed stream processor with intuitive and expressive APIs to implement stateful stream processing applications. It efficiently runs such applications at large scale in a fault-tolerant manner.

This post is the first of a series of blog posts on Flink Streaming, the recent addition to Apache Flink that makes it possible to analyze continuous data sources in addition to static files. Flink Streaming uses the pipelined Flink engine to process data streams in real time and offers a new API, including the definition of flexible windows. In this post, we go through an example that uses the.
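To make the idea of windows more concrete, here is a minimal sketch of a tumbling (fixed-size, non-overlapping) window count in plain Python. It is not Flink's windowing API. The function name, the `(timestamp, key)` event shape, and the integer timestamps are assumptions chosen for illustration, and the sketch processes a finite list rather than an unbounded stream.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]],
    window_size: int,
) -> Dict[Tuple[int, str], int]:
    """Count events per key in fixed-size, non-overlapping time windows.

    Each event is a hypothetical (timestamp, key) pair. An event belongs
    to the window whose start is the timestamp rounded down to a multiple
    of window_size.
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")

    # Keyed state: one running counter per (window_start, key) pair.
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for timestamp, key in events:
        window_start = (timestamp // window_size) * window_size
        counts[(window_start, key)] += 1
    return dict(counts)
```

The per-key counters are a small example of the state a stateful stream processing application keeps between events. A real stream processor additionally has to decide when a window is complete and its result can be emitted, which this sketch sidesteps by reading all events before returning.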

Data Stream Processing with Apache Flink

Stream Processing Apache Flink PPT