Tokenization in NLP: Methods, Types, and Challenges

Explore tokenization, one of the key pieces of natural language processing (NLP), and learn how it is used across professional industries and how to decide whether it is the right NLP method for your task. Tokenization is a foundational step in the NLP pipeline that shapes the entire workflow. It involves dividing a string or text into a list of smaller units known as tokens. A tokenizer segments unstructured data and natural-language text into distinct chunks of information, each of which is treated as a separate element.
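As a concrete illustration, here is a minimal Python sketch of word-level tokenization. It contrasts naive whitespace splitting with a simple regular-expression tokenizer. The regex pattern and the function names are illustrative assumptions, not a standard; production tokenizers handle many more cases (URLs, hyphenation, non-Latin scripts).

```python
import re


def whitespace_tokenize(text: str) -> list[str]:
    # Naive approach: split on runs of whitespace.
    # Punctuation stays glued to the neighbouring word ("hard,", "it?").
    return text.split()


def regex_tokenize(text: str) -> list[str]:
    # A run of word characters (optionally with one internal apostrophe,
    # as in "isn't") is one token; every other non-space character is
    # emitted as its own token.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", text)


sentence = "Tokenization isn't hard, is it?"
print(whitespace_tokenize(sentence))
# ['Tokenization', "isn't", 'hard,', 'is', 'it?']
print(regex_tokenize(sentence))
# ['Tokenization', "isn't", 'hard', ',', 'is', 'it', '?']
```

The whitespace version shows how a careless tokenizer produces distinct tokens such as "hard," and "hard", which makes downstream counting and lookup less reliable.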

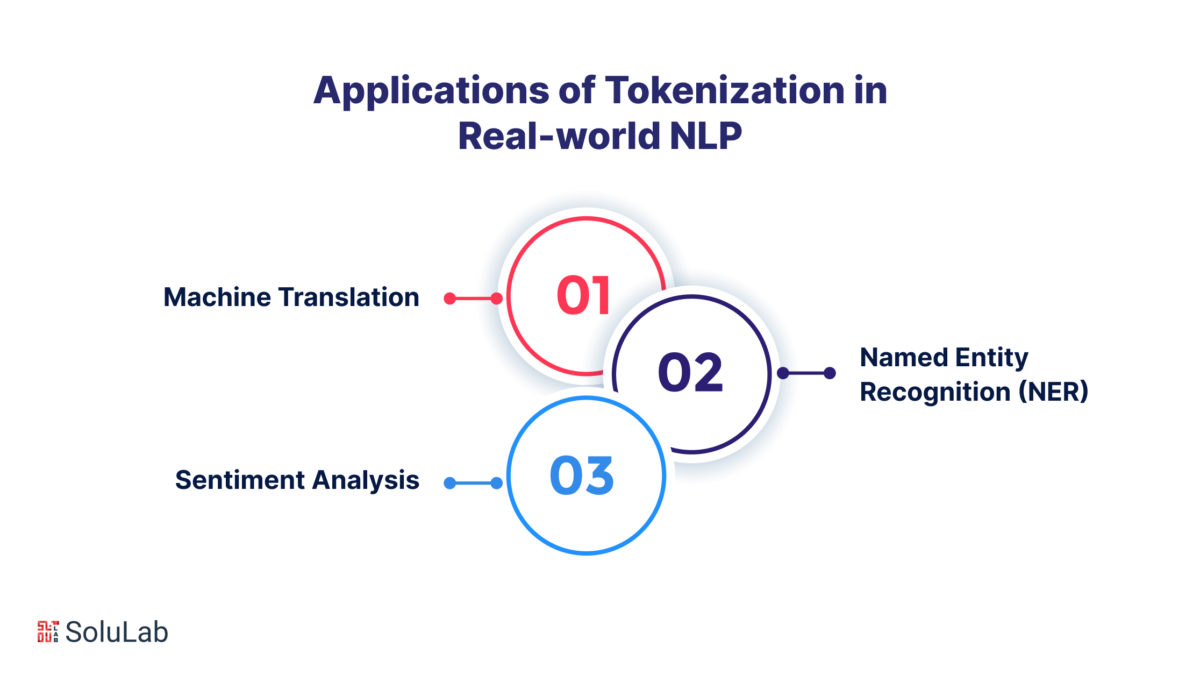

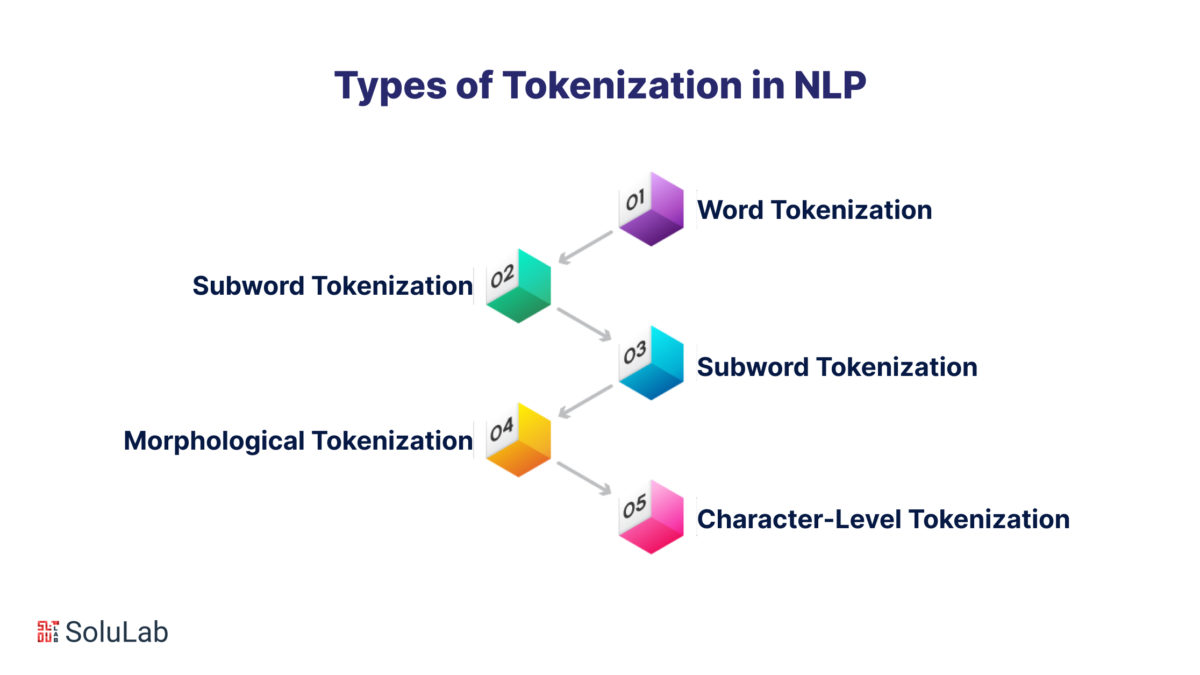

Tokenization is a foundational concept in natural language processing (NLP), a branch of artificial intelligence that enables machines to understand and process human language. Together with sentiment analysis, advanced tokenization is one of the core pillars of modern NLP: raw text can be converted into structured input using subword, character-level, and adaptive tokenization techniques, and sentiment can then be extracted using rule-based, statistical, and deep learning models. Tokenization is essential in many NLP activities because it lets the system digest and analyze text in smaller chunks. It underpins tasks such as text classification, parsing, translation, and part-of-speech tagging, and when it is done incorrectly, it becomes much harder to recover the structure and meaning of language. By breaking text down into manageable units, tokenization simplifies the processing of textual data and serves as the foundation for many text analysis and machine learning tasks, enabling more effective and accurate NLP applications.
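The difference between character-level and subword tokenization can be sketched in a few lines of Python. The subword function below uses greedy longest-match-first segmentation in the style of WordPiece, with "##" marking a piece that continues a word. The toy vocabulary and function names are hypothetical; real subword tokenizers (BPE, WordPiece, Unigram) learn their vocabularies from a corpus, and adaptive tokenization is not covered here.

```python
def char_tokenize(word: str) -> list[str]:
    # Character-level: every character becomes its own token.
    return list(word)


def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    # Greedy longest-match-first segmentation (WordPiece-style).
    # Pieces after the first are looked up with a "##" prefix.
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            # No vocabulary entry covers this position: whole word is unknown.
            return ["[UNK]"]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary, invented for illustration only.
VOCAB = {"token", "##ization", "##ize", "##s", "un", "##believ", "##able"}

print(char_tokenize("token"))                    # ['t', 'o', 'k', 'e', 'n']
print(subword_tokenize("tokenization", VOCAB))   # ['token', '##ization']
print(subword_tokenize("tokens", VOCAB))         # ['token', '##s']
print(subword_tokenize("unbelievable", VOCAB))   # ['un', '##believ', '##able']
print(subword_tokenize("xyz", VOCAB))            # ['[UNK]']
```

Subword pieces let a small vocabulary cover rare or unseen words by composing them from frequent fragments, while character-level tokens never go out of vocabulary but produce much longer sequences.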

Tokenization is a preprocessing technique used in natural language processing (NLP). NLP tools generally process text in linguistic units, such as words, clauses, sentences, and paragraphs.
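Splitting text into the larger linguistic units mentioned above can be sketched with simple rules. This Python example assumes paragraphs are separated by blank lines and that a sentence ends at ".", "!" or "?" followed by whitespace. Both rules, and the function names, are illustrative assumptions: abbreviations such as "e.g." will be split incorrectly, and clause segmentation is not attempted.

```python
import re


def split_paragraphs(text: str) -> list[str]:
    # Paragraphs are separated by one or more blank lines.
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def split_sentences(paragraph: str) -> list[str]:
    # Naive rule: split on whitespace that follows ".", "!" or "?".
    return [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]


text = "NLP tools read text. They split it into units!\n\nWhy? Smaller units are easier to process."

for p in split_paragraphs(text):
    print(split_sentences(p))
# ['NLP tools read text.', 'They split it into units!']
# ['Why?', 'Smaller units are easier to process.']
```

Each sentence can then be passed to a word-level or subword tokenizer, so the units nest from paragraphs down to tokens.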
