Unigram

The Unigram algorithm is used in combination with SentencePiece, the tokenization algorithm used by models such as ALBERT, T5, mBART, Big Bird, and XLNet. SentencePiece addresses the fact that not all languages use spaces to separate words. Instead, SentencePiece treats the input as a raw input stream that includes the space in the set of characters to use. It can then use the Unigram algorithm to construct the appropriate vocabulary.

Byte pair encoding (BPE) is a deterministic, frequency-based tokenization method. It begins with individual characters and iteratively merges the most frequent pair of adjacent symbols to form progressively longer subword units.
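
The BPE merge loop can be sketched in a few lines of pure Python. This is a minimal illustration, not SentencePiece's or any library's implementation. It assumes the corpus is already split into words, ignores SentencePiece's whitespace handling, and breaks ties by taking the first most-frequent pair encountered. The helper names (`get_pair_counts`, `merge_pair`, `train_bpe`) are hypothetical.

```python
from collections import Counter

# A word is represented as a tuple of symbols, mapped to its corpus frequency.
Words = dict[tuple[str, ...], int]


def get_pair_counts(words: Words) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs: Counter = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair: tuple[str, str], words: Words) -> Words:
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged: Words = {}
    for symbols, freq in words.items():
        out: list[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def train_bpe(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merge rules from a list of words."""
    # Start from single characters: each word becomes a tuple of its characters.
    words: Words = dict(Counter(tuple(word) for word in corpus))
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:  # every word is already a single symbol
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        words = merge_pair(best, words)
        merges.append(best)
    return merges
```

For example, `train_bpe("hug hug hug pug pun bun hugs".split(), 3)` returns `[('u', 'g'), ('h', 'ug'), ('u', 'n')]`: `u g` is the most frequent pair (5 occurrences), after which `h ug` (4) and then `u n` (2) win. Because the most frequent pair is always chosen, the procedure is deterministic, which is the property the paragraph above highlights.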

I have been trying to understand how the Unigram tokenizer works, since it is used in the SentencePiece tokenizer that I am planning to use, but I cannot wrap my head around it. I tried to read the original paper, which contains so few details that it feels like it was written explicitly not to be understood.

WordPiece algorithm

BERT, released in 2018, popularized WordPiece, a subword tokenization algorithm that can be considered an intermediary between the BPE and Unigram algorithms.

Unigram

The Tokenizers library implements the Unigram model as one of its core tokenization algorithms, alongside BPE and WordPiece. Unigram is a statistical subword tokenization algorithm that originated in Google's SentencePiece toolkit and uses a probabilistic approach to determine the most likely segmentation of a word. This video will teach you everything there is to know about the Unigram algorithm for tokenization: how it is trained on a text corpus and how it is applied to tokenize text.
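
To make the "probabilistic approach" concrete: a unigram model assigns each vocabulary token a probability, scores a segmentation as the product of its tokens' probabilities (equivalently, a sum of log-probabilities), and picks the highest-scoring segmentation with dynamic programming (the Viterbi algorithm). The sketch below is a minimal pure-Python illustration under those assumptions, not the Tokenizers or SentencePiece code. The function name `viterbi_segment`, the `<unk>` fallback, and the toy token counts in the usage note are hypothetical.

```python
import math


def viterbi_segment(word: str, log_probs: dict[str, float]) -> tuple[list[str], float]:
    """Return the most likely segmentation of `word` and its log-probability.

    `log_probs` maps each vocabulary token to log P(token). Tokens are treated
    as independent, so a segmentation's score is the sum of its log-probs.
    """
    n = len(word)
    # best[i] = (best score for the prefix word[:i], start index of its last token)
    best: list[tuple[float, int]] = [(0.0, 0)] + [(-math.inf, -1)] * n
    for end in range(1, n + 1):
        for start in range(end):
            token = word[start:end]
            if token in log_probs and best[start][0] > -math.inf:
                score = best[start][0] + log_probs[token]
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        # No segmentation exists with this vocabulary (hypothetical fallback).
        return ["<unk>"], -math.inf
    # Walk back through the stored start indices to recover the tokens.
    tokens: list[str] = []
    end = n
    while end > 0:
        start = best[end][1]
        tokens.append(word[start:end])
        end = start
    return tokens[::-1], best[n][0]
```

For instance, with the hypothetical counts `{"h": 4, "u": 8, "g": 5, "s": 1, "hu": 4, "ug": 5, "hug": 3}` normalized into log-probabilities (total 30), `viterbi_segment("hugs", log_probs)` returns `["hug", "s"]`, because P(hug) · P(s) = 3/30 · 1/30 beats every alternative such as `hu g s` or `h ug s`. Fewer, more probable tokens usually win, since every extra token multiplies in another probability below 1.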

We'll start by establishing why tokenization matters in NLP. Then we'll dig into the technical details of byte pair encoding (BPE), WordPiece, and Unigram tokenizers, including step-by-step code samples to train them from scratch. You'll also learn best practices I've gathered over the years on fine-tuning and applying tokenizers to downstream tasks. So let's get hands-on.

Unigram tokenization

Install the Transformers, Datasets, and Evaluate libraries to run this notebook.
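
Unigram training runs in the opposite direction from BPE: it starts from a large vocabulary and shrinks it. The sketch below is a simplified, assumption-laden illustration that needs only the Python standard library. It seeds the vocabulary with every substring up to a maximum length, scores each multi-character token by how much the corpus loss (negative log-likelihood) would grow if that token were removed, and drops the tokens that matter least. It is not the Tokenizers or SentencePiece trainer: real Unigram training re-estimates token probabilities with the EM algorithm between pruning rounds, which this sketch skips by reusing the initial substring counts. All function names and parameters (`initial_counts`, `best_score`, `corpus_loss`, `prune_once`, `max_len`, `keep_ratio`) are hypothetical.

```python
import math
from collections import Counter


def initial_counts(word_freqs: dict[str, int], max_len: int = 4) -> Counter:
    """Seed vocabulary: every substring up to `max_len` chars, with its frequency."""
    counts: Counter = Counter()
    for word, freq in word_freqs.items():
        for i in range(len(word)):
            for j in range(i + 1, min(i + max_len, len(word)) + 1):
                counts[word[i:j]] += freq
    return counts


def best_score(word: str, log_probs: dict[str, float]) -> float:
    """Log-probability of the best (Viterbi) segmentation of `word`."""
    best = [0.0] + [-math.inf] * len(word)
    for end in range(1, len(word) + 1):
        for start in range(end):
            token = word[start:end]
            if token in log_probs:
                best[end] = max(best[end], best[start] + log_probs[token])
    return best[-1]


def corpus_loss(word_freqs: dict[str, int], token_counts: dict[str, int]) -> float:
    """Negative log-likelihood of the corpus, with counts normalized to probabilities."""
    total = sum(token_counts.values())
    log_probs = {t: math.log(c / total) for t, c in token_counts.items()}
    return -sum(f * best_score(w, log_probs) for w, f in word_freqs.items())


def prune_once(
    word_freqs: dict[str, int], token_counts: dict[str, int], keep_ratio: float = 0.8
) -> dict[str, int]:
    """Drop the multi-character tokens whose removal raises the loss the least.

    Single characters are never removed, so every word stays segmentable.
    """
    base = corpus_loss(word_freqs, token_counts)
    increase: dict[str, float] = {}
    for token in token_counts:
        if len(token) == 1:
            continue
        reduced = {t: c for t, c in token_counts.items() if t != token}
        increase[token] = corpus_loss(word_freqs, reduced) - base
    n_drop = int(len(token_counts) * (1 - keep_ratio))
    to_drop = set(sorted(increase, key=increase.get)[:n_drop])
    return {t: c for t, c in token_counts.items() if t not in to_drop}
```

Calling `prune_once` repeatedly until the vocabulary reaches a target size gives the overall training loop. Keeping all single characters is a design choice that guarantees every word in the training corpus can still be segmented after pruning.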

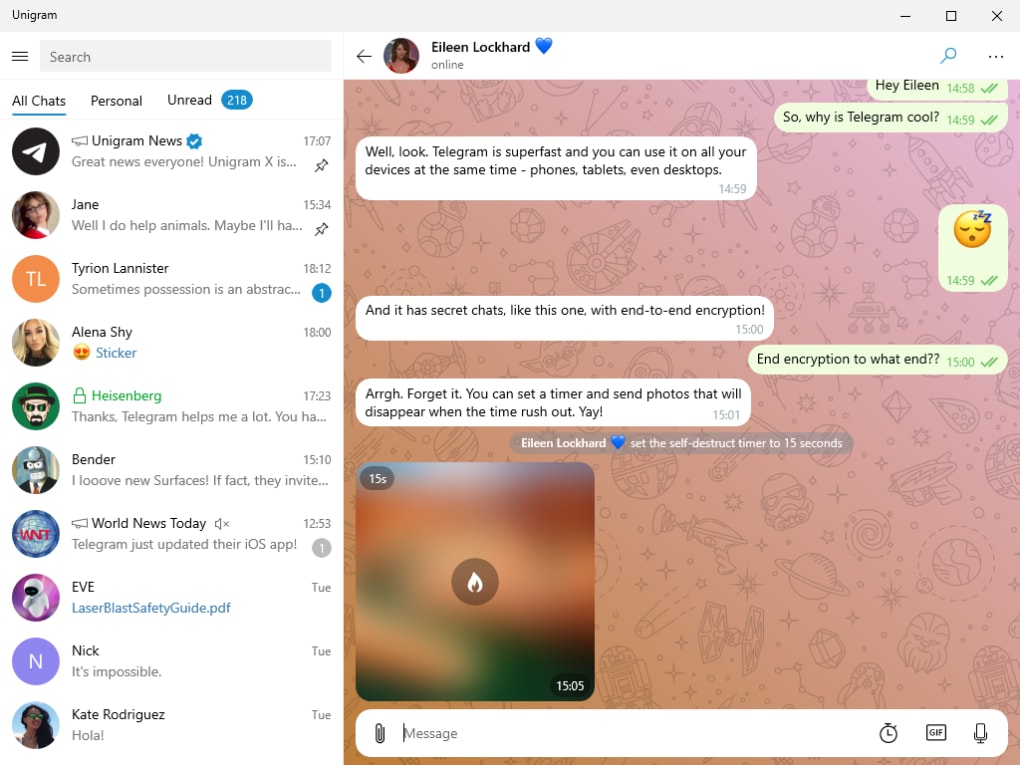


