NVIDIA's Huge AI Breakthrough Just Changed Everything (Supercut). Discover how NVIDIA is leading the charge in optimizing PyTorch for scalability and performance, with a dedicated team of experts. Explore the industry's shi.

Training Labs: Kernel Optimization for AI and Beyond: Unlocking the Power of Nsight Compute. Felix Schmitt, Sr. System Software Engineer, NVIDIA; Peter Labus, Senior System Software Engineer, NVIDIA. Learn how to unlock the full potential of NVIDIA GPUs with the powerful profiling and analysis capabilities of Nsight Compute.

Unlocking the Power of AI (Netgen). As detailed in this post, the design of the GH200 NVL2, which pairs two Grace Hopper Superchips, works in concert with high-performance, low-latency Spectrum-X networking to unlock an unprecedentedly efficient path to developing AI.

These GPUs have been designed for high-performance computing workloads and advanced AI applications. The A6000, aimed at professionals requiring substantial GPU power, and the A100, built with data centres in mind, represent the cutting edge of current GPU technology.

One of the most brag-worthy features to come out of the PyTorch 2.0 release earlier this year was the 'TorchInductor' compiler backend, which can automatically perform operator fusion and which resulted in 30 to 200% runtime performance improvements for some users.

Hey there! Are you looking to speed up your PyTorch deep learning models? Harnessing the massive parallel processing power of CUDA-enabled GPUs could be the answer. In this hands-on guide, you'll learn: why NVIDIA GPUs are purpose-built for accelerating AI workloads, how to enable CUDA GPU support for your PyTorch code, techniques to optimize and […].
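To make the operator fusion mentioned above more concrete, here is a minimal conceptual sketch in plain Python. It is not TorchInductor's implementation and does not use PyTorch at all; the function names `scale_shift_relu_unfused` and `scale_shift_relu_fused` are hypothetical and exist only for illustration. The idea it shows is that a chain of elementwise operations can be collapsed into a single pass, which avoids writing and re-reading intermediate buffers. In PyTorch 2.x, the compiler applies this kind of transformation for you when a model or function is wrapped in `torch.compile`, whose default backend is TorchInductor.

```python
# Conceptual sketch of operator fusion (plain Python, no PyTorch required).
# Assumption: each list comprehension stands in for one GPU kernel launch.


def scale_shift_relu_unfused(xs, scale, shift):
    """Three separate elementwise passes, each producing a full intermediate list."""
    scaled = [x * scale for x in xs]        # pass 1: multiply
    shifted = [s + shift for s in scaled]   # pass 2: add
    return [max(v, 0.0) for v in shifted]   # pass 3: ReLU


def scale_shift_relu_fused(xs, scale, shift):
    """One pass: the same arithmetic per element, with no intermediate lists."""
    return [max(x * scale + shift, 0.0) for x in xs]


if __name__ == "__main__":
    data = [-2.0, -0.5, 0.0, 1.5]
    unfused = scale_shift_relu_unfused(data, 2.0, 1.0)
    fused = scale_shift_relu_fused(data, 2.0, 1.0)
    # Both versions compute the same values; only the number of passes differs.
    print(unfused == fused, fused)
```

On a GPU the benefit of fusion comes mostly from reduced memory traffic and fewer kernel launches rather than from less arithmetic, so the gain depends heavily on the model, which is consistent with the wide range of speedups quoted above.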

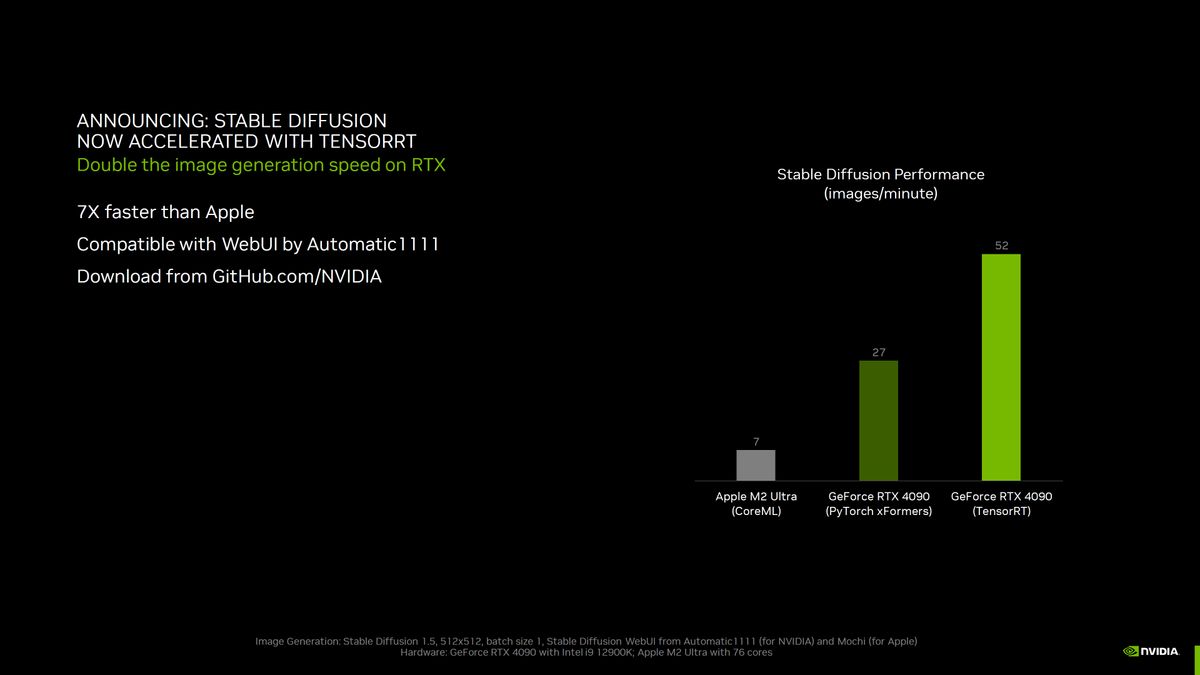

NVIDIA Boosts AI Performance with TensorRT (Tom's Hardware). Discover the performance comparison between PyTorch on Apple silicon and NVIDIA GPUs. Explore the capabilities of M1 Max and M1 Ultra chips for machine learning projects on Mac devices. Boost PyTorch performance with NVIDIA GPUs: optimize models, reduce training time, and unlock AI insights with expert GPU acceleration tips.
