Understanding LLM Context Window and Working | Matter AI Blog

The “context window” of an LLM is the maximum amount of text, measured in tokens (or sometimes words), that the model can process in a single input. It’s a crucial limitation because anything that does not fit inside the window cannot be taken into account by the model in that call. Understanding tokens and context windows is key to optimizing LLM performance, whether you’re training a new model or working with existing ones. As the field of natural language processing continues to evolve, future innovations may focus on improving how models handle tokens and context windows to create even more powerful and efficient LLMs.
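The budget check implied above can be sketched in a few lines. This is a minimal sketch, not a real tokenizer. It assumes a crude whitespace split as a stand-in for the model's tokenizer, because real LLMs use subword tokenizers whose counts will differ. It also assumes that the reply shares the window with the prompt. The helper names `count_tokens` and `fits_in_context` and the 8,192-token window are hypothetical, chosen only for illustration.

```python
def count_tokens(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer: one token per
    whitespace-separated word. Real subword tokenizers usually
    produce more tokens than words."""
    return len(text.split())


def fits_in_context(prompt: str, max_output_tokens: int, context_window: int) -> bool:
    """Return True if the prompt plus the reserved reply budget fits in the window.

    The input and the generated output occupy the same context window,
    so room for the reply has to be reserved up front.
    """
    return count_tokens(prompt) + max_output_tokens <= context_window


if __name__ == "__main__":
    CONTEXT_WINDOW = 8192  # hypothetical window size, in tokens
    prompt = "Summarize the following design document for a new teammate."
    print(count_tokens(prompt))  # 9
    print(fits_in_context(prompt, max_output_tokens=1024, context_window=CONTEXT_WINDOW))
```

Reserving the output budget is the design choice that matters here. A prompt that fills the whole window leaves the model no room to answer.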

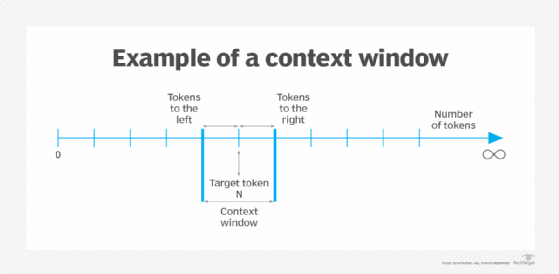

What is an LLM context window, and how does it work? Let’s take a step back: what the heck is a context window, and how does it relate to coding effectively with Cursor? To zoom out a bit, a large language model (LLM) is an artificial intelligence model trained to predict and generate text by learning patterns from massive datasets. It powers tools like Cursor by understanding your input and suggesting code or text based on what it has seen before. Tokens are the inputs.

Alright, so now that we sort of know what a token is, let’s talk about context windows. The context window is what the LLM uses to keep track of both the input prompt (what you enter) and the output (the generated text). An LLM’s context window can be thought of as the equivalent of its working memory. It determines how long a conversation the model can carry on without forgetting details from earlier in the exchange. It also determines the maximum size of the documents or code samples it can process at once. When a prompt, conversation, document, or code base exceeds an artificial intelligence model’s context window, it must be truncated or summarized before the model can proceed.
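One common way chat applications keep a conversation inside the window is to drop the oldest turns first. This is also why a model appears to “forget” early details. The sketch below assumes that strategy plus a pinned system message. It is an illustration rather than how any particular product (Cursor included) does it. `trim_history`, `count_tokens`, and the whitespace tokenizer are hypothetical.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text)


def count_tokens(text: str) -> int:
    """Hypothetical whitespace tokenizer used as a stand-in for a real one."""
    return len(text.split())


def trim_history(system: Message, history: List[Message], budget: int) -> List[Message]:
    """Keep the system message plus the most recent turns that fit in `budget` tokens.

    Turns are walked newest-first. Once the next-oldest turn would overflow the
    budget, it and everything older are dropped, so early details "fall out"
    of the model's working memory.
    """
    remaining = budget - count_tokens(system[1])
    if remaining < 0:
        raise ValueError("system message alone exceeds the budget")
    kept: List[Message] = []
    for role, text in reversed(history):
        cost = count_tokens(text)
        if cost > remaining:
            break
        kept.append((role, text))
        remaining -= cost
    kept.reverse()  # restore chronological order
    return [system] + kept


if __name__ == "__main__":
    system = ("system", "You are a helpful coding assistant.")
    history = [
        ("user", "My project uses Python 3.11 and FastAPI."),
        ("assistant", "Got it, Python 3.11 with FastAPI."),
        ("user", "Add a health-check endpoint."),
    ]
    for role, text in trim_history(system, history, budget=16):
        print(role, "->", text)
```

With a 16-token budget under this toy tokenizer, the first user turn is dropped. That turn is the one stating the project setup, so the model would no longer "see" it.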

Ever wondered why the prompt size is limited when prompting an AI model, or why it seems to forget what it was told earlier in the interaction? Context windows provide working memory to AI, and that memory is finite. The context window remains a fundamental bottleneck, but it’s also a vibrant area of research. Understanding its implications is essential for interacting effectively with LLMs and for designing applications that leverage their strengths while working around their inherent “short-term memory” limitations.
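One general workaround for inputs longer than the window is to split them into overlapping chunks and process each chunk separately, for example summarizing each chunk and then combining the summaries. This is a widely used technique, not one the text above prescribes. The sketch assumes the document has already been tokenized. `chunk_tokens` and its parameters are hypothetical names.

```python
from typing import List


def chunk_tokens(tokens: List[str], chunk_size: int, overlap: int) -> List[List[str]]:
    """Split a token sequence into chunks of at most `chunk_size` tokens.

    Consecutive chunks share `overlap` tokens, so text cut at a chunk
    boundary still appears with some surrounding context in the next chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: List[List[str]] = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the sequence
    return chunks


if __name__ == "__main__":
    document = "one two three four five six seven eight nine ten"
    for chunk in chunk_tokens(document.split(), chunk_size=4, overlap=1):
        print(" ".join(chunk))
```

The overlap trades a little redundancy (some tokens are processed twice) for less chance of splitting a relevant passage with no context on either side.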

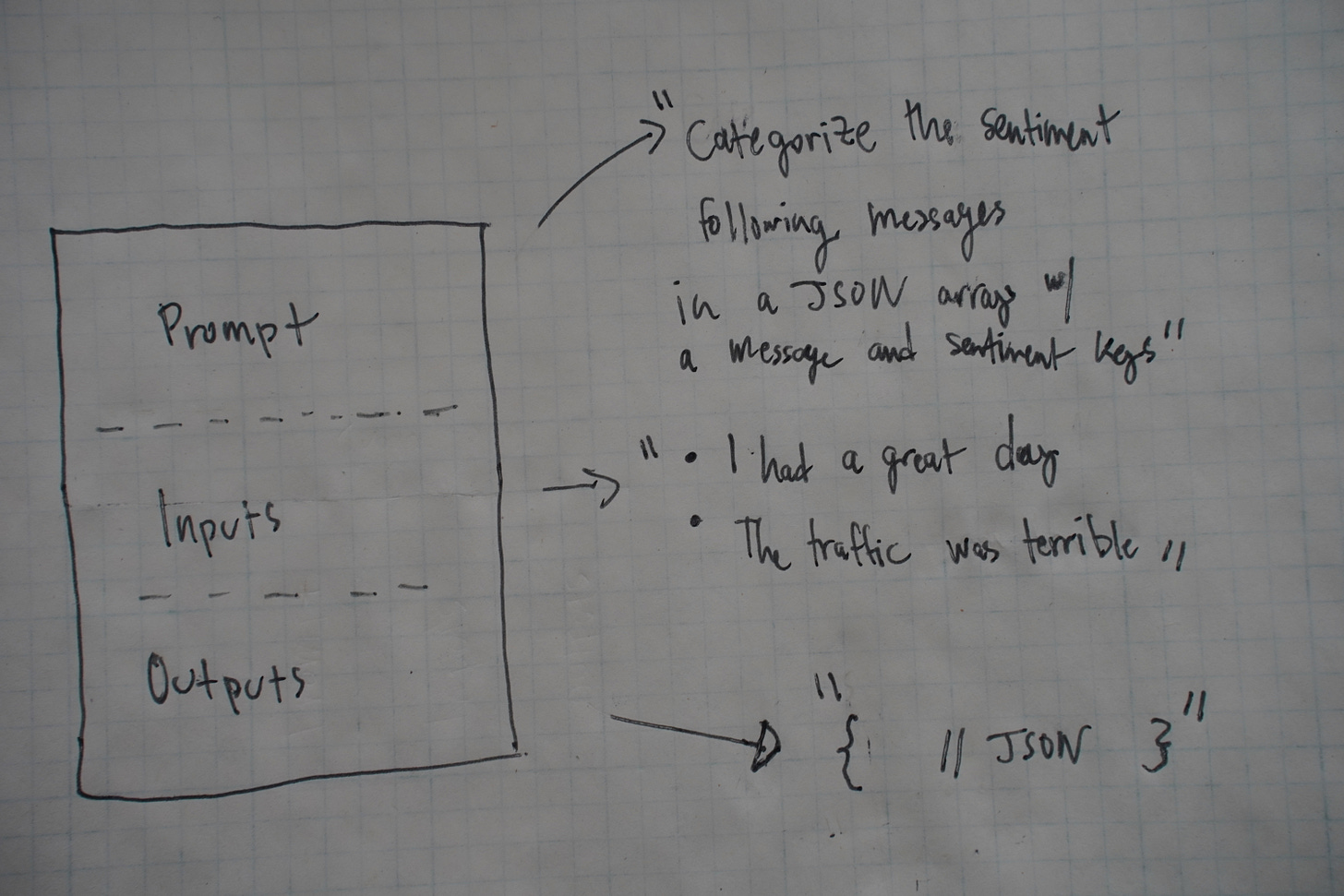

Llm Engineering Context Windows By Darlin