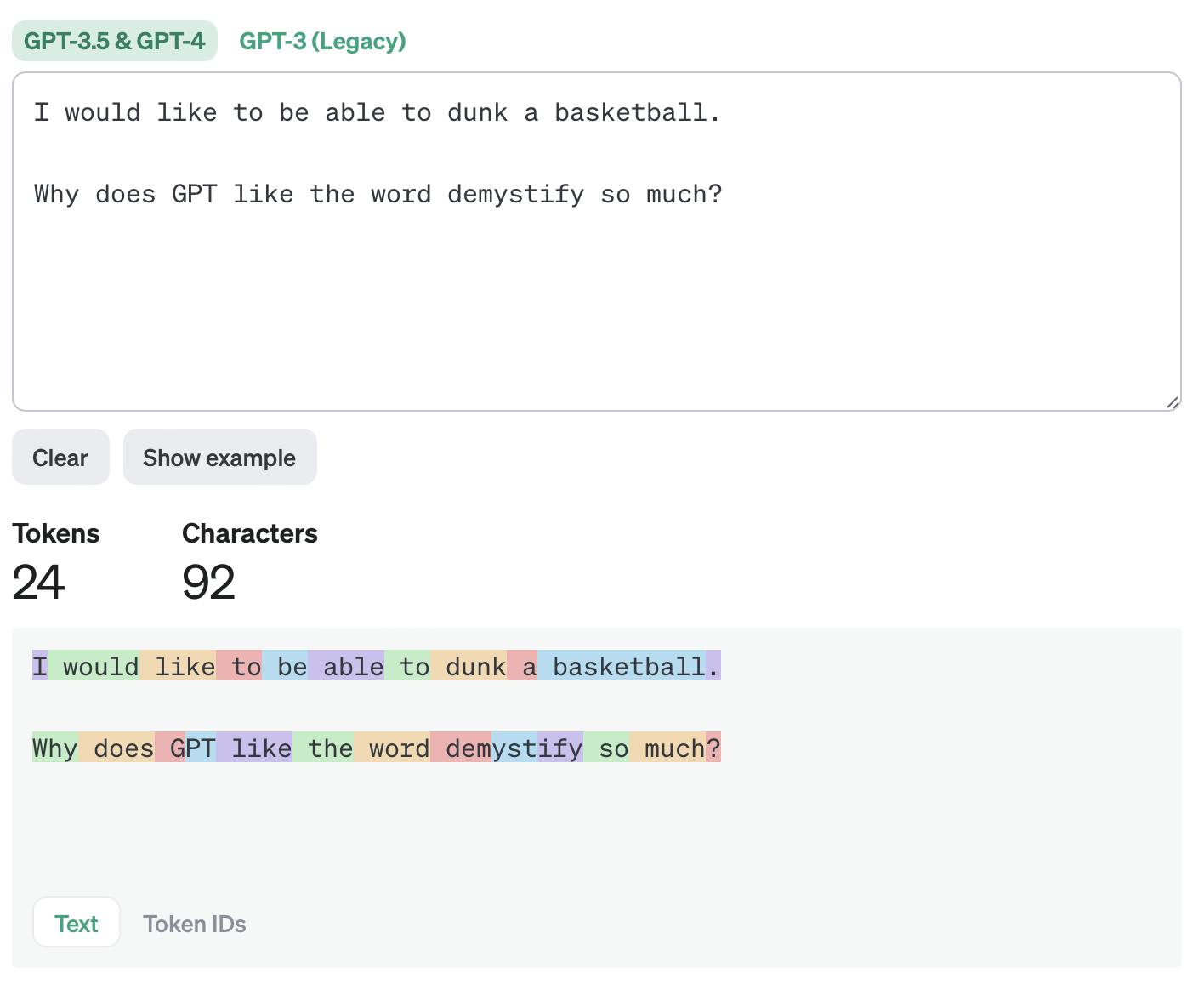

Tokenization Unveiled: How AI Understands Human Language

Conclusion: Tokenization is a foundational step in NLP that prepares text data for computational models. By understanding and implementing appropriate tokenization strategies, we enable models to process and generate human language more effectively, setting the stage for advanced topics such as word embeddings and language modeling. Tokenization also makes text easier for different models to interpret. Let's look at how tokenization works.

What is tokenization in NLP? Natural language processing (NLP) is a subfield of artificial intelligence, information engineering, and human-computer interaction. Within NLP, tokenization is the step that splits raw text into smaller units, called tokens, such as words, subwords, or characters.
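
To make the splitting step concrete, here is a minimal word-level tokenizer sketch in Python. It is an illustration under a stated assumption, not a standard algorithm: the regular expression `TOKEN_PATTERN` (a hypothetical name) treats a run of letters, digits, or underscores as one token and every punctuation character as a token of its own.

```python
import re

# Hypothetical pattern: a run of word characters (letters, digits, underscore),
# or any single character that is neither a word character nor whitespace.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def word_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens; whitespace is discarded."""
    return TOKEN_PATTERN.findall(text)


print(word_tokenize("AI doesn't read words; it reads tokens."))
# ['AI', 'doesn', "'", 't', 'read', 'words', ';', 'it', 'reads', 'tokens', '.']
```

Note how "doesn't" becomes three tokens. Production tokenizers handle such cases with their own rules or with learned subword vocabularies.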

Tokenization is the foundational process that enables large language models (LLMs) to understand and generate human language. By breaking text into smaller units called tokens, tokenization bridges the gap between raw text and the numerical representations that machines can process. Below, we explore tokenization in AI, including its applications, its various types, and the challenges it presents in natural language processing.

Tokenization has been essential to developing the current generation of AI models: modern architectures such as transformers operate on sequences of tokens and produce excellent results. This article discusses AI tokens in detail, covering how a token is defined and the role it plays in LLMs.

In the rapidly evolving world of artificial intelligence, understanding the fundamental concepts behind LLMs is essential. One such concept is tokenization, a critical step in how LLMs process and understand text. This article breaks down what tokenization is, why it matters, and how it affects the capabilities of AI across various languages and applications. By diving…
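
The bridge from tokens to numbers can be sketched as a vocabulary lookup. The Python below is a minimal illustration, assuming a whitespace-split toy corpus and a hypothetical `<unk>` placeholder for tokens the vocabulary has never seen. Real LLM tokenizers use learned subword vocabularies, but the token-to-ID mapping plays the same role.

```python
from collections import Counter

UNK = "<unk>"  # hypothetical placeholder for tokens missing from the vocabulary


def build_vocab(corpus_tokens: list[str], min_count: int = 1) -> dict[str, int]:
    """Assign an integer ID to every token seen at least min_count times.
    ID 0 is reserved for the unknown-token placeholder."""
    counts = Counter(corpus_tokens)
    vocab = {UNK: 0}
    # Sort by descending frequency, then alphabetically, so IDs are deterministic.
    for token, count in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        if count >= min_count and token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map each token to its ID, falling back to the <unk> ID."""
    return [vocab.get(t, vocab[UNK]) for t in tokens]


def decode(ids: list[int], vocab: dict[str, int]) -> list[str]:
    """Map IDs back to tokens using the inverted vocabulary."""
    inverse = {i: t for t, i in vocab.items()}
    return [inverse[i] for i in ids]


vocab = build_vocab("the cat sat on the mat".split())
ids = encode("the dog sat".split(), vocab)
print(ids)                 # [1, 0, 5]
print(decode(ids, vocab))  # ['the', '<unk>', 'sat']
```

Sorting by frequency before assigning IDs is only one design choice; it simply keeps the IDs reproducible for a given corpus.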

Extensible Tokenization: Revolutionizing Context Understanding in Large…

Understanding Tokenization in Large Language Models

In the rapidly evolving landscape of artificial intelligence, tokenization plays a pivotal role, especially in understanding and generating human language.

What is the value of AI tokenization? AI tokenization takes large chunks of data and breaks them down into manageable pieces so that machines can better understand human language. The clearest benefit of this process is more efficient processing. Better data security, often listed alongside it, belongs to a different technique that shares the name: security tokenization, which replaces sensitive values such as card numbers with non-sensitive placeholders.
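
One concrete reading of "manageable pieces" is splitting a long sequence of token IDs into windows that fit a model's fixed context length. The sketch below uses a hypothetical helper, `chunk_ids`, with a hypothetical `overlap` parameter so that consecutive windows share a few tokens of context. It is an illustration, not any particular library's API.

```python
def chunk_ids(ids: list[int], max_len: int, overlap: int = 0) -> list[list[int]]:
    """Split a token-ID sequence into windows of at most max_len IDs.
    Consecutive windows share `overlap` IDs (hypothetical parameter)."""
    if max_len <= 0:
        raise ValueError("max_len must be positive")
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must satisfy 0 <= overlap < max_len")
    step = max_len - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(ids), step):
        chunks.append(ids[start:start + max_len])
        # Stop once a window reaches the end of the sequence.
        if start + max_len >= len(ids):
            break
    return chunks


print(chunk_ids(list(range(10)), max_len=4, overlap=1))
# [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```

A larger overlap preserves more context across window boundaries at the cost of processing some tokens twice.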

Tokenization in Large Language Models (LLMs): A Key Consideration for…

Understanding Tokenization in Large Language Models: Word, Character…
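
The trade-off between word-level and character-level tokenization can be sketched in a few lines of Python. This is a toy illustration under simplifying assumptions (whitespace splitting for words, a tiny hypothetical training sentence): character tokens produce longer sequences, but they can still spell a word that the word-level vocabulary has never seen.

```python
def word_tokens(text: str) -> list[str]:
    # Word-level: split on whitespace (a simplifying assumption; real
    # word tokenizers also separate punctuation).
    return text.split()


def char_tokens(text: str) -> list[str]:
    # Character-level: every character, including spaces, is a token.
    return list(text)


def unseen(tokens: list[str], vocab: set[str]) -> list[str]:
    """Tokens that a vocabulary built from the training text cannot represent."""
    return [t for t in tokens if t not in vocab]


train = "the cat sat on the mat"
new = "the cat ate"

word_vocab = set(word_tokens(train))
char_vocab = set(char_tokens(train))

print(len(word_tokens(new)), len(char_tokens(new)))  # 3 11
print(unseen(word_tokens(new), word_vocab))          # ['ate']
print(unseen(char_tokens(new), char_vocab))          # []
```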