Data Tokenization: Strengthening Security for Users

What is tokenization in data security? Tokenization is the process of converting sensitive data into a nonsensitive digital replacement, called a token, that maps back to the original. Tokenization helps protect sensitive information: for example, sensitive data can be mapped to a token while the original value is placed in a digital vault for secure storage.
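The vault-based idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the class name `TokenVault`, the `tok_` prefix, and the use of an in-memory dictionary as the "vault" are all hypothetical. A real vault would be a separate, encrypted, access-controlled service.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only.

    A production vault would be a separate, encrypted, access-controlled store.
    """

    def __init__(self) -> None:
        # Maps each token back to the original sensitive value.
        self._vault: dict[str, str] = {}

    def tokenize(self, sensitive_value: str) -> str:
        # The token is random, so it has no mathematical relationship to the value.
        # The "tok_" prefix is an arbitrary, hypothetical marker.
        token = "tok_" + secrets.token_hex(16)
        while token in self._vault:  # regenerate on the (astronomically unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._vault[token] = sensitive_value
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can map a token back to the original.
        return self._vault[token]


if __name__ == "__main__":
    vault = TokenVault()
    token = vault.tokenize("4111 1111 1111 1111")
    print(token)                    # a random value such as tok_<32 hex characters>
    print(vault.detokenize(token))  # 4111 1111 1111 1111
```

Applications store and pass around only the token; recovering the original requires access to the vault.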

A tokenization platform turns sensitive data into nonsensitive data called tokens, which strengthens data protection and reduces fraud risk in financial transactions. The original data is stored safely in a centralized location for later reference, while the business uses the tokens in its everyday operations. In addition, the tokenization platform enables merchants to adhere …

Tokenization (data security): a simplified example of how mobile payment tokenization commonly works via a mobile phone application with a credit card.[1][2] Methods other than fingerprint scanning or PINs can be used at a payment terminal.

You’re not just keeping up with security standards; you’re setting the pace. In summary, data tokenization protects your app by making secure transactions the default, which reduces risk, builds trust, and paves the way for growth unhampered by security woes.

Tokenization is a data security technique that replaces sensitive data with nonsensitive equivalents called tokens. These tokens have no inherent meaning or value, which makes them useless to unauthorized individuals. Because a randomly generated token has no mathematical relationship to the value it replaces, it cannot be reverse engineered to reveal the original data, and this provides an extra layer of protection against data breaches. In tokenization systems, cryptographic …
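For payment data, one common pattern is a token that keeps the shape of a card number so that existing systems and receipts keep working. The sketch below assumes three illustrative rules that are not taken from any specific product: the token keeps the card number's length and last four digits, the remaining digits are drawn at random with Python's `secrets` module, and the token is regenerated until it fails the Luhn checksum so it cannot pass as a real card number. The helper names `luhn_valid` and `tokenize_card_number` are hypothetical. Because the hidden digits are random, the token reveals nothing about them, in line with the "no inherent meaning" property described above.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by card numbers."""
    total = 0
    # Walk the digits from the right, doubling every second digit.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def tokenize_card_number(pan: str) -> str:
    """Hypothetical format-preserving token: random digits plus the real last four.

    Assumed rules for this sketch: same length as the input, same last four
    digits, and the result must FAIL the Luhn check so it is never mistaken
    for a real card number.
    """
    digits = pan.replace(" ", "")
    if not digits.isdigit() or len(digits) < 12:
        raise ValueError("expected a card number of at least 12 digits")
    last_four = digits[-4:]
    while True:
        random_part = "".join(str(secrets.randbelow(10)) for _ in range(len(digits) - 4))
        token = random_part + last_four
        # About 9 in 10 random candidates fail Luhn, so this loop ends quickly.
        if not luhn_valid(token) and token != digits:
            return token


if __name__ == "__main__":
    print(tokenize_card_number("4111 1111 1111 1111"))  # 16 digits ending in 1111; the first 12 are random
```

A complete system would also record the token-to-card mapping in a vault and check that a new token does not collide with an existing one; this sketch omits both.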

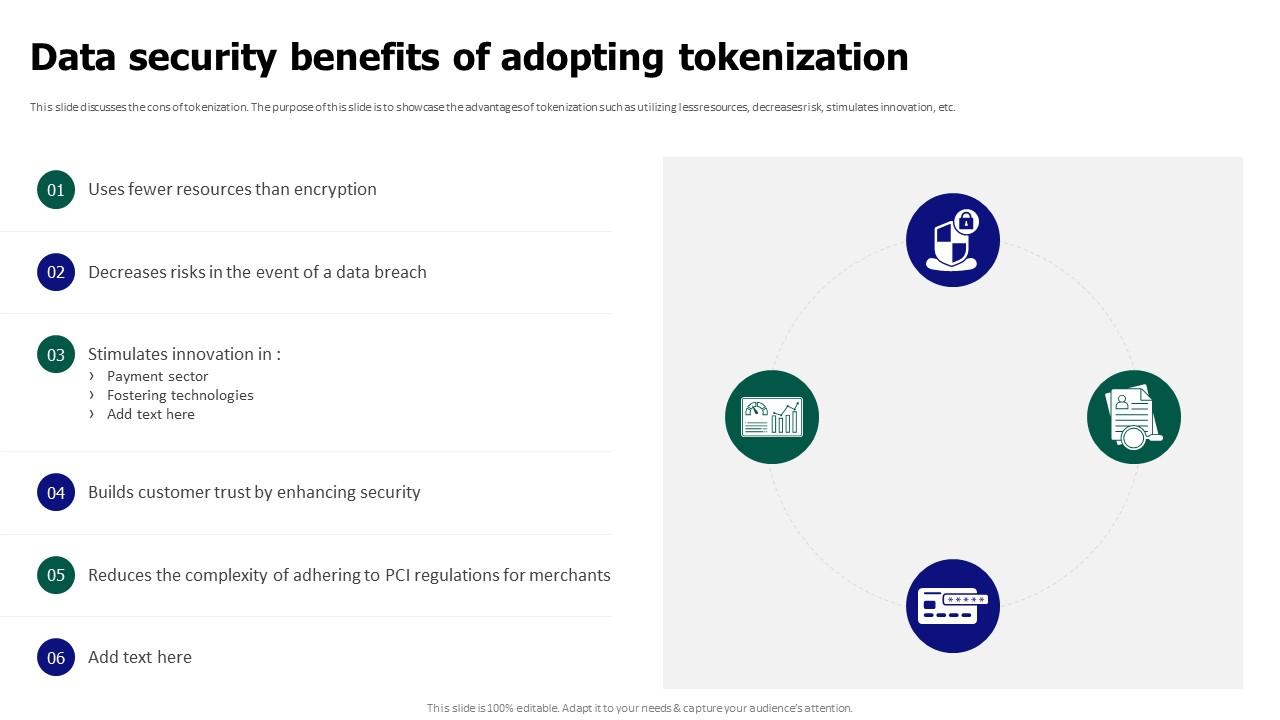

Tokenization has emerged as a crucial technique for safeguarding sensitive data in today’s digital landscape. As organizations handle an increasing amount of confidential information, they need robust security measures to protect against data breaches and to ensure compliance with regulatory standards. Tokenization offers a powerful solution by replacing sensitive data with …

Data tokenization is a data security process that replaces sensitive data with a nonsensitive value called a token. Tokens can be random numbers, strings of characters, or any other nonidentifiable value. When sensitive data is tokenized, the original data is stored securely in a token vault, and the tokens then represent the sensitive data in applications and databases.
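The paragraph above describes applications and databases working with tokens while the originals sit in a token vault. Below is a minimal sketch of that split, assuming a hypothetical `ConsistentTokenVault` class, a hypothetical set of sensitive field names, and an in-memory dictionary standing in for the vault; the application record keeps only tokens for the sensitive fields. This particular vault returns the same token when the same value is tokenized again, so the application can still match or deduplicate records by token. The trade-off is that equal tokens reveal that two records share a value, so a design might instead issue a fresh token every time.

```python
import secrets

# Hypothetical choice of which record fields count as sensitive.
SENSITIVE_FIELDS = {"ssn", "email"}


class ConsistentTokenVault:
    """Hypothetical in-memory vault that reuses one token per distinct value."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        # Reverse index (itself sensitive, so it lives inside the vault too).
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Return the existing token so equal values map to equal tokens.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = secrets.token_urlsafe(12)  # random URL-safe string, 16 characters
        while token in self._token_to_value:
            token = secrets.token_urlsafe(12)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


def tokenize_record(record: dict[str, str], vault: ConsistentTokenVault) -> dict[str, str]:
    """Return a copy of the record with sensitive fields replaced by tokens."""
    return {
        key: vault.tokenize(value) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


if __name__ == "__main__":
    vault = ConsistentTokenVault()
    row = tokenize_record({"name": "Ada", "ssn": "123-45-6789", "email": "ada@example.com"}, vault)
    print(row)  # "name" is unchanged; "ssn" and "email" hold random tokens
    print(vault.detokenize(row["ssn"]))  # 123-45-6789
```

Only the tokenized `row` would be written to the application database; the vault would live in a separately secured system.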

An Overview Of Tokenization In Data Security